Agents are the New Microservices: Why the Multi-LLM Era is Here

The future of AI isn't about finding the perfect model—it's about orchestrating the right models for the right tasks.

Remember when we thought monolithic applications were the answer? One massive codebase handling everything from user authentication to payment processing to data analytics. We learned the hard way that this approach doesn't scale. The microservices revolution taught us something fundamental: complex systems work better when you match specialized components to specific problems.

We're about to learn this lesson all over again—this time with AI agents.

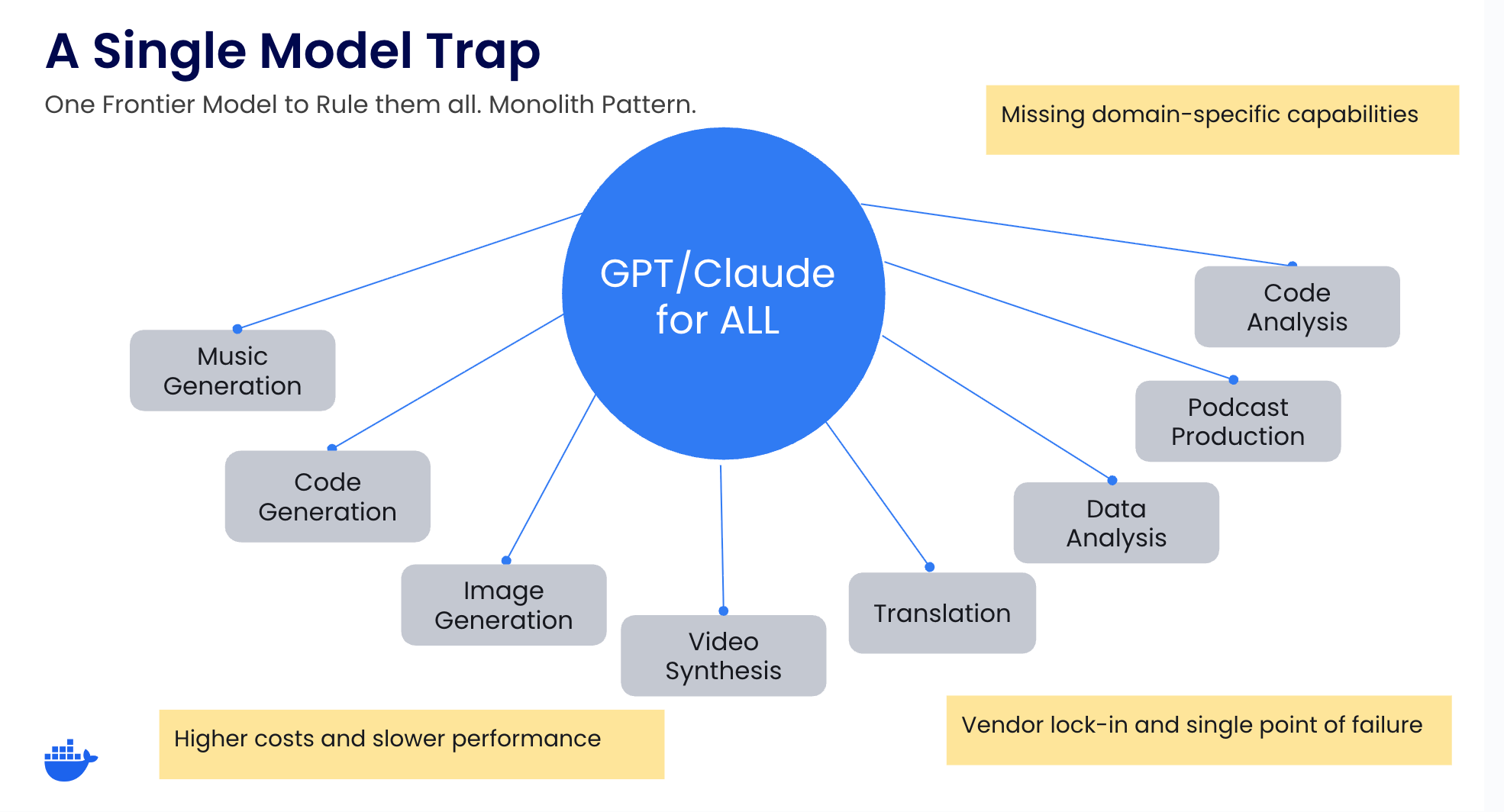

The Single-Model Trap

Right now, most teams are building agentic AI like it's 2010 all over again. One frontier model to rule them all. GPT-4 for everything. Claude for all tasks. It's the monolithic application pattern, dressed up in transformer architecture.

But here's what's actually happening in production: you need different models for different jobs.

Sometimes only a frontier model can handle complex reasoning across multiple domains. Sometimes cost constraints mean you need a local 7B parameter model. Sometimes latency requirements demand edge deployment. Sometimes privacy regulations mean data can't leave your infrastructure.

The math is simple: context engineering + the right model + the right tools = success. Miss any part of that equation, and your agent fails.

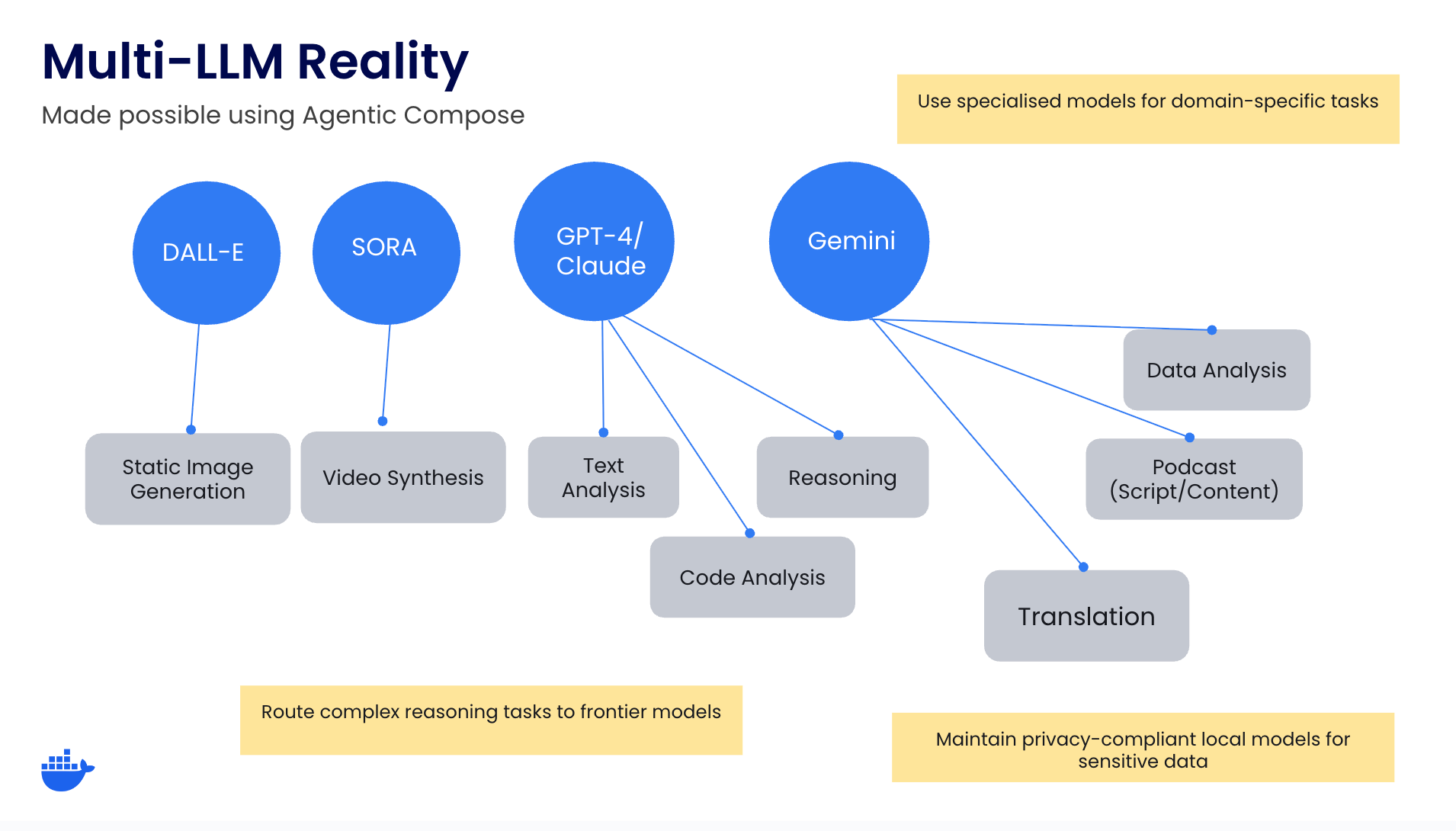

The Multi-LLM Reality

Smart teams are already building multi-LLM architectures. They're learning to:

- Route complex reasoning tasks to frontier models

- Handle simple classification with efficient local models

- Use specialized models for domain-specific tasks (code, math, legal)

- Deploy edge models for real-time interactions

- Maintain privacy-compliant local models for sensitive data
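To make the routing idea concrete, here is a minimal sketch of a rule-based router. The model names, the `Task` fields, and the latency threshold are hypothetical placeholders, not real endpoints. A production router might use a classifier or a cost model instead of fixed rules, but the shape of the decision is the same.

```python
from dataclasses import dataclass

# Hypothetical model identifiers; substitute whatever your stack actually exposes.
FRONTIER = "frontier-model"
LOCAL_SMALL = "local-7b"
CODE_SPECIALIST = "code-model"
EDGE = "edge-model"
PRIVATE_LOCAL = "private-local-model"


@dataclass
class Task:
    kind: str                   # e.g. "reasoning", "classification", "code"
    contains_pii: bool = False  # sensitive data that must stay on our infrastructure
    max_latency_ms: int = 5000  # latency budget for the response


def route(task: Task) -> str:
    """Pick a model for a task using simple, ordered rules (a sketch, not a policy)."""
    # Privacy first: sensitive data never leaves local infrastructure.
    if task.contains_pii:
        return PRIVATE_LOCAL
    # Tight latency budgets go to an edge deployment.
    if task.max_latency_ms < 200:
        return EDGE
    # Domain-specific work goes to a specialized model.
    if task.kind == "code":
        return CODE_SPECIALIST
    # Cheap, simple tasks go to a small local model.
    if task.kind == "classification":
        return LOCAL_SMALL
    # Everything else (multi-step, cross-domain reasoning) goes to the frontier model.
    return FRONTIER


if __name__ == "__main__":
    print(route(Task(kind="classification")))                # local-7b
    print(route(Task(kind="reasoning")))                     # frontier-model
    print(route(Task(kind="reasoning", contains_pii=True)))  # private-local-model
```

The order of the rules is the design choice that matters: privacy is checked before latency and cost, so a sensitive request is never sent to a remote model even when that model would be cheaper or faster.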

This isn't theoretical. It's happening right now. The question isn't whether you'll adopt multi-LLM patterns—it's whether you'll do it intentionally or stumble into it.

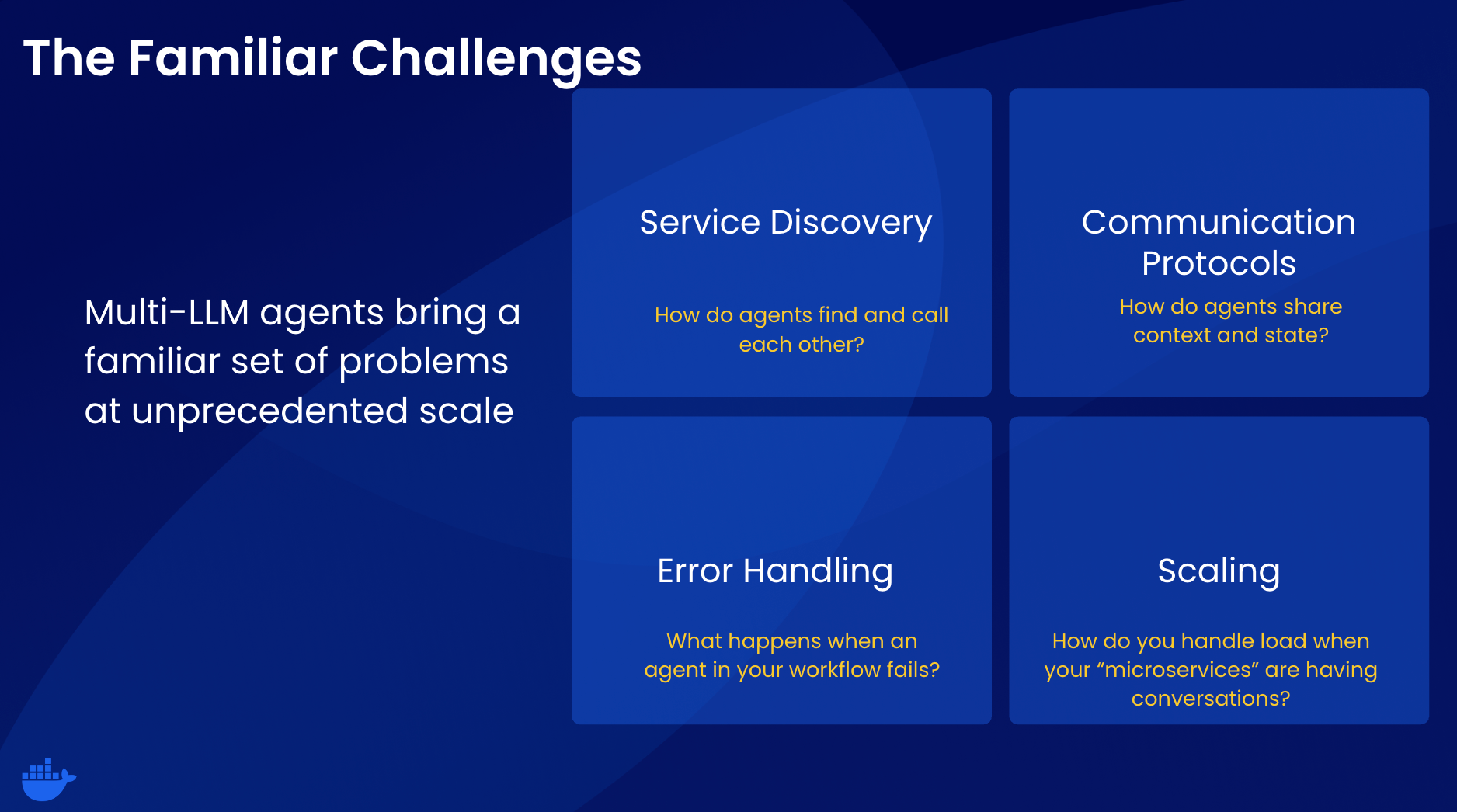

The Familiar Challenge

But multi-LLM agents bring a familiar set of problems at unprecedented scale:

- Service Discovery: How do agents find and call each other?

- Communication Protocols: How do agents share context and state?

- Error Handling: What happens when an agent in your workflow fails?

- Monitoring: How do you observe a system where components are literally thinking?

- Scaling: How do you handle load when your "microservices" are having conversations?

Sound familiar? These are the same distributed systems challenges we solved with microservices. The difference is that now your services are having creative conversations, learning from each other, and sometimes disagreeing about what to do next.
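Several of these challenges map directly onto ordinary service code. The sketch below assumes an in-memory registry rather than a real discovery service, and shows three of them in miniature: discovery by capability name, a shared message envelope that carries context and a trace ID between agents, and fallback when an agent fails. `AgentRegistry`, `Message`, and both agent functions are hypothetical, and the "agents" are plain functions standing in for model calls.

```python
import uuid
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Message:
    """Envelope passed between agents: payload plus shared context (hypothetical format)."""
    payload: str
    context: Dict[str, str] = field(default_factory=dict)
    trace_id: str = field(default_factory=lambda: uuid.uuid4().hex)


Agent = Callable[[Message], str]


class AgentRegistry:
    """In-memory service discovery: agents register under a capability name."""

    def __init__(self) -> None:
        self._agents: Dict[str, List[Agent]] = {}

    def register(self, capability: str, agent: Agent) -> None:
        self._agents.setdefault(capability, []).append(agent)

    def call(self, capability: str, msg: Message) -> str:
        """Try each registered agent in order; fall back to the next one on failure."""
        errors: List[str] = []
        for agent in self._agents.get(capability, []):
            try:
                return agent(msg)
            except Exception as exc:  # in practice, catch narrower errors (timeouts, rate limits)
                errors.append(f"{getattr(agent, '__name__', 'agent')}: {exc}")
        raise RuntimeError(f"no agent could handle {capability!r}: {errors}")


# Two hypothetical agents standing in for real model calls.
def flaky_summarizer(msg: Message) -> str:
    raise TimeoutError("upstream model timed out")


def backup_summarizer(msg: Message) -> str:
    return f"summary of {msg.payload!r} (trace {msg.trace_id[:8]})"


if __name__ == "__main__":
    registry = AgentRegistry()
    registry.register("summarize", flaky_summarizer)
    registry.register("summarize", backup_summarizer)
    print(registry.call("summarize", Message(payload="quarterly report")))
```

Because the trace ID travels inside the message rather than living in any one agent, every hop in a multi-agent conversation can be tied back to the request that started it.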

The Cloud-Native Solution

Here's the good news: these problems aren't new. The same principles that made cloud-native possible are solving them for agents:

- Build once, run anywhere: Agent containers that work across environments

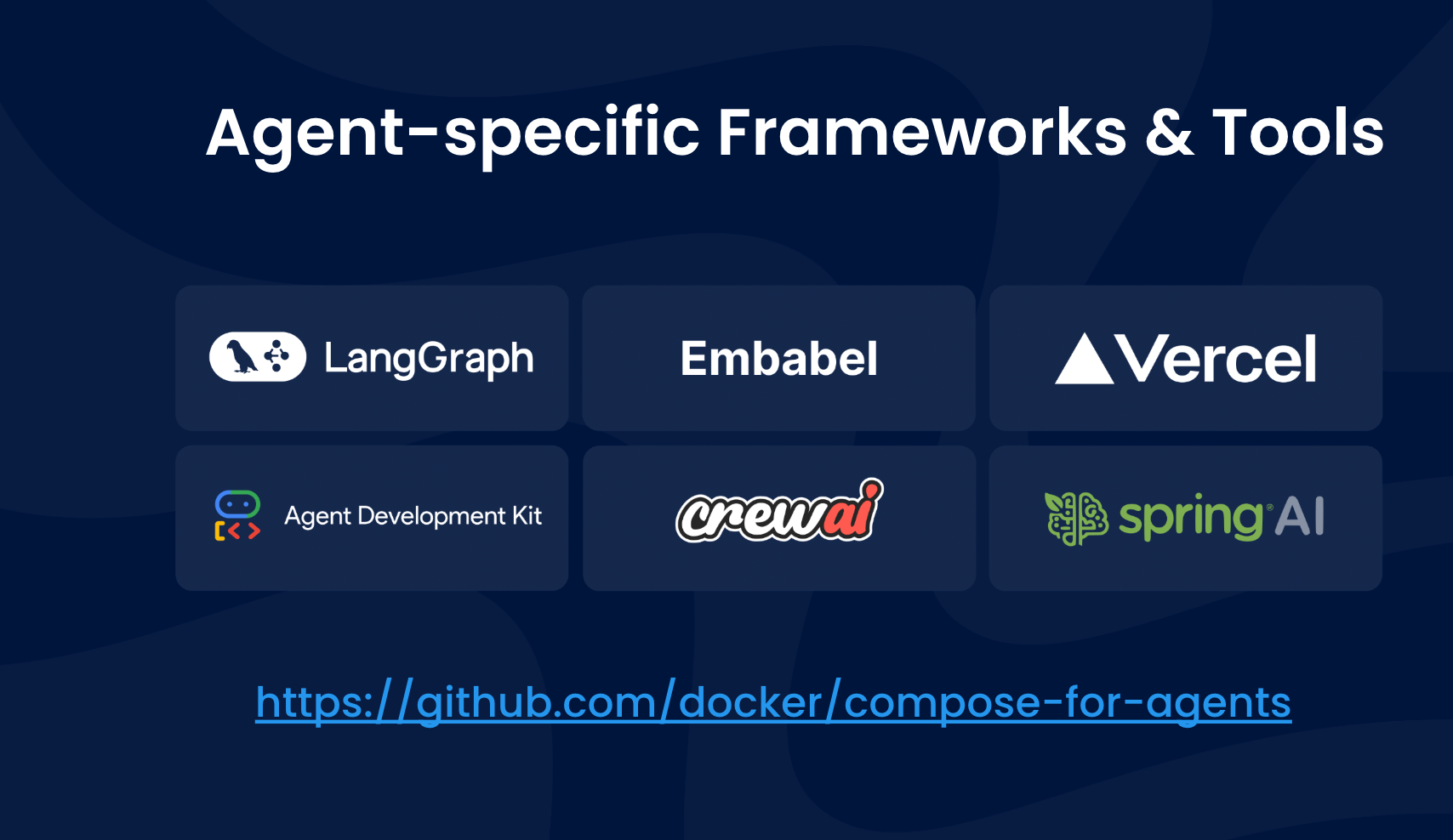

- Open source first: Community-driven agent frameworks and protocols

- Declarative configuration: Infrastructure as code for agent workflows

- Observable by default: Monitoring and tracing for agent interactions

The infrastructure patterns are emerging. Agent orchestration platforms. Service meshes for AI workflows. Distributed tracing for multi-LLM conversations. We're building the Kubernetes of agentic AI.
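Declarative configuration and observability can be combined even at small scale. As a minimal sketch, assuming a hypothetical workflow schema rather than any particular orchestration platform's format, the workflow below is plain data and the executor records one trace span per step. The agent names and the schema keys (`steps`, `agent`, `input`) are illustrative only; in a real deployment the spans would be exported to a tracing backend such as OpenTelemetry instead of collected in a list.

```python
import time
from typing import Callable, Dict, List, Tuple

# Declarative workflow spec (hypothetical schema): each step names an agent and
# which earlier output ("ticket" or a previous step id) it consumes.
WORKFLOW = {
    "name": "support-ticket",
    "steps": [
        {"id": "classify", "agent": "classifier", "input": "ticket"},
        {"id": "draft", "agent": "writer", "input": "classify"},
    ],
}

# Hypothetical agents; in practice each would wrap a call to a different model.
AGENTS: Dict[str, Callable[[str], str]] = {
    "classifier": lambda text: "billing" if "invoice" in text else "general",
    "writer": lambda label: f"Drafted reply for a {label} ticket.",
}


def run(workflow: dict, ticket: str) -> Tuple[str, List[dict]]:
    """Execute steps in order, recording a trace span per step."""
    outputs = {"ticket": ticket}
    spans: List[dict] = []
    for step in workflow["steps"]:
        start = time.perf_counter()
        result = AGENTS[step["agent"]](outputs[step["input"]])
        spans.append({
            "workflow": workflow["name"],
            "step": step["id"],
            "agent": step["agent"],
            "duration_ms": round((time.perf_counter() - start) * 1000, 3),
        })
        outputs[step["id"]] = result
    # The final step's output is the workflow's result.
    return outputs[workflow["steps"][-1]["id"]], spans


if __name__ == "__main__":
    reply, trace = run(WORKFLOW, "Question about my invoice")
    print(reply)  # Drafted reply for a billing ticket.
    for span in trace:
        print(span)
```

Keeping the workflow as data means it can be versioned, reviewed, and swapped between environments like any other infrastructure-as-code artifact, while the executor stays generic.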

What's Coming Next

This is just the beginning. As agents become truly cooperative—discovering each other, learning from interactions, forming spontaneous swarms to solve complex problems—the operational complexity will dwarf what we faced with traditional microservices.

But we're not starting from scratch. Most lessons learned from cloud-native computing apply here, and the core patterns of distributed systems carry over to multi-agent systems. We just need to adapt them for a world where our infrastructure components are having thoughts.

Want to dive deeper into practical multi-LLM patterns? I'm presenting "Agents are the New Microservices: The Multi-LLM Era" at the AgentNexus Conference on September 27 at Samarthanam Auditorium, HSR Layout, Bengaluru, India. We'll explore:

- Real-world multi-LLM architectures in production

- Practical patterns for agent-to-agent communication

- Operational strategies for AI workflows that think for themselves

- Live demos of multi-agent systems in action

The future is multi-LLM. The question is whether you'll be ready for it.

Event details: https://agentsnexus.io/

What patterns are you seeing in your multi-LLM implementations? Share your experiences in the comments below.