Beyond ChatBots - A Day of Agentic AI, Docker cagent, and Community Energy at LeadSquared

A full-day recap of the Collabnix "Cloud Native & AI Day" meetup at LeadSquared, Bengaluru ~ featuring a hands-on Docker cagent workshop, deep dives into MCP on Kubernetes, model serving strategies, and what it takes to move agentic AI from prototype to production

Last Saturday, on February 7th, the Collabnix community came together with KSUG.AI for one of our most ambitious meetups yet - "Cloud Native & AI Day: Beyond ChatBots - Let's Talk About Agentic Stack." Hosted at the LeadSquared office (3rd Floor, Omega Embassy Tech Square, Marathahalli, Bengaluru), the full-day event brought together developers, architects, DevOps engineers, and AI enthusiasts to explore how agentic AI is reshaping cloud-native infrastructure.

Morning Kickoff: Networking and Industry Perspectives

The day started at 9:00 AM with an hour of open networking. There's something special about those pre-event conversations - developers swapping war stories about Kubernetes, newcomers finding mentors, and old friends reconnecting over coffee. By the time the formal sessions began, the room was already buzzing.

Manish Bindal, Senior Director - SRE & Performance Testing, Cloud Operations at LeadSquared, kickstarted the event by welcoming the community and setting the tone for the day. Rohit Motwani, Associate Vice President - Data and AI, along with Ravi Shukla, Sr. Product Manager, followed with a talk on "Turning Lead Overload into Revenue Focus using ML" - a compelling look at how LeadSquared applies machine learning to solve real business problems. Next up was a fascinating session from Alagu Prakalya and Navaneetha Krishnana from ASAPP on "Real Engineering Beyond Vibe Coding."

Their talk was a much-needed reality check - in a world where "vibe coding" has become a trend, they reminded us what disciplined, real-world ML engineering looks like. It was a refreshing message: AI is powerful, but shipping reliable systems still demands rigor.

After a quick tea and coffee break, we split into two parallel tracks for the core of the day.

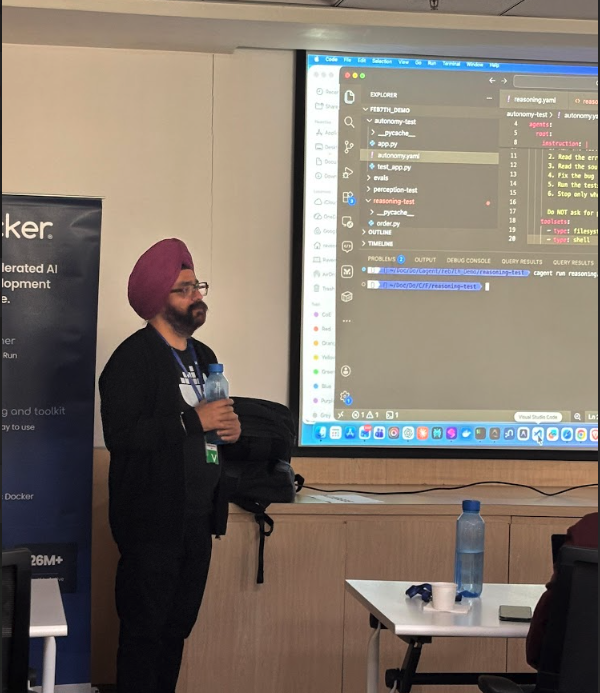

Track 1: Hands-On Workshop — Let's Build & Deploy AI Agents the Docker cagent Way

This was the session I was most excited about. I conducted a 2-hour hands-on workshop on Docker cagent ~ Docker's open-source, multi-agent runtime for building and deploying autonomous AI agent teams.

The workshop material is available at dockerworkshop.vercel.app/lab10/overview, and we walked through it step by step.

What is Docker cagent?

For those unfamiliar, cagent is a game-changer for anyone building AI agent systems. It solves the fundamental problem that today's AI agents work in isolation ~ they can't collaborate, specialize, or delegate. cagent introduces a multi-agent orchestration platform where you define teams of specialized agents using simple YAML configurations. Each agent has its own expertise, tools, and capabilities, and a root agent coordinates them to tackle complex tasks.

Key features we explored during the workshop include:

- Hierarchical Agent Teams ~ Root agents coordinating with specialized sub-agents, each with specific expertise and tools.

- Rich Tool Ecosystem ~ Built-in tools like `think`, `todo`, `memory`, `filesystem`, and `shell`, plus MCP (Model Context Protocol) integration for external tools.

- Multiple AI Provider Support ~ OpenAI, Anthropic, Gemini, and Docker Model Runner. You can even mix different models in a single conversation using "alloy models."

- Flexible Interfaces ~ CLI, Web UI, TUI, and MCP Server modes, all driven by the same YAML configs.

- Docker-Native Workflow ~ Push and pull agent configs like container images. Share agents through Docker Hub.
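
To make the hierarchical-team idea concrete, here is a minimal Python sketch of a root agent delegating to specialized sub-agents. It is not cagent's code or its YAML schema: the `Agent` class, the `keywords` field, and the keyword-matching rule are all hypothetical stand-ins, and a real runtime such as cagent would let the model decide when to delegate rather than matching strings.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Agent:
    """Hypothetical stand-in for one agent in a team (not a cagent type)."""
    name: str
    # Assumption: simple keyword hints replace the LLM-driven routing
    # that a real multi-agent runtime would perform.
    keywords: List[str] = field(default_factory=list)
    sub_agents: List["Agent"] = field(default_factory=list)

    def handle(self, task: str) -> str:
        lowered = task.lower()
        # Delegate to the first sub-agent whose keywords match the task.
        for agent in self.sub_agents:
            if any(word in lowered for word in agent.keywords):
                return agent.handle(task)
        # No specialist matched, so this agent answers on its own.
        return f"[{self.name}] handled: {task}"


# Illustrative team, loosely echoing the workshop's example teams.
docker_expert = Agent("docker_expert", keywords=["docker", "container"])
finance_analyst = Agent("finance_analyst", keywords=["revenue", "budget"])
root = Agent("root", sub_agents=[docker_expert, finance_analyst])

print(root.handle("Why does my Docker build fail?"))
print(root.handle("Summarise the meetup agenda"))
```

The first task is routed to `docker_expert`; the second matches no specialist, so `root` handles it directly. The point of the sketch is the shape of the hierarchy, one coordinator and several narrow specialists, which is what the YAML team definitions describe declaratively.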

Workshop Flow

We started with the fundamentals ~ understanding the concepts of autonomy, perception, reasoning, action, and goal-oriented behavior that underpin cagent's design. From there, participants got hands-on building their first agents, configuring tools, and eventually creating multi-agent systems including a Developer Agent, a Financial Analysis Team, and a Docker Expert Team.

A huge shoutout to Raveendiran RR, who served as a mentor throughout the workshop. While I was driving the session from the front, Raveendiran was on the ground helping attendees troubleshoot issues, fix configurations, and get unstuck. Having that kind of real-time support made all the difference ~ participants could keep pace with the workshop instead of falling behind on setup issues. Thank you, Raveendiran!

The excitement in the room was palpable. Seeing developers go from "What is cagent?" to running their own multi-agent teams within two hours was incredibly rewarding.

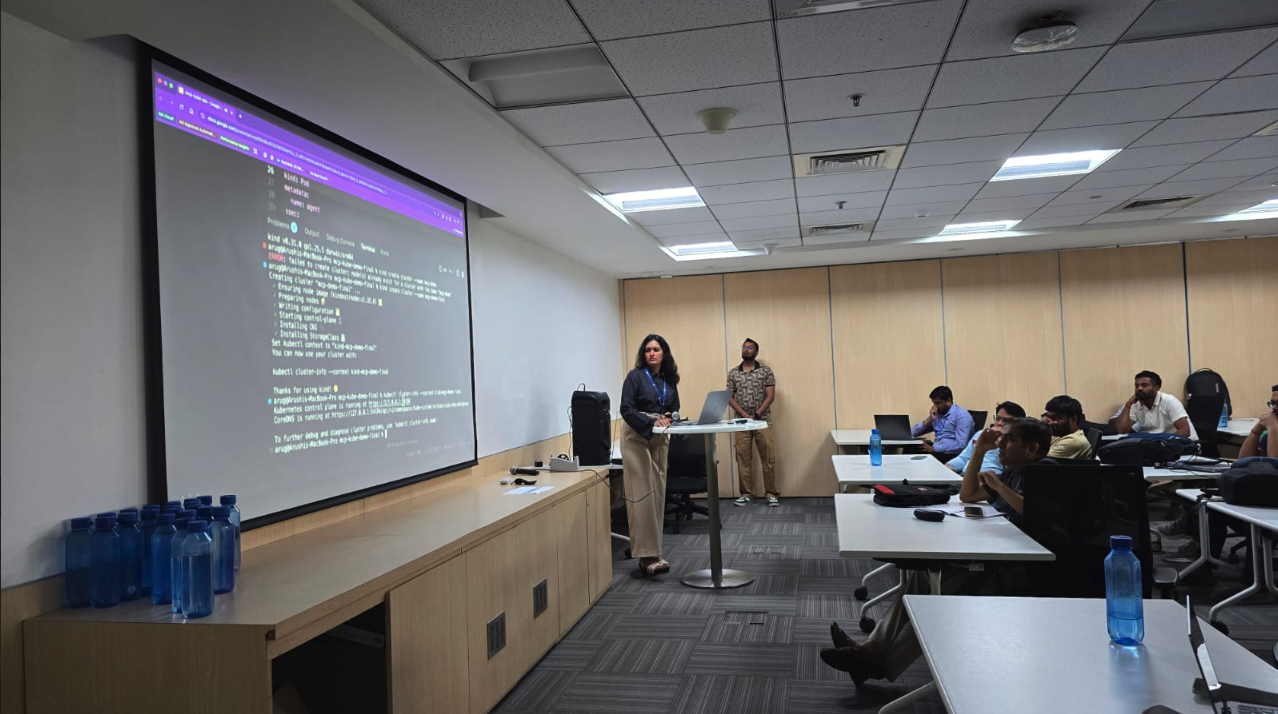

Track 2: Deep Dives into Cloud-Native AI Infrastructure

Running in parallel, Track 2 delivered a series of focused talks on the infrastructure side of the agentic AI stack.

Bala Sundaram kicked things off with "Beyond the Ingress: Orchestrating Traffic in KGateway," exploring how the Kubernetes Gateway API is evolving to handle the unique traffic patterns that agentic systems introduce.

As AI agents start making autonomous decisions about which services to call, traditional ingress controllers aren't enough anymore.

Next up, Arushi Garg (Adobe) and Shiva (Google) delivered a joint session on "Standardizing the Intelligence Layer: A Deep Dive into Model Context Protocol (MCP) on Kubernetes."

MCP is becoming the connective tissue between AI models and the tools they need, and seeing how it maps onto Kubernetes-native patterns was eye-opening for many in the audience.
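
For readers who have not seen MCP on the wire, the sketch below builds the kind of message a client sends to ask a server to run a tool. MCP messages are JSON-RPC 2.0, and `tools/call` is the method used for tool invocation. The helper `make_tool_call`, the tool name `list_pods`, and its arguments are hypothetical, picked only for illustration; none of this comes from the talk itself.

```python
import json
from typing import Any, Dict


def make_tool_call(request_id: int, tool_name: str, arguments: Dict[str, Any]) -> str:
    """Serialize a JSON-RPC 2.0 request asking an MCP server to run a tool."""
    message = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(message)


# Hypothetical tool name and arguments, chosen only for illustration.
request = make_tool_call(1, "list_pods", {"namespace": "default"})
print(request)
```

Because every tool is reached through the same request shape, a model only needs to know a tool's name and argument schema, which is what lets one protocol sit between many models and many tools.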

Prarabitha Srivastava from IISc then took us through "Scaling AI Infra: Data Pipelines, Orchestration, and Distributed Training with Ray," providing a research-grounded perspective on how distributed computing frameworks handle AI workloads at scale.

The track wrapped up with a lightning talk from Sandeep Raghuwanshi (Bureau) on "Efficient Model Serving in the Real World: From On-Prem GPUs to Cloud Native" ~ a practical look at the trade-offs teams face when deciding where and how to serve their models.

Post-Lunch: Single Track Sessions

After lunch, we consolidated into a single track for the afternoon sessions, and the quality kept building.

Abhinav Dubey from Devtron Inc. presented "From LLMs to Agentic Systems in Kubernetes," bridging the gap between the raw capabilities of large language models and the operational infrastructure needed to run them as autonomous agents in Kubernetes.

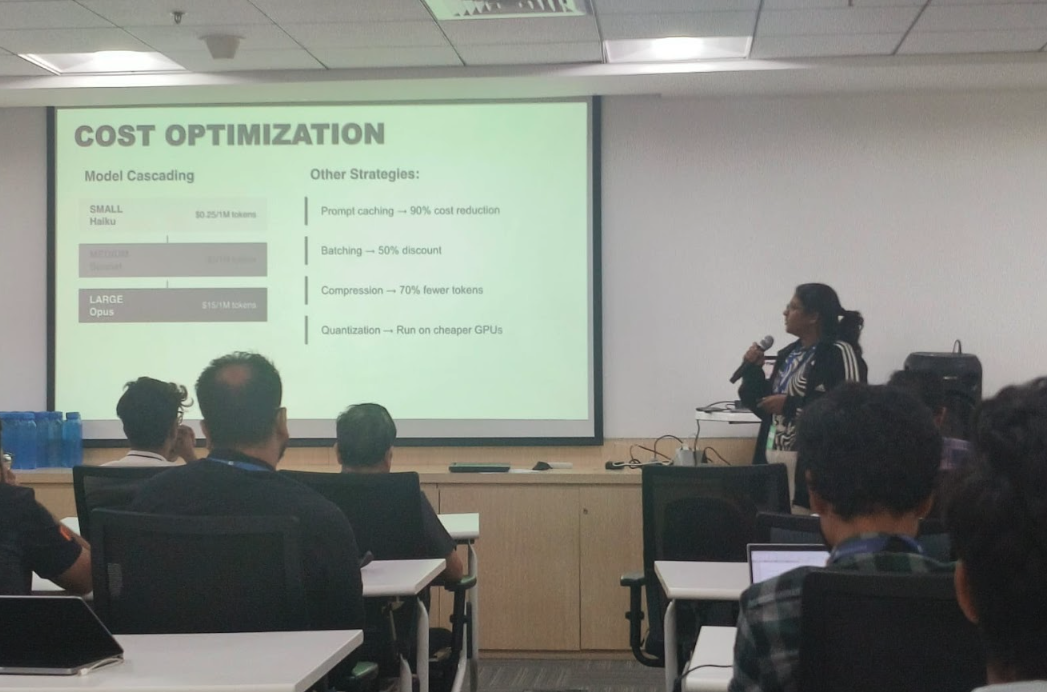

Anannya Roy Chowdhury, Gen AI Developer Advocate at AWS, delivered a critical session on "Moving Agentic Apps from Prototype to Production Responsibly." This is where many teams struggle — the gap between a demo that works on your laptop and a production system that handles real users with proper guardrails is enormous.

Amit Nikhade from IntelliConvene then gave one of the most technically impressive talks of the day: "Shrinking Medical AI from 175GB to 4GB." The techniques for model compression and optimization he shared are exactly what the industry needs as AI moves from cloud-only to edge deployments.

The day concluded with a fun quiz session and closing remarks, capping off a packed 8+ hours of learning, building, and connecting.

Key Takeaways

Looking back at the day, a few themes stood out clearly:

Agentic AI is no longer theoretical. From Docker cagent to MCP on Kubernetes, the tooling for building production-grade agent systems is maturing rapidly. Developers can start building today.

The cloud-native ecosystem is adapting. Gateway APIs, distributed training frameworks, container-native agent runtimes ~ the infrastructure layer is evolving to meet the unique demands of autonomous AI workloads.

The gap between prototype and production is real, but solvable. Multiple talks addressed this directly, and the consensus is clear: responsible deployment requires observability, fault tolerance, security, and proper orchestration.

Community makes the difference. The energy, questions, and hallway conversations were just as valuable as the talks themselves. That's what makes Collabnix meetups special.

Resources

- Workshop Material: dockerworkshop.vercel.app/lab10/overview

- Docker cagent GitHub: github.com/docker/cagent

- Event Photos: Google Drive Album

- Meetup Page: meetup.com/collabnix/events/313001410

What's Next?

The Collabnix community continues to grow, and we have more meetups lined up for 2026. If you missed this one, make sure to join us on Slack or Discord to stay updated on upcoming events.

Special thanks to Anand Kumar Rai, SRE at LeadSquared, for all his help and support in making this event a success, even while going through a difficult time himself.

The conversation about agentic AI in cloud-native environments is just getting started. See you at the next one!