How to Handle CORS Settings in Docker Model Runner

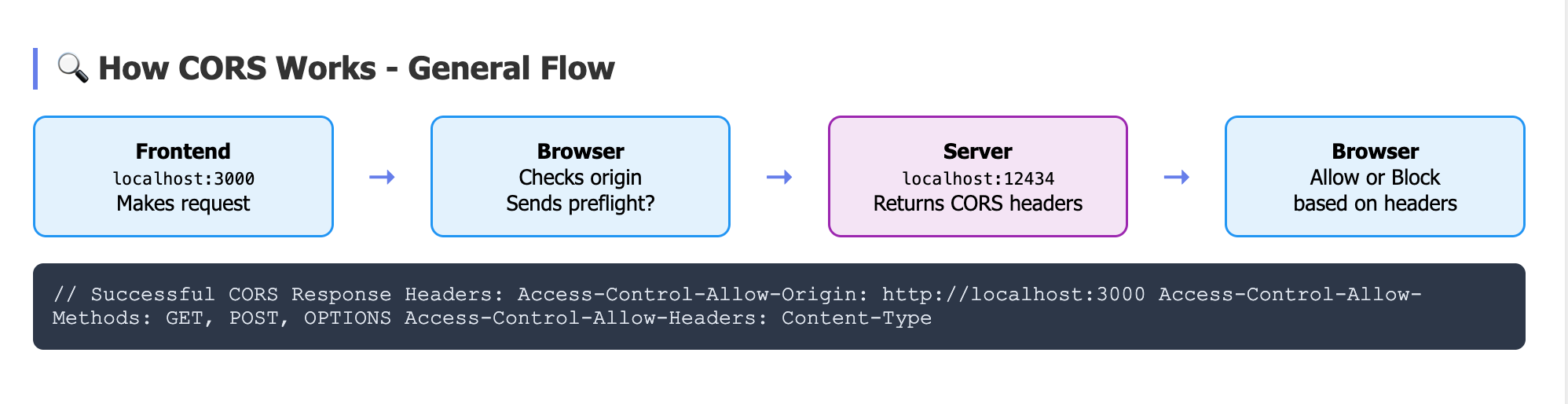

Cross-Origin Resource Sharing (CORS) is a security feature implemented by web browsers to control how web applications can request resources from different domains, ports, or protocols. CORS works alongside the Same-Origin Policy, a fundamental web security concept that prevents a webpage from one origin from reading resources from another origin. CORS is the mechanism a server uses to grant that permission explicitly.

Understanding CORS: The Foundation

When a web application running on http://localhost:3000 tries to make an API call to http://localhost:8080, the browser treats this as a "cross-origin" request because the ports differ. Without proper CORS configuration, the browser blocks the page from reading the response and reports the familiar error: "Access to fetch has been blocked by CORS policy."
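An origin is the combination of scheme, host, and port, so changing any one of them makes a request cross-origin. A minimal sketch of that comparison using the standard URL class (isSameOrigin is a hypothetical helper name):

```javascript
// Two URLs share an origin only if scheme, host, and port all match.
// URL#origin serializes exactly that triple (default ports are omitted).
function isSameOrigin(a, b) {
  return new URL(a).origin === new URL(b).origin;
}

console.log(isSameOrigin('http://localhost:3000', 'http://localhost:8080/api')); // false: ports differ
console.log(isSameOrigin('http://localhost:3000/', 'http://localhost:3000/chat')); // true: paths don't matter
```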

How CORS Works:

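- Preflight Request: For non-simple requests (for example, methods other than GET, HEAD, or POST, or a Content-Type such as application/json), browsers first send an OPTIONS request to check permissions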

- Server Response: The server responds with CORS headers indicating which origins, methods, and headers are allowed

- Browser Decision: The browser either allows or blocks the actual request based on these headers

- Actual Request: If permitted, the browser sends the real request
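Whether step one happens at all depends on the request. The function below is a simplified, hypothetical approximation of the Fetch standard's rules, assuming only the CORS-safelisted methods (GET, HEAD, POST) and the basic safelisted request headers; it deliberately ignores edge cases such as header value length limits and the Range header.

```javascript
// Simplified check for whether a browser sends a CORS preflight (OPTIONS)
// before a cross-origin fetch. Hypothetical helper; approximation only.
const SAFELISTED_METHODS = new Set(['GET', 'HEAD', 'POST']);
const SAFELISTED_HEADERS = new Set(['accept', 'accept-language', 'content-language', 'content-type']);
const SAFELISTED_CONTENT_TYPES = new Set([
  'application/x-www-form-urlencoded',
  'multipart/form-data',
  'text/plain',
]);

function needsPreflight(method, headers = {}) {
  // Any method outside GET/HEAD/POST triggers a preflight.
  if (!SAFELISTED_METHODS.has(method.toUpperCase())) return true;

  for (const [name, value] of Object.entries(headers)) {
    const lower = name.toLowerCase();
    // Any header outside the safelist (e.g. Authorization) triggers a preflight.
    if (!SAFELISTED_HEADERS.has(lower)) return true;
    // Content-Type is safelisted only for three MIME types; parameters are ignored.
    if (lower === 'content-type') {
      const mime = String(value).split(';')[0].trim().toLowerCase();
      if (!SAFELISTED_CONTENT_TYPES.has(mime)) return true;
    }
  }
  return false;
}

console.log(needsPreflight('GET')); // false
console.log(needsPreflight('POST', { 'Content-Type': 'application/json' })); // true
```

Under this approximation, the JSON POST to /engines/llama.cpp/v1/completions used in the tests later in this post is preflighted, while the plain GET requests are not.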

Key CORS Headers:

- Access-Control-Allow-Origin: Specifies which origins can access the resource

- Access-Control-Allow-Methods: Lists allowed HTTP methods (GET, POST, PUT, etc.)

- Access-Control-Allow-Headers: Defines permitted request headers

- Access-Control-Allow-Credentials: Controls whether cookies and credentials can be sent
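To make these headers concrete, here is a minimal sketch of how a server might choose them for one request. corsResponseHeaders is a hypothetical helper, not a Docker Model Runner API, and the methods and request headers it allows are illustrative assumptions. It encodes two rules browsers enforce: Access-Control-Allow-Origin carries a single origin (or *), and * is not accepted when credentials are allowed.

```javascript
// Hypothetical helper: picks CORS response headers for a request whose Origin
// header is `requestOrigin`, given an allowlist such as ['http://localhost:3000']
// or ['*']. Allowed methods/headers below are illustrative assumptions.
// Returns {} when the origin is not allowed, so the browser blocks the response.
function corsResponseHeaders(requestOrigin, allowedOrigins, { allowCredentials = false } = {}) {
  if (!requestOrigin) return {}; // no Origin header: treat as a non-CORS request

  const headers = {
    'Access-Control-Allow-Methods': 'GET, POST, OPTIONS',
    'Access-Control-Allow-Headers': 'Content-Type',
  };

  if (allowedOrigins.includes('*') && !allowCredentials) {
    // Any origin may read the response, but without cookies or credentials.
    headers['Access-Control-Allow-Origin'] = '*';
    return headers;
  }

  // Credentialed requests (or a strict allowlist) need an exact origin match.
  if (!allowedOrigins.includes(requestOrigin)) return {};

  headers['Access-Control-Allow-Origin'] = requestOrigin; // exactly one origin
  headers['Vary'] = 'Origin'; // response differs per Origin, so caches must key on it
  if (allowCredentials) headers['Access-Control-Allow-Credentials'] = 'true';
  return headers;
}

console.log(corsResponseHeaders('http://localhost:3000', ['http://localhost:3000']));
```

Echoing the caller's exact origin from an allowlist, rather than sending *, is what lets a server support credentials without opening itself to every site.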

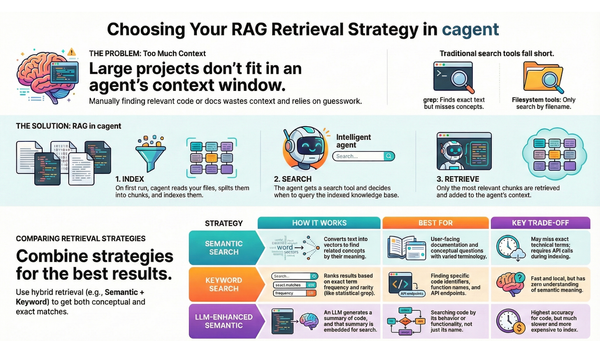

CORS in GenAI Applications: The Challenge

Modern GenAI applications typically involve multiple services running on different ports:

- Frontend Application (React/Vue/Angular) on port 3000

- Backend API Server (Node.js/Go/Python) on port 8080

- AI Model Runner (Local LLM service) on port 12434

- Observability Stack (Grafana, Prometheus) on various ports

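Each request the browser makes from the frontend to a service on another port crosses a CORS boundary. Calls between backend services (for example, the Go backend calling Model Runner) are not subject to CORS, because only browsers enforce it. Traditional solutions involved one of the following:

- Setting a permissive Access-Control-Allow-Origin: * header (insecure)

- Complex proxy configurations (adds latency)

- Same-origin deployment (limits development flexibility)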

How Docker Desktop solves this problem

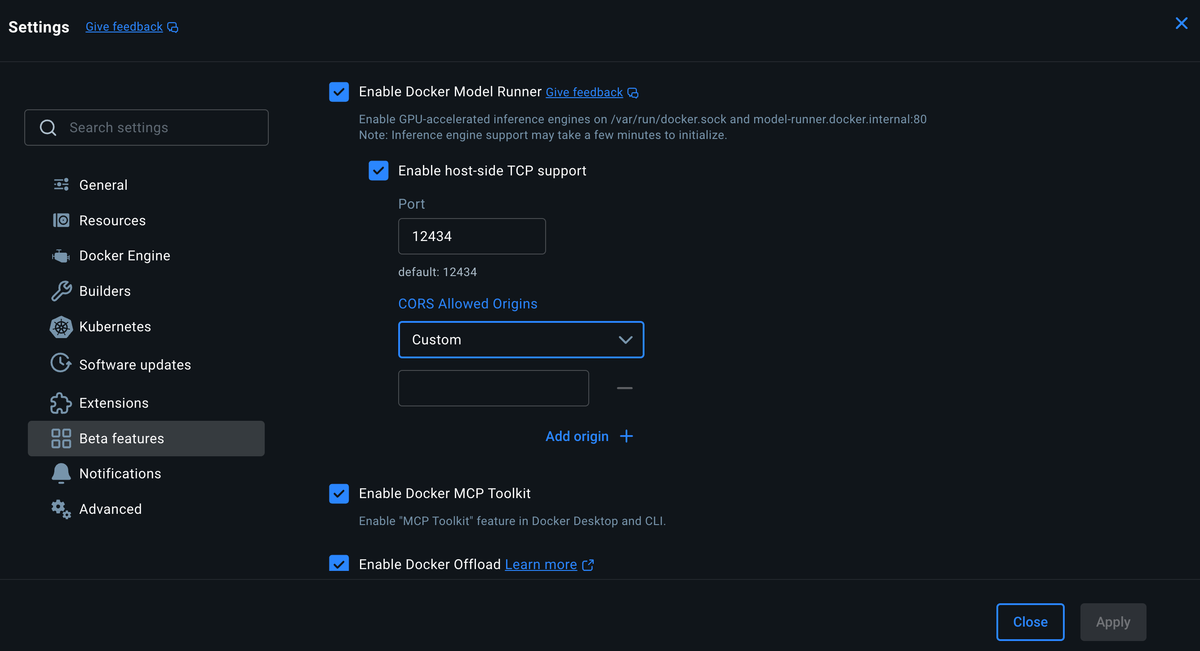

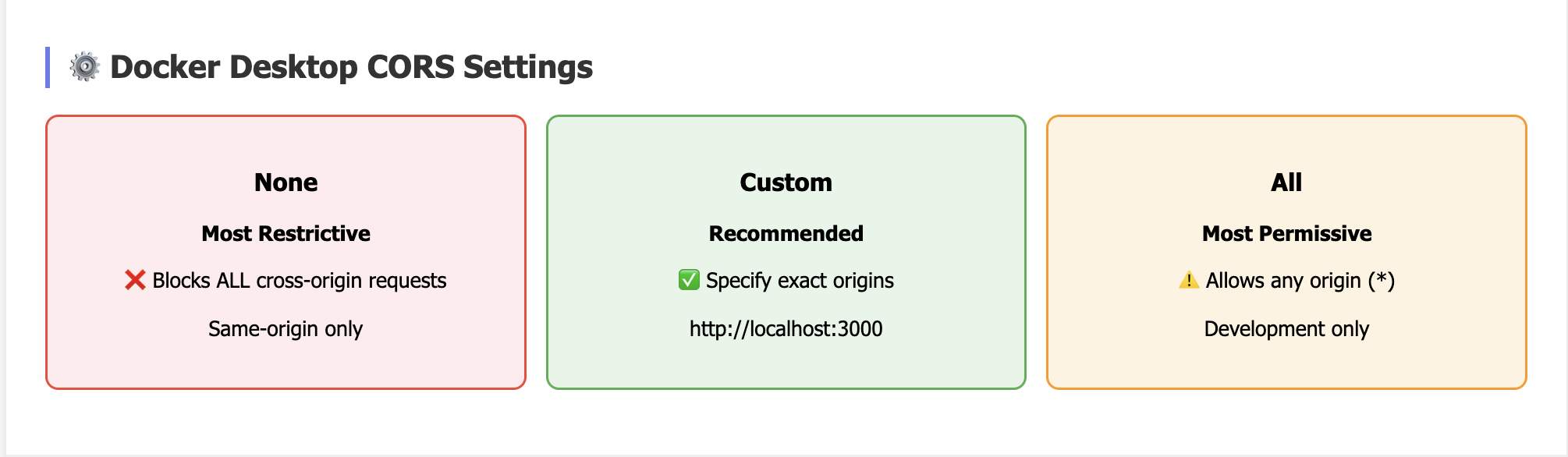

Docker Desktop 4.43.0 changes this by providing granular CORS control directly in the Model Runner settings, eliminating the need for workarounds while maintaining security.

Docker Desktop finally gives developers granular CORS control for Model Runner

Let's explore this using a real GenAI chat application that demonstrates the exact CORS challenges developers face and how the new configuration solves them.

The Demo Application Architecture

We'll use the genai-model-runner-metrics repository, which showcases a complete AI chat application with:

```text
┌─────────────────┐    ┌─────────────────┐    ┌─────────────────┐
│    React App    │───▶│   Go Backend    │───▶│  Model Runner   │
│ localhost:3000  │    │ localhost:8080  │    │ localhost:12434 │
└────────┬────────┘    └────────┬────────┘    └────────┬────────┘
         │                      │                      │
         │             ┌────────▼────────┐             │
         └────────────▶│  Observability  │◀────────────┘
                       │   Prometheus    │
                       │     Grafana     │
                       │     Jaeger      │
                       └─────────────────┘
```

All running in Docker containers.

Step 1: CORS = "None"

Reset Docker Desktop CORS:

- Docker Desktop → Settings → Beta features → Model Runner

- Set CORS to "None" (most restrictive)

- Restart Model Runner (uncheck/check "Enable Docker Model Runner")

- Wait for full restart

Verify Model Runner is Running:

```bash
curl http://localhost:12434/
```

Expected output:

```text
Docker Model Runner

The service is running.

Documentation: https://docs.docker.com/desktop/features/model-runner/
```

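Next, open the DevTools console on a page served from a different origin (for example, the React app on http://localhost:3000) and send a first cross-origin request:

```javascript
fetch('http://localhost:12434/engines/llama.cpp/v1/models')
  .then(r => r.json())
  .then(d => console.log('✅ Engine endpoint with CORS=None:', d))
  .catch(e => console.error('❌ Engine endpoint with CORS=None:', e.message));
```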

Now Test Engine-Specific Endpoints

With CORS still set to "None", let's test the engine endpoints:

```javascript
// Test 2a: Engine models (GET)
fetch('http://localhost:12434/engines/llama.cpp/v1/models')
  .then(r => r.json())
  .then(d => console.log('✅ Engine models with CORS=None:', d))
  .catch(e => console.error('❌ Engine models with CORS=None:', e.message));

// Test 2b: Engine completions (GET)
fetch('http://localhost:12434/engines/llama.cpp/v1/completions')
  .then(r => console.log('✅ Engine completions GET with CORS=None:', r.status))
  .catch(e => console.error('❌ Engine completions GET with CORS=None:', e.message));

// Test 2c: Engine completions (POST)
fetch('http://localhost:12434/engines/llama.cpp/v1/completions', {
  method: 'POST',
  headers: { 'Content-Type': 'application/json' },
  body: JSON.stringify({
    model: 'ai/llama3.2:1B-Q8_0',
    prompt: 'Hi',
    max_tokens: 1
  })
})
  .then(r => console.log('✅ Engine completions POST with CORS=None:', r.status))
  .catch(e => console.error('❌ Engine completions POST with CORS=None:', e.message));
```

Complete Results with CORS = "None":

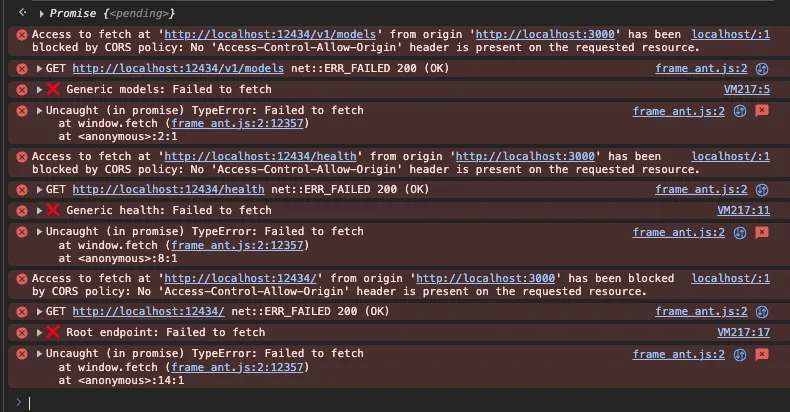

❌ ALL Endpoints BLOCKED:

Generic Endpoints:

- /v1/models → CORS blocked

- /health → CORS blocked

- / (root) → CORS blocked

Engine-Specific Endpoints:

- /engines/llama.cpp/v1/models → CORS blocked

- /engines/llama.cpp/v1/completions (GET) → CORS blocked

- /engines/llama.cpp/v1/completions (POST) → CORS blocked

Key Finding:

Docker Desktop CORS = "None" blocks ALL endpoints uniformly!
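Re-running every check by hand after each settings change gets repetitive. A minimal sketch of a batch runner for the browser console follows; runProbes is a hypothetical helper, and its fetchImpl parameter exists only so the function can be exercised without a live server. In a browser, a CORS block rejects fetch() with a TypeError whose message does not say why, so a rejection is reported as "blocked or unreachable"; a response that passes the CORS check resolves even with an error status such as 405.

```javascript
// Hypothetical batch runner for the endpoint checks, meant for the DevTools
// console of a page on another origin. `fetchImpl` defaults to the global
// fetch and exists only so the function can be tested without a server.
async function runProbes(baseUrl, probes, fetchImpl = fetch) {
  const results = [];
  for (const { path, init = {} } of probes) {
    const method = init.method || 'GET';
    try {
      const res = await fetchImpl(baseUrl + path, init);
      // The response passed the CORS check, so its status is readable.
      results.push({ path, method, outcome: `HTTP ${res.status}` });
    } catch (err) {
      // CORS blocks and network failures both reject with an opaque TypeError.
      results.push({ path, method, outcome: `blocked or unreachable (${err.message})` });
    }
  }
  return results;
}

// Example usage in the console:
// runProbes('http://localhost:12434', [
//   { path: '/' },
//   { path: '/health' },
//   { path: '/v1/models' },
//   { path: '/engines/llama.cpp/v1/models' },
//   { path: '/engines/llama.cpp/v1/completions' },
//   { path: '/engines/llama.cpp/v1/completions', init: {
//     method: 'POST',
//     headers: { 'Content-Type': 'application/json' },
//     body: JSON.stringify({ model: 'ai/llama3.2:1B-Q8_0', prompt: 'Hi', max_tokens: 1 }),
//   } },
// ]).then(console.table);
```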

Step 2: Test with CORS = "All"

- Set CORS to "All" (most permissive)

- Restart Model Runner (uncheck/check Enable Docker Model Runner)

- Wait for restart

Test the Same Endpoints:

```javascript
// Test all the same endpoints with CORS="All"

// Generic endpoints
fetch('http://localhost:12434/v1/models')
  .then(r => r.json())
  .then(d => console.log('✅ Generic models with CORS=All:', d))
  .catch(e => console.error('❌ Generic models with CORS=All:', e.message));

fetch('http://localhost:12434/health')
  .then(r => r.text())
  .then(d => console.log('✅ Generic health with CORS=All:', d))
  .catch(e => console.error('❌ Generic health with CORS=All:', e.message));

// Engine endpoints
fetch('http://localhost:12434/engines/llama.cpp/v1/models')
  .then(r => r.json())
  .then(d => console.log('✅ Engine models with CORS=All:', d))
  .catch(e => console.error('❌ Engine models with CORS=All:', e.message));
```

This will tell us the full scope of Docker Desktop's CORS control!

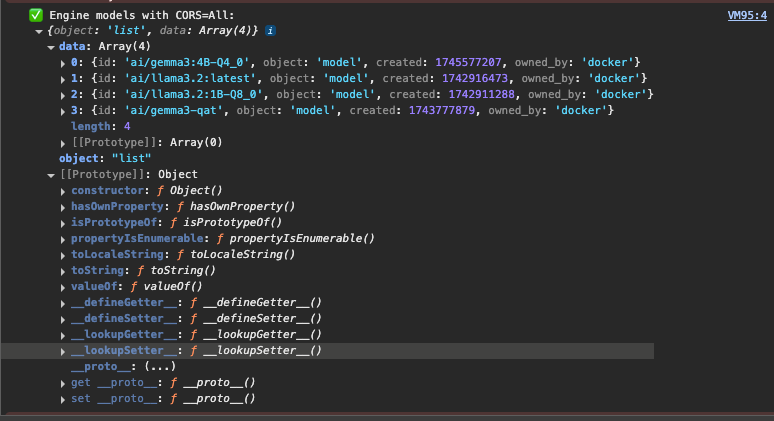

Results with CORS = "All":

❌ Generic Endpoints STILL BLOCKED:

- /v1/models → Still CORS blocked!

- /health → Still CORS blocked!

✅ Engine Endpoint WORKS:

- /engines/llama.cpp/v1/models → SUCCESS! Returns model data!

The Pattern is Clear:

Docker Desktop 4.43.x CORS configuration ONLY applies to:

- ✅ Engine-specific endpoints: /engines/{engine}/v1/*

Docker Desktop CORS does NOT affect:

- ❌ Generic Model Runner endpoints: /v1/*, /health, /

Step 2 Complete Summary - CORS = "All":

| Endpoint Type | Endpoint | Method | Result | Details |
|---|---|---|---|---|
| Generic | /v1/models | GET | ❌ Blocked | No CORS headers |
| Generic | /health | GET | ❌ Blocked | No CORS headers |
| Generic | / | GET | ❌ Blocked | No CORS headers |
| Engine | /engines/llama.cpp/v1/models | GET | ✅ Works | Success |
| Engine | /engines/llama.cpp/v1/completions | GET | ❌ Blocked | No CORS headers |
| Engine | /engines/llama.cpp/v1/completions | POST | ❌ Blocked | Duplicate headers |
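The two failure reasons in the table correspond to the check a browser applies to the response's Access-Control-Allow-Origin header. The sketch below is a simplified, hypothetical helper (corsCheck is not part of Docker Desktop or any library) that approximates the Fetch standard's CORS check, using the WHATWG Headers class available in browsers and Node.js 18+. When the same header is sent twice, Headers.get() joins the values with ", ", and that combined value can never equal a single origin, so the browser rejects the response. The duplicated values in the example are illustrative, not captured from Model Runner.

```javascript
// Hypothetical helper approximating the browser's CORS check on a response.
// `headers` is a WHATWG Headers object; `pageOrigin` is the calling page's origin.
function corsCheck(headers, pageOrigin, { withCredentials = false } = {}) {
  const allowOrigin = headers.get('Access-Control-Allow-Origin');
  if (allowOrigin === null) {
    return { allowed: false, reason: 'No CORS headers' };
  }
  // Repeated headers are combined as "a, b", which never matches one origin.
  if (allowOrigin.includes(',')) {
    return { allowed: false, reason: `Duplicate headers: ${allowOrigin}` };
  }
  if (allowOrigin === '*') {
    // The wildcard is rejected for credentialed requests (cookies, auth).
    return withCredentials
      ? { allowed: false, reason: 'Wildcard not allowed with credentials' }
      : { allowed: true, reason: 'Wildcard origin' };
  }
  if (allowOrigin !== pageOrigin) {
    return { allowed: false, reason: `Origin mismatch: ${allowOrigin}` };
  }
  if (withCredentials && headers.get('Access-Control-Allow-Credentials') !== 'true') {
    return { allowed: false, reason: 'Credentials not allowed' };
  }
  return { allowed: true, reason: 'Origin matches' };
}

// Illustrative response that carries Access-Control-Allow-Origin twice.
const dup = new Headers([
  ['Access-Control-Allow-Origin', '*'],
  ['Access-Control-Allow-Origin', '*'],
]);
console.log(corsCheck(dup, 'http://localhost:3000'));
```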

What we discovered 🔍

Docker Desktop CORS is even more granular:

- Not just engine vs generic scoping

- Endpoint-specific within engines (models endpoint works, completions endpoint doesn't)
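Because the console reports only a generic failure, looking at the raw preflight response can show what each endpoint actually returns. A minimal sketch, assuming Node.js 18 or later: its built-in fetch does not enforce CORS and, unlike a browser, sends an Origin header you set yourself. inspectPreflight is a hypothetical helper; its OPTIONS request imitates the preflight a browser would send for the JSON POST in Test 2c, and fetchImpl is only there for offline testing.

```javascript
// Hypothetical helper (Node.js 18+): sends a preflight-style OPTIONS request
// and returns only the access-control-* response headers. Node's fetch does
// not apply CORS, so these headers are visible even when a browser blocks.
async function inspectPreflight(url, origin, method = 'POST', fetchImpl = fetch) {
  const res = await fetchImpl(url, {
    method: 'OPTIONS',
    headers: {
      Origin: origin,
      'Access-Control-Request-Method': method,
      'Access-Control-Request-Headers': 'content-type',
    },
  });
  const corsHeaders = {};
  // Headers iteration yields lowercased names, with duplicates combined.
  for (const [name, value] of res.headers) {
    if (name.startsWith('access-control-')) corsHeaders[name] = value;
  }
  return { status: res.status, corsHeaders };
}

// Example (requires Model Runner on localhost:12434):
// inspectPreflight('http://localhost:12434/engines/llama.cpp/v1/completions',
//   'http://localhost:3000').then(console.log);
```

Running it against /engines/llama.cpp/v1/models and /engines/llama.cpp/v1/completions and comparing the access-control-* headers is one way to see where the two endpoints differ.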

Conclusion

Docker Desktop 4.43.x's CORS configuration changes how developers build GenAI applications. Using the genai-model-runner-metrics demo, we've seen how the CORS setting controls which Model Runner endpoints a browser can call directly: "None" blocked every endpoint we tested, while "All" unblocked only the engine models endpoint. The new CORS controls give developers more of the security and flexibility needed for modern GenAI applications. Start with the demo repository, experiment with different CORS configurations, and build secure AI-powered applications with confidence.