How to Set Up Gemini CLI + Docker MCP Toolkit for AI-assisted Development

Learn how to set up Gemini CLI with Docker MCP Toolkit for powerful AI-assisted development. A complete guide with step-by-step instructions, benefits, and real-world examples.

After extensive testing, I've discovered the optimal setup that eliminates complexity while maximizing power: Gemini CLI paired with Docker MCP Toolkit.

This combination delivers enterprise-grade AI assistance without the overhead of IDEs or complex configurations. Here's why this matters and how to set it up perfectly.

What is Gemini CLI and Why Should You Care?

Gemini CLI is Google's open-source AI agent that brings Gemini 2.5 Pro directly to your terminal. Unlike web-based AI tools, Gemini CLI provides:

- Direct terminal integration with your development workflow

- A context window of 1 million tokens for analyzing large codebases

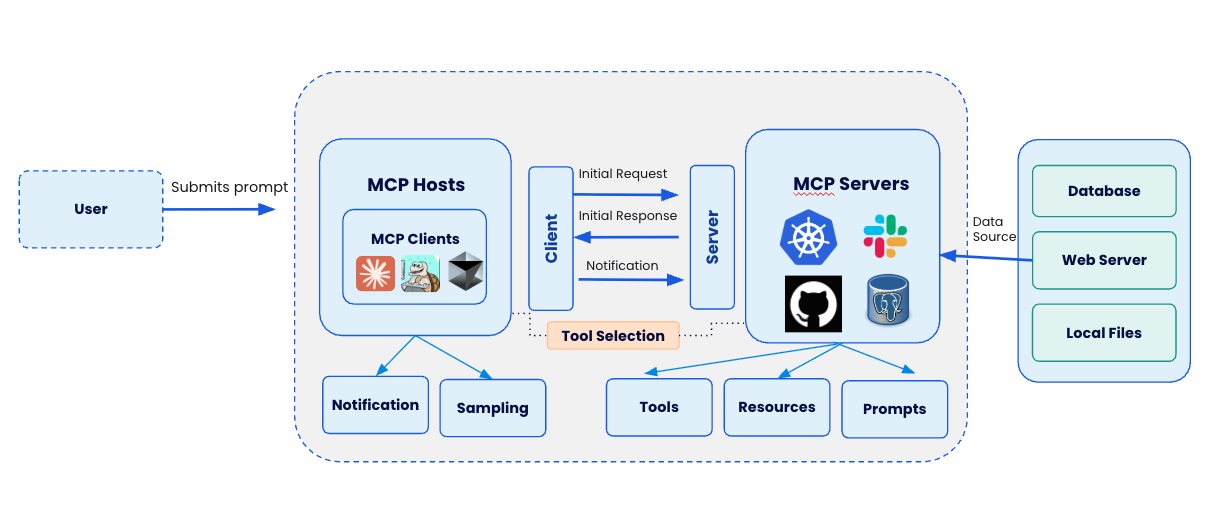

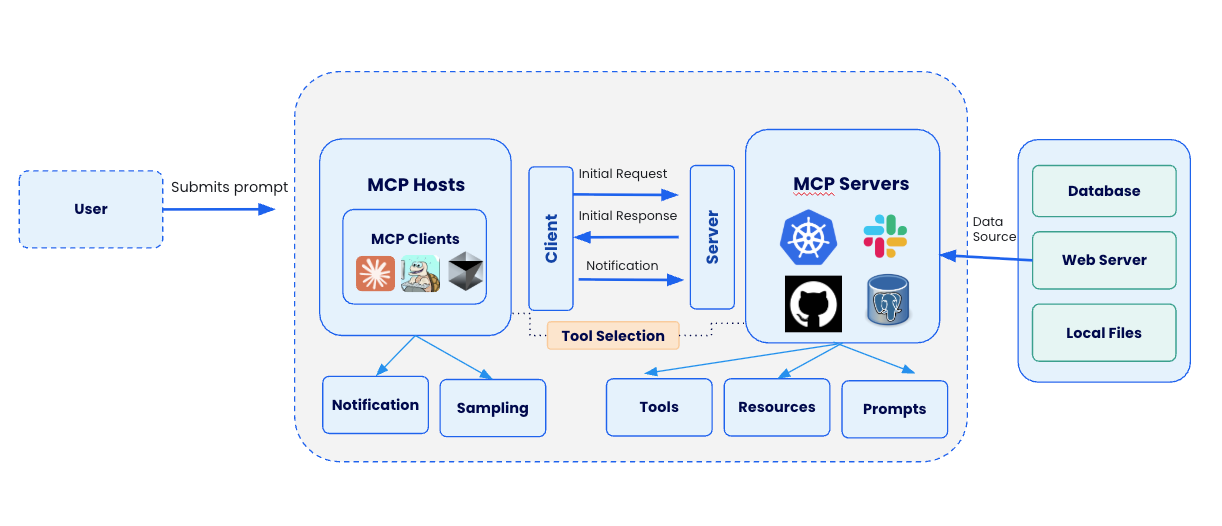

- Built-in tool support through Model Context Protocol (MCP)

- Free-tier access with generous usage limits

- Real-time code execution and file manipulation
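Whether a particular codebase fits in that context window depends on Gemini's tokenizer, but a rough estimate is easy to get. The sketch below walks a directory and divides the total character count by 4. That ratio is a common rule of thumb, not Gemini's real tokenizer, and the list of file extensions is purely illustrative, so treat the result as a ballpark figure.

```python
from __future__ import annotations

from pathlib import Path

CHARS_PER_TOKEN = 4          # rough rule of thumb, not Gemini's actual tokenizer
CONTEXT_WINDOW = 1_000_000   # the 1-million-token window described above

# Illustrative source-file extensions; adjust for your project.
SOURCE_SUFFIXES = frozenset({".py", ".js", ".ts", ".go", ".java", ".md", ".json", ".yaml", ".yml"})


def estimate_tokens(root: str | Path, suffixes: frozenset[str] = SOURCE_SUFFIXES) -> int:
    """Roughly estimate the tokens in all matching files under root."""
    total_chars = 0
    # Note: rglob also descends into folders such as node_modules or .git.
    for path in Path(root).rglob("*"):
        if path.is_file() and path.suffix in suffixes:
            # errors="ignore" drops undecodable bytes instead of raising.
            total_chars += len(path.read_text(encoding="utf-8", errors="ignore"))
    return total_chars // CHARS_PER_TOKEN


def fits_in_context(root: str | Path) -> bool:
    """True if the estimated token count is within the context window."""
    return estimate_tokens(root) <= CONTEXT_WINDOW
```

For example, `estimate_tokens(".")` run from your project root gives a quick figure. Keep in mind that the window must also hold your prompts and the model's replies, so leave some headroom.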

Docker MCP Toolkit

"The MCP Ecosystem is exploding. In just weeks, Docker MCP Catalog has surpassed 1 million pulls, validating that developers are hungry for a secure way to run MCP Servers."

~ The Docker Team

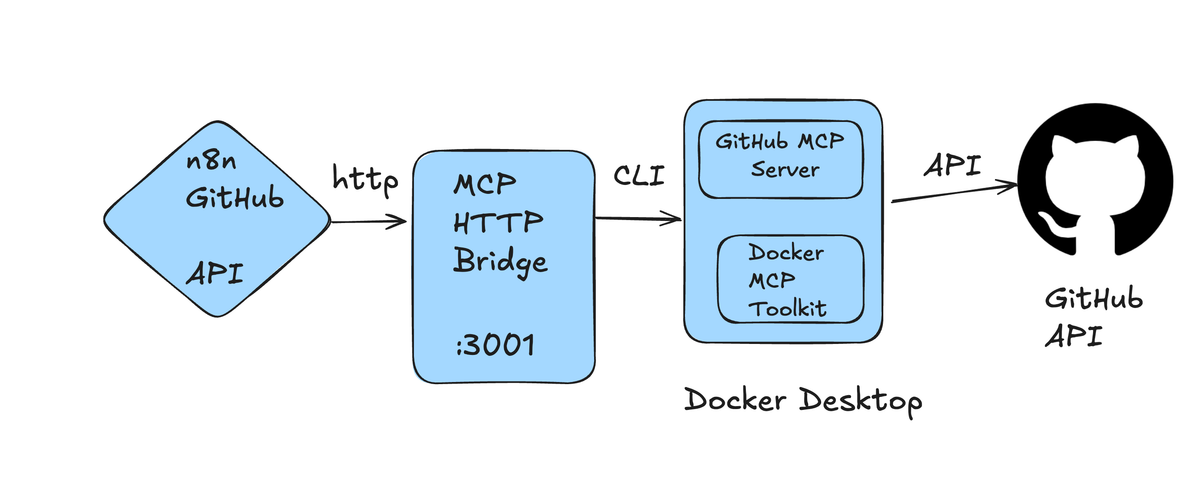

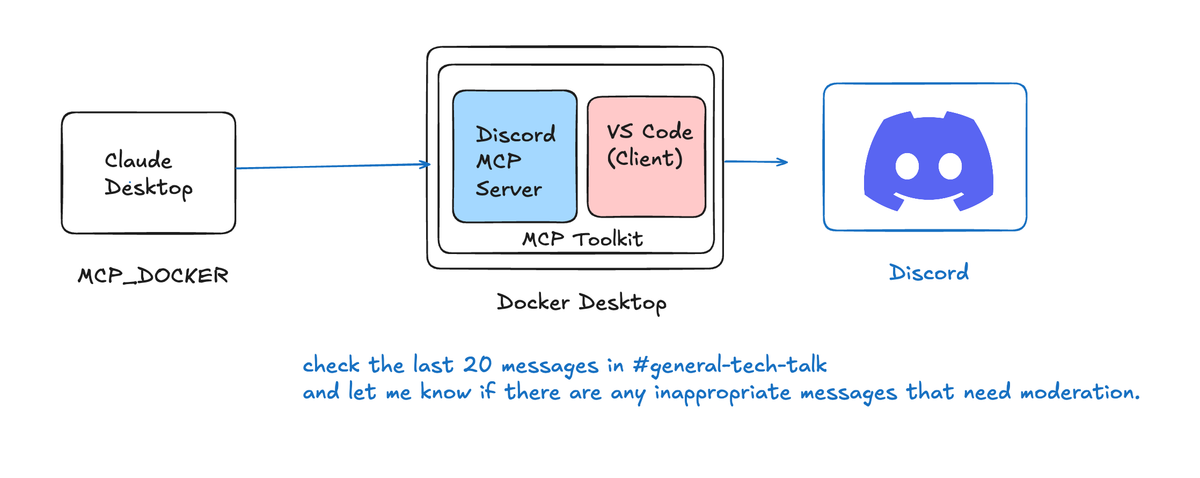

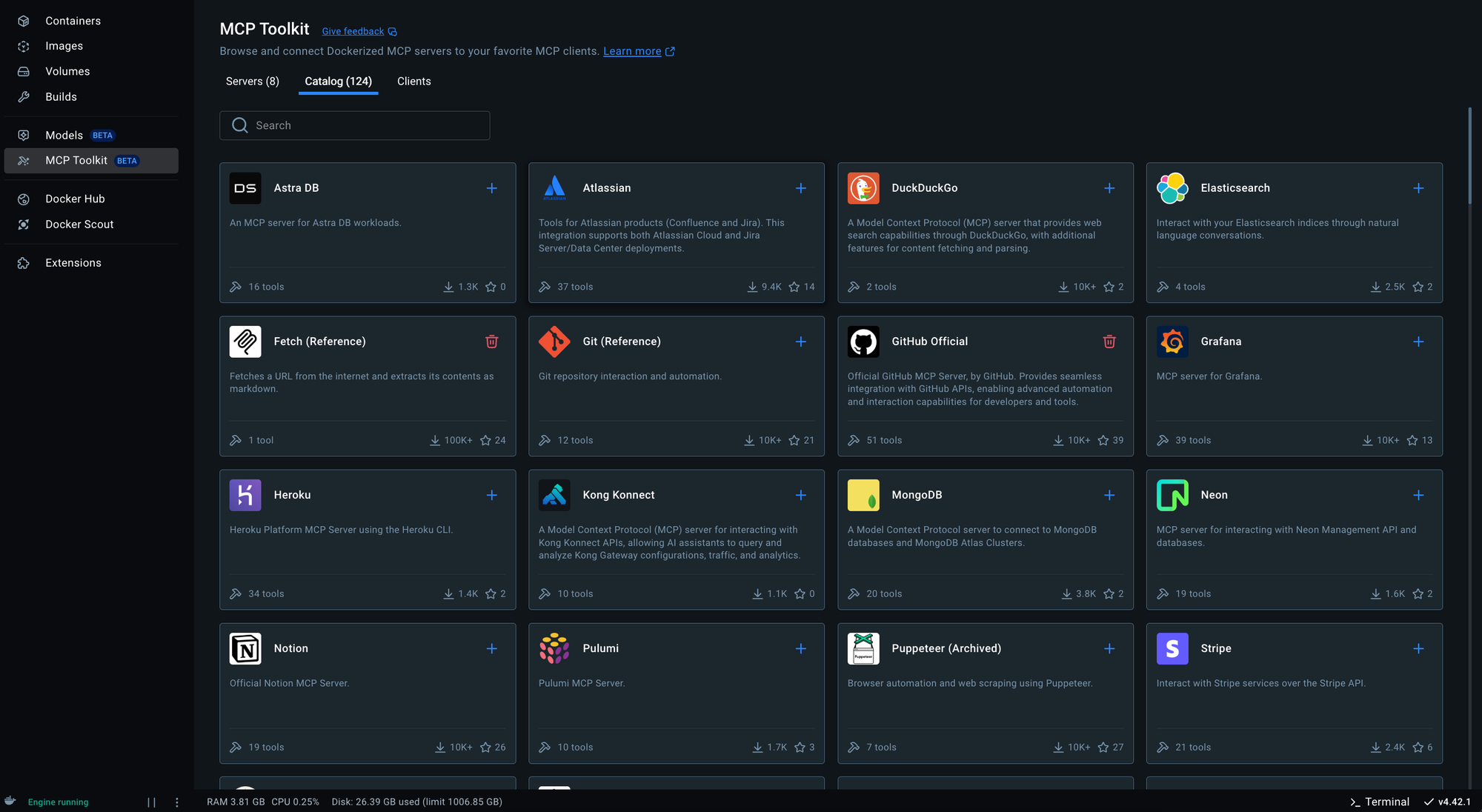

The Docker MCP Toolkit revolutionizes how AI agents interact with development tools. Instead of manually configuring individual MCP servers, you get:

- 100+ pre-configured MCP servers in the catalog

- One-click installation of development tools

- Secure, containerized execution environment

- Gateway architecture that simplifies client connections

- Built-in OAuth and credential management
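To make the gateway idea concrete: an MCP client usually starts a stdio server as a child process and exchanges newline-delimited JSON-RPC 2.0 messages with it over stdin/stdout. As I understand it, the gateway appears to the client as one such server that fronts all the servers you enabled. The sketch below only builds the opening messages of an MCP session (initialize, the initialized notification, then tools/list). The protocol version string and client name are assumptions for illustration, and Gemini CLI's real handshake may differ in detail.

```python
from __future__ import annotations

import json
from typing import Any

# MCP protocol revision assumed for illustration; client and server negotiate this.
PROTOCOL_VERSION = "2024-11-05"


def jsonrpc_request(msg_id: int, method: str, params: dict[str, Any] | None = None) -> dict[str, Any]:
    """Build a JSON-RPC 2.0 request; it carries an id, so the server must reply."""
    msg: dict[str, Any] = {"jsonrpc": "2.0", "id": msg_id, "method": method}
    if params is not None:
        msg["params"] = params
    return msg


def jsonrpc_notification(method: str) -> dict[str, Any]:
    """Build a JSON-RPC 2.0 notification; it has no id, so no reply is expected."""
    return {"jsonrpc": "2.0", "method": method}


def handshake_messages(client_name: str = "example-client") -> list[dict[str, Any]]:
    """Opening messages of an MCP session, in order.

    In a live session the client sends the notification only after it has
    received the server's response to initialize.
    """
    return [
        jsonrpc_request(1, "initialize", {
            "protocolVersion": PROTOCOL_VERSION,
            "capabilities": {},
            "clientInfo": {"name": client_name, "version": "0.1.0"},
        }),
        jsonrpc_notification("notifications/initialized"),
        jsonrpc_request(2, "tools/list"),
    ]


def encode_stdio(messages: list[dict[str, Any]]) -> str:
    """Serialize messages for the stdio transport: one JSON object per line."""
    return "".join(json.dumps(m) + "\n" for m in messages)


if __name__ == "__main__":
    print(encode_stdio(handshake_messages()), end="")
```

You never write these messages by hand when using Gemini CLI; you only tell it which command to start, and the client handles the protocol.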

If you're new to the Model Context Protocol and Docker MCP Toolkit, follow the link below to learn more about how they work.

Why This Terminal-Based Approach Works So Well

The Gemini CLI + Docker MCP Toolkit combination offers unique advantages that make it ideal for modern development workflows:

Performance Benefits

- Lightning-fast startup with minimal resource usage

- Direct system access without middleware limitations

- Efficient memory footprint for long development sessions

Flexibility Advantages

- Works in any terminal (Terminal.app, iTerm2, Windows Terminal, Linux shells)

- Fewer dependency conflicts, since MCP servers run in isolated containers

- Portable across development environments

- Not tied to an IDE's release cycle or plugin updates

Workflow Efficiency

- Single interface for all AI interactions

- Seamless context switching between projects

- Direct command execution in your working directory

- Natural integration with existing terminal workflows

Step-by-Step Setup Guide

Step 1. Prerequisites

- Install Docker Desktop

- Enable Docker MCP Toolkit

- Enable at least one MCP server (for example Docker, GitHub, Firecrawl, Kubernetes, or Slack)

- Install Node.js, which provides the npm command used in Step 2

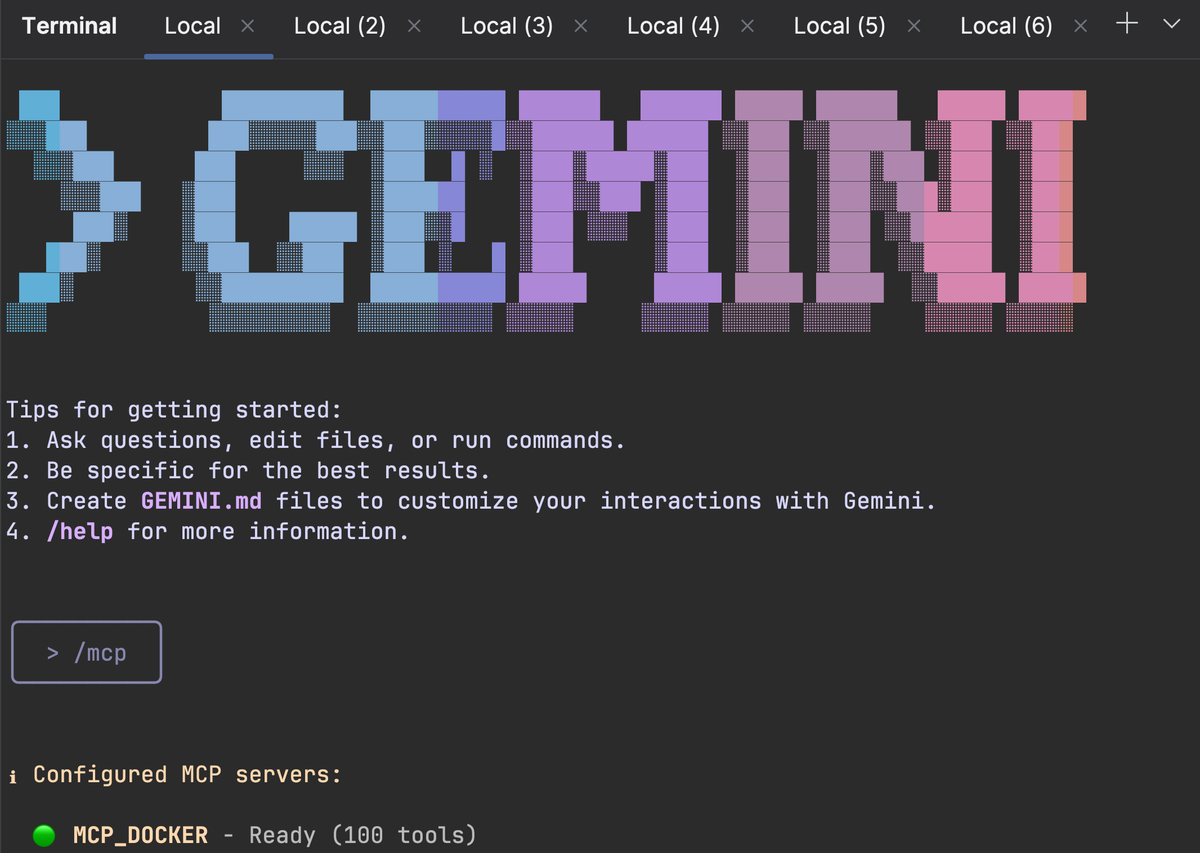
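Before moving on, it can help to confirm that the command-line tools this guide relies on are on your PATH. This small Python sketch is my own illustration, not part of either product. It only checks that the executables exist; it does not verify that the MCP Toolkit or any MCP server is enabled in Docker Desktop, and "gemini" will show as missing until you finish Step 2.

```python
from __future__ import annotations

import shutil

# Executables this guide relies on. npm is needed to install Gemini CLI,
# and gemini only exists after that install.
REQUIRED_TOOLS = ("docker", "npm", "gemini")


def check_tools(tools: tuple[str, ...] = REQUIRED_TOOLS) -> dict[str, bool]:
    """Map each tool name to whether it is found on PATH."""
    return {tool: shutil.which(tool) is not None for tool in tools}


if __name__ == "__main__":
    for tool, found in check_tools().items():
        print(f"{tool:<8} {'found' if found else 'MISSING'}")
```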

Step 2. Install Gemini CLI

Install via npm:

npm install -g @google/gemini-cli

Step 3. Launch and authenticate

gemini

Step 4. Log in with Google

Follow the setup wizard:

- Select a preferred theme from the options provided

- Choose a login method. I recommend "Login with Google", which allows up to 60 requests/minute and 1,000 requests/day for free.

- After you select "Login with Google", a browser window opens. Simply log in with your Google account.

If you need higher rate limits or enterprise access, I suggest using an API key from Google AI Studio instead. You can set it as an environment variable:

export GEMINI_API_KEY="YOUR_API_KEY"
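The two free-tier limits quoted above interact: the per-minute limit caps bursts, while the daily limit caps total usage. A quick calculation shows how long you could run at the maximum rate before the daily quota runs out. It uses the 60/minute and 1,000/day figures from this guide, which Google may change, and the 8-hour working day is just an assumption for the second function.

```python
def minutes_until_daily_cap(per_minute: int = 60, per_day: int = 1_000) -> float:
    """Minutes of continuous use at the per-minute limit before the daily cap is hit."""
    if per_minute <= 0:
        raise ValueError("per_minute must be positive")
    return per_day / per_minute


def requests_per_hour_budget(per_day: int = 1_000, active_hours: float = 8.0) -> float:
    """Average requests per hour if the daily quota is spread evenly over active_hours."""
    if active_hours <= 0:
        raise ValueError("active_hours must be positive")
    return per_day / active_hours


if __name__ == "__main__":
    print(f"{minutes_until_daily_cap():.1f} minutes at full speed")           # 16.7
    print(f"{requests_per_hour_budget():.0f} requests/hour over 8 hours")     # 125
```

In practice, a single prompt can trigger several model requests (for example, when the agent calls tools and sends the results back), so the number of prompts you can send per day is likely lower than 1,000.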

Step 5. Start chatting with Gemini

Just type gemini to start a session, then enter your prompt.

Gemini CLI Configuration

Gemini CLI reads its user-level configuration, including MCP servers, from the ~/.gemini/settings.json file.

After the first launch, this file contains only a basic configuration (your theme and authentication type). Add the Docker MCP Gateway under an "mcpServers" key so that the file looks like this:

{
  "theme": "Default",
  "selectedAuthType": "oauth-personal",
  "mcpServers": {
    "MCP_DOCKER": {
      "command": "docker",
      "args": ["mcp", "gateway", "run"],
      "env": {}
    }
  }
}
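If your settings.json already holds other keys or MCP servers, editing it by hand risks clobbering them. The sketch below merges the MCP_DOCKER entry shown above into an existing file and leaves everything else untouched. Only the file path and the MCP_DOCKER block come from this guide; the function name and the .bak backup are my own choices.

```python
from __future__ import annotations

import copy
import json
import shutil
from pathlib import Path
from typing import Any

# The gateway entry from this guide: Gemini CLI will start `docker mcp gateway run`.
MCP_DOCKER_ENTRY: dict[str, Any] = {
    "command": "docker",
    "args": ["mcp", "gateway", "run"],
    "env": {},
}


def add_docker_gateway(settings_path: str | Path | None = None) -> dict[str, Any]:
    """Add or replace mcpServers.MCP_DOCKER in settings.json and return the merged settings."""
    path = Path(settings_path) if settings_path else Path.home() / ".gemini" / "settings.json"
    settings: dict[str, Any] = {}
    if path.exists():
        settings = json.loads(path.read_text(encoding="utf-8"))
        # Keep a backup (settings.json.bak) before overwriting the original.
        shutil.copy2(path, path.with_name(path.name + ".bak"))
    else:
        path.parent.mkdir(parents=True, exist_ok=True)

    # setdefault keeps any MCP servers that are already configured.
    servers = settings.setdefault("mcpServers", {})
    servers["MCP_DOCKER"] = copy.deepcopy(MCP_DOCKER_ENTRY)
    path.write_text(json.dumps(settings, indent=2) + "\n", encoding="utf-8")
    return settings
```

Calling `add_docker_gateway()` with no arguments updates ~/.gemini/settings.json. If Gemini CLI is already running, restart it so that it picks up the new server.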

That's it. Open a terminal and type:

gemini

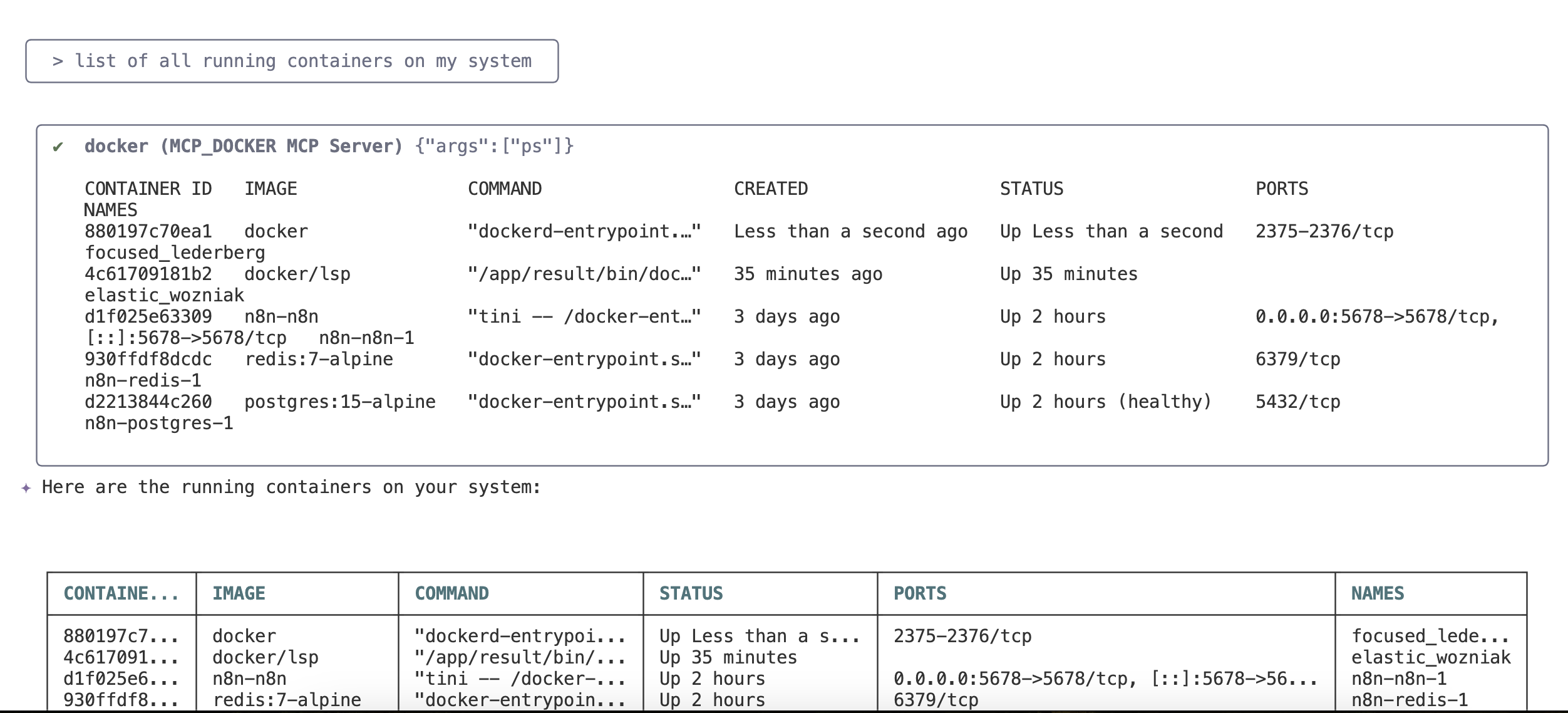

Start exploring by typing /mcp, which lists the configured MCP servers and the tools they expose.

Then try this prompt: "List all running containers on my system"
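To double-check the agent's answer, you can compare it with what the Docker CLI reports directly. The sketch below runs `docker ps --format '{{json .}}'`, which prints one JSON object per running container, and extracts each container's name, image, and status. It assumes the docker executable is on your PATH, and the helper names are hypothetical.

```python
from __future__ import annotations

import json
import subprocess
from typing import Any


def parse_docker_ps(output: str) -> list[dict[str, str]]:
    """Parse `docker ps --format '{{json .}}'` output (one JSON object per line)."""
    containers: list[dict[str, str]] = []
    for line in output.splitlines():
        line = line.strip()
        if not line:
            continue  # skip blank lines
        record: dict[str, Any] = json.loads(line)
        containers.append({
            "name": record.get("Names", ""),
            "image": record.get("Image", ""),
            "status": record.get("Status", ""),
        })
    return containers


def list_running_containers() -> list[dict[str, str]]:
    """Ask the Docker CLI for running containers (docker ps omits stopped ones by default)."""
    result = subprocess.run(
        ["docker", "ps", "--format", "{{json .}}"],
        capture_output=True, text=True, check=True,
    )
    return parse_docker_ps(result.stdout)
```

Calling `list_running_containers()` should return the same containers that Gemini reports through the MCP gateway. A mismatch is a good hint that the wrong server or tool was used.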

The combination of Gemini CLI and Docker MCP Toolkit represents a paradigm shift in AI-assisted development. By leveraging terminal-native tools and containerized services, you get:

- Unmatched flexibility in tool selection

- Superior performance with minimal overhead

- Enterprise-grade security through Docker's container isolation

- Future-proof architecture that scales with your needs

This setup isn't just about convenience—it's about building a development environment that adapts to your workflow rather than forcing you to adapt to it.

Further Reading