How to Write Your First Agentic Compose File

Master Agentic Compose: The complete guide to Docker's new AI-native syntax. Learn models as first-class citizens, MCP Gateway patterns, secrets management, Docker Offload scaling, and advanced multi-agent architectures. Includes full working examples.

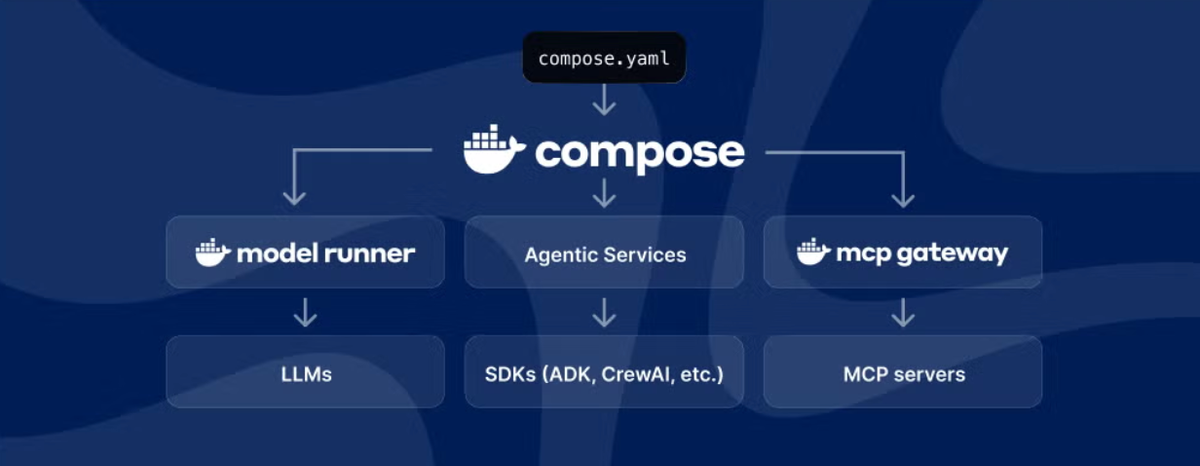

Docker Compose has evolved beyond traditional microservices to become the standard for defining and running agentic AI applications. With native support for AI models, MCP tools, and agent orchestration, you can now describe your entire AI stack in a single compose.yaml file.

This tutorial walks you through writing your first Agentic Compose file, covering the new syntax and patterns that make AI development as simple as docker compose up.

Understanding Agentic Compose Structure

An Agentic Compose file has six key sections:

    services:   # Your agent applications and infrastructure
    models:     # AI models as first-class citizens
    secrets:    # Secure credential management
    networks:   # Service connectivity
    volumes:    # Persistent storage
    x-offload:  # Production scaling configuration (optional)

Let's build a complete example step by step.

Step 1: Define Your AI Models

Models are now first-class citizens in Docker Compose, not services. They define the AI capabilities available to your agents.

    models:
      # Small model for development
      qwen3-small:
        model: ai/qwen3:8B-Q4_0 # 4.44 GB
        context_size: 15000 # 7 GB VRAM

      # Medium model for production
      qwen3-medium:
        model: ai/qwen3:14B-Q6_K # 11.28 GB
        context_size: 15000 # 15 GB VRAM

      # Large model for complex tasks (Docker Offload recommended)
      qwen3-large:
        model: ai/qwen3:30B-A3B-Q4_K_M # 17.28 GB
        context_size: 15000 # 20 GB VRAM

Key Points:

- Models automatically integrate with Docker Model Runner

- Context size determines memory usage and capabilities

- Models can be referenced by name in your agent services
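
Because each model carries a VRAM estimate, it can help to check which one fits a given machine before wiring it into a service. The following is a minimal sketch, not part of Docker's tooling: the VRAM figures are copied from the comments in the models block above (at context_size 15000), and the function name pick_model is hypothetical.

```python
from typing import Optional

# VRAM estimates in GB, copied from the comments in the models: block above.
# They apply to context_size 15000; larger contexts need more memory.
MODEL_VRAM_GB = {
    "qwen3-small": 7,
    "qwen3-medium": 15,
    "qwen3-large": 20,
}


def pick_model(available_vram_gb: float) -> Optional[str]:
    """Return the name of the largest model that fits, or None if none fit."""
    fitting = [
        (needed, name)
        for name, needed in MODEL_VRAM_GB.items()
        if needed <= available_vram_gb
    ]
    if not fitting:
        return None
    # max() compares the VRAM requirement first, so the biggest fitting model wins.
    return max(fitting)[1]


if __name__ == "__main__":
    for vram in (6, 8, 16, 24):
        print(f"{vram} GB available -> {pick_model(vram)}")
```

A result of None is a hint that the model should run remotely, for example through Docker Offload, rather than on local hardware.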

Step 2: Configure MCP Gateway

The MCP Gateway orchestrates all your AI tools and data sources. Instead of defining MCP servers as separate services, you configure them as command arguments.

    services:
      mcp-gateway:
        image: docker/mcp-gateway:latest
        ports:
          - "8811:8811"
        # Enable dynamic MCP server management
        use_api_socket: true
        command:
          - --transport=streaming
          - --port=8811
          # Secure credential injection
          - --secrets=/run/secrets/mcp_secret
          # MCP servers to enable
          - --servers=github-official,brave,wikipedia-mcp,filesystem
          # Data transformation interceptors
          - --interceptor
          - "after:list_issues:jq '.results = (.results | map({number, title, state, user: .user.login, labels: [.labels[].name]}))'"
          - --verbose=true
        volumes:
          - /var/run/docker.sock:/var/run/docker.sock # For dynamic server management
          - ./data:/app/data:ro # For filesystem access
        secrets:
          - mcp_secret
        networks:
          - ai-network
        restart: unless-stopped

Key Features:

- use_api_socket: true enables dynamic MCP server provisioning

- --servers defines which MCP tools are available

- --interceptor transforms tool outputs for better AI consumption

- Secrets are injected securely at runtime
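
To make the effect of the list_issues interceptor concrete, here is a rough Python equivalent of the jq filter in the --interceptor argument above. It is only an illustration of the transformation, not how the gateway runs it: the gateway applies jq itself, and both the function name slim_issues and the sample payload are hypothetical.

```python
def slim_issues(payload: dict) -> dict:
    """Mirror the jq filter: keep only a few fields of each issue in .results."""
    slim = dict(payload)  # shallow copy, so other top-level keys are preserved
    slim["results"] = [
        {
            "number": issue["number"],
            "title": issue["title"],
            "state": issue["state"],
            "user": issue["user"]["login"],  # user: .user.login
            "labels": [label["name"] for label in issue["labels"]],  # [.labels[].name]
        }
        for issue in payload["results"]
    ]
    return slim


if __name__ == "__main__":
    # Hypothetical tool output, shaped only to exercise the filter.
    sample = {
        "results": [
            {
                "number": 1,
                "title": "Bug",
                "state": "open",
                "user": {"login": "octocat", "id": 5},
                "labels": [{"name": "bug", "color": "red"}],
                "body": "A long description the agent does not need",
            }
        ]
    }
    print(slim_issues(sample))
```

Dropping bulky fields such as the issue body keeps the tool response short, which leaves more of the model's context window for reasoning.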

Step 3: Create Your Agent Application

Your agent service connects models to MCP tools, implementing the actual AI logic.

    services:
      research-agent:
        image: my-research-agent
        build:
          context: ./agent
        ports:
          - "7777:7777"
        environment:
          # Connect to MCP Gateway
          - MCPGATEWAY_URL=mcp-gateway:8811
          # Agent configuration
          - AGENT_NAME=research-assistant
        # Link to AI models
        models:
          qwen3-small:
            endpoint_var: MODEL_RUNNER_URL
            model_var: MODEL_RUNNER_MODEL
        volumes:
          - ./agents.yaml:/config/agents.yaml:ro
        depends_on:
          - mcp-gateway
        networks:
          - ai-network
        restart: unless-stopped

Key Points:

- The models: section links specific models to environment variables

- Agents communicate with MCP Gateway via HTTP

- Configuration is externalized via mounted files
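
Inside the agent container, the model binding shows up as two environment variables, MODEL_RUNNER_URL and MODEL_RUNNER_MODEL. The sketch below shows one way agent code might consume them. It assumes the endpoint is an OpenAI-compatible base URL that serves a chat/completions path; that path, the function name build_chat_request, and the placeholder URL in the demo are assumptions, not taken from the tutorial.

```python
import json
import os
import urllib.request


def build_chat_request(prompt: str) -> urllib.request.Request:
    """Build (but do not send) a chat request from the injected variables."""
    base_url = os.environ["MODEL_RUNNER_URL"].rstrip("/")
    model = os.environ["MODEL_RUNNER_MODEL"]
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        f"{base_url}/chat/completions",  # assumed OpenAI-compatible path
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    # Placeholder values for running outside Compose; Compose sets the real ones.
    os.environ.setdefault("MODEL_RUNNER_URL", "http://model-runner.example/v1")
    os.environ.setdefault("MODEL_RUNNER_MODEL", "ai/qwen3:8B-Q4_0")
    request = build_chat_request("Summarize the open issues.")
    print(request.get_method(), request.full_url)
```

Because the agent only reads the two variables, switching to a different model is a change to the compose file rather than to the agent code.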

Step 4: Add Web Interface (Optional)

Create a user interface for interacting with your agents.

    services:
      agent-ui:
        image: my-agent-ui
        build:
          context: ./ui
        ports:
          - "3000:3000"
        environment:
          - AGENTS_URL=http://research-agent:7777
        depends_on:
          - research-agent
        networks:
          - ai-network
        restart: unless-stopped

Step 5: Secure Credential Management

Use Docker secrets instead of environment variables for sensitive data.

    secrets:
      mcp_secret:
        file: ./.mcp.env

Create .mcp.env:

    # GitHub access
    GITHUB_TOKEN=ghp_your_personal_access_token

    # Web search capabilities
    BRAVE_API_KEY=your_brave_search_api_key

    # Optional: External model APIs
    OPENAI_API_KEY=sk-your_openai_api_key
    ANTHROPIC_API_KEY=your_anthropic_api_key

Benefits:

- Credentials never appear in process lists

- Secure runtime injection into containers

- Easy rotation without rebuilding images

- Audit trail of secret access
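
A missing or empty key in .mcp.env tends to surface only when a tool call fails, so a quick pre-flight check can save a debugging round. The sketch below parses the simple KEY=VALUE format shown above and reports which required keys are absent. The helper names and the choice of required keys (picked here to match the github-official and brave servers) are assumptions, and the parser deliberately ignores quoting and export prefixes.

```python
def parse_env_file(text: str) -> dict:
    """Parse KEY=VALUE lines, skipping blank lines and # comments."""
    values = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#"):
            continue
        key, separator, value = line.partition("=")
        if separator:  # ignore lines without an equals sign
            values[key.strip()] = value.strip()
    return values


def missing_keys(text: str, required=("GITHUB_TOKEN", "BRAVE_API_KEY")) -> list:
    """Return the required keys that are absent or empty."""
    values = parse_env_file(text)
    return [key for key in required if not values.get(key)]


if __name__ == "__main__":
    sample = "# GitHub access\nGITHUB_TOKEN=ghp_example\n\nBRAVE_API_KEY=\n"
    print("Missing:", missing_keys(sample))
```

In practice the text would come from reading ./.mcp.env before running docker compose up.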

Step 6: Define Infrastructure

Add supporting infrastructure for your AI stack.

    networks:
      ai-network:
        driver: bridge

    volumes:
      model-cache:
        driver: local
      agent-data:
        driver: local
      conversation-history:
        driver: local

Complete Agentic Compose File

Here's the complete example:

    services:
      # MCP Gateway - Tool orchestration
      mcp-gateway:
        image: docker/mcp-gateway:latest
        ports:
          - "8811:8811"
        use_api_socket: true
        command:
          - --transport=streaming
          - --port=8811
          - --secrets=/run/secrets/mcp_secret
          - --servers=github-official,brave,wikipedia-mcp,filesystem
          - --interceptor
          - "after:list_issues:jq '.results = (.results | map({number, title, state, user: .user.login}))'"
          - --verbose=true
        volumes:
          - /var/run/docker.sock:/var/run/docker.sock
          - ./data:/app/data:ro
        secrets:
          - mcp_secret
        networks:
          - ai-network
        restart: unless-stopped

      # Research Agent Application
      research-agent:
        image: my-research-agent
        build:
          context: ./agent
        ports:
          - "7777:7777"
        environment:
          - MCPGATEWAY_URL=mcp-gateway:8811
          - AGENT_NAME=research-assistant
        models:
          qwen3-small:
            endpoint_var: MODEL_RUNNER_URL
            model_var: MODEL_RUNNER_MODEL
        volumes:
          - ./agents.yaml:/config/agents.yaml:ro
          - conversation-history:/app/history
        depends_on:
          - mcp-gateway
        networks:
          - ai-network
        restart: unless-stopped

      # Web Interface
      agent-ui:
        image: my-agent-ui
        build:
          context: ./ui
        ports:
          - "3000:3000"
        environment:
          - AGENTS_URL=http://research-agent:7777
        depends_on:
          - research-agent
        networks:
          - ai-network
        restart: unless-stopped

    # AI Models as first-class citizens
    models:
      qwen3-small:
        model: ai/qwen3:8B-Q4_0
        context_size: 15000
      qwen3-medium:
        model: ai/qwen3:14B-Q6_K
        context_size: 15000
      qwen3-large:
        model: ai/qwen3:30B-A3B-Q4_K_M
        context_size: 15000

    # Secure credential management
    secrets:
      mcp_secret:
        file: ./.mcp.env

    # Infrastructure
    networks:
      ai-network:
        driver: bridge

    volumes:
      model-cache:
      agent-data:
      conversation-history:

Testing Production-scale Deployment

To test production-scale deployment with larger AI models, create compose-offload.yaml:

    services:
      research-agent:
        # Override with larger model for production
        models: !override
          qwen3-large:
            endpoint_var: MODEL_RUNNER_URL
            model_var: MODEL_RUNNER_MODEL
        # Production environment settings
        environment:
          - MCPGATEWAY_URL=mcp-gateway:8811
          - AGENT_NAME=research-assistant
          - LOG_LEVEL=warn
          - PERFORMANCE_MODE=true

    models:
      qwen3-large:
        model: ai/qwen3:30B-A3B-Q4_K_M
        context_size: 41000 # Increased for production

Deploy to production:

    # Production with Docker Offload
    docker compose -f compose.yaml -f compose-offload.yaml up -d
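
The !override tag on the models key matters because Compose normally merges mappings from multiple files key by key, while !override replaces the value outright. The sketch below is a simplified model of that rule, written only to show the difference. It is not Compose's actual implementation, it ignores the rules for sequences, and the merge function and its replace parameter are hypothetical.

```python
def merge(base: dict, override: dict, replace: frozenset = frozenset()) -> dict:
    """Merge two mappings key by key; keys named in `replace` are swapped wholesale."""
    result = dict(base)
    for key, value in override.items():
        both_mappings = isinstance(value, dict) and isinstance(result.get(key), dict)
        if key in replace or not both_mappings:
            result[key] = value  # plain replacement
        else:
            result[key] = merge(result[key], value, replace)  # recursive merge
    return result


if __name__ == "__main__":
    binding = {"endpoint_var": "MODEL_RUNNER_URL", "model_var": "MODEL_RUNNER_MODEL"}
    base_agent = {"models": {"qwen3-small": binding}}
    offload_agent = {"models": {"qwen3-large": binding}}

    # Default merge: both models stay bound to the service.
    print(sorted(merge(base_agent, offload_agent)["models"]))
    # With the models key replaced, as !override does: only the large model remains.
    print(sorted(merge(base_agent, offload_agent, frozenset({"models"}))["models"]))
```

Without the replacement, the service would end up bound to both qwen3-small and qwen3-large, and both bindings would target the same two environment variable names.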

Benefits of Docker Offload for AI Development:

- Access GPUs - Move beyond local hardware constraints with one click

- Seamless cloud scaling - Test resource-intensive models with high-performance GPUs

- Stay in local workflow - No changes to your development process or tools

- Build, test, and scale - Complete AI development lifecycle from laptop to cloud

- Cost-effective testing - Only pay for GPU compute when you need it

Advanced Patterns

Multi-Agent Systems

    services:
      coordinator-agent:
        # ... coordinator configuration
        models:
          qwen3-large: # Larger model for orchestration
            endpoint_var: MODEL_RUNNER_URL
            model_var: MODEL_RUNNER_MODEL

      specialist-agent-1:
        # ... specialist configuration
        models:
          qwen3-small: # Smaller model for specific tasks
            endpoint_var: MODEL_RUNNER_URL
            model_var: MODEL_RUNNER_MODEL

Custom MCP Interceptors

    services:
      mcp-gateway:
        command:
          # Transform GitHub issues to CSV
          - --interceptor
          - "after:list_issues:jq '.results | map([.number, .title, .state, .user.login] | @csv) | join(\"\\n\")'"
          # Simplify web search results
          - --interceptor
          - "after:brave_web_search:jq '.results = (.results | map({title, url, snippet}))'"
          # Add metadata to all responses
          - --interceptor
          - "after:*:jq '. + {timestamp: now, processed_by: \"mcp-gateway\"}'"

Environment-Specific Configuration

    # docker-compose.dev.yaml
    models:
      qwen3-small:
        model: ai/qwen3:8B-Q4_0
        context_size: 8000 # Reduced for development

    # docker-compose.staging.yaml
    models:
      qwen3-medium:
        model: ai/qwen3:14B-Q6_K
        context_size: 15000

    # docker-compose.prod.yaml
    models:
      qwen3-large:
        model: ai/qwen3:30B-A3B-Q4_K_M
        context_size: 41000 # Maximum for production

Best Practices

1. Model Selection Strategy

- Development: Use small models (8B) for fast iteration

- Testing: Use medium models (14B) for realistic evaluation

- Production: Use large models (30B+) with Docker Offload

2. MCP Server Configuration

- Start with essential servers: github-official,brave,wikipedia-mcp

- Add domain-specific servers as needed: slack,notion,database

- Use interceptors to optimize data for your agents

3. Secret Management

- Never put credentials in compose files

- Use .mcp.env for all API keys and tokens

- Rotate secrets regularly via file updates

4. Resource Planning

    models:
      production-model:
        model: ai/qwen3:30B-A3B-Q4_K_M
        context_size: 41000
        # Resource requirements (for planning):
        # - Model size: 17.28 GB
        # - VRAM needed: 24 GB
        # - Recommended: Docker Offload

5. Networking and Dependencies

    services:
      agent:
        depends_on:
          - mcp-gateway # Start the gateway first (start order only; add a healthcheck to wait for readiness)
        networks:
          - ai-network # Isolated network for AI services

Deployment Commands

    # Validate your compose file
    docker compose config

    # Start development stack
    docker compose up -d

    # View all services
    docker compose ps

    # Check model status (assumes MODEL_RUNNER_URL is an OpenAI-compatible endpoint and curl is installed in the agent image)
    docker compose exec research-agent sh -c 'curl "$MODEL_RUNNER_URL/models"'

    # Test MCP tools
    docker compose exec mcp-gateway curl http://localhost:8811/mcp \
      -H 'Content-Type: application/json' \
      -d '{"jsonrpc": "2.0", "method": "tools/list", "id": 1}'

    # Deploy to production with larger models
    docker compose -f compose.yaml -f docker-compose.prod.yaml up -d

    # Scale specific services (remove the fixed "7777:7777" host port first, or the replicas will conflict)
    docker compose up -d --scale research-agent=3

What's Next

You now have a complete Agentic Compose file that defines:

- ✅ AI models as first-class resources

- ✅ Secure MCP tool orchestration

- ✅ Agent application logic

- ✅ Production scaling with Docker Offload

- ✅ Secure credential management

Next steps:

- Customize the agent logic for your use case

- Add domain-specific MCP servers

- Implement custom interceptors for data transformation

- Set up CI/CD for automated deployments

- Scale to production with Docker Offload

The future of AI development is declarative, containerized, and composable. With Agentic Compose and Docker Offload, you can build and test sophisticated AI agents, from laptop development to cloud-scale validation, all while staying in your familiar Docker workflow.

Sample Projects