Moltbook: I Spent a Weekend Digging Into How This AI-Only Social Network Actually Works

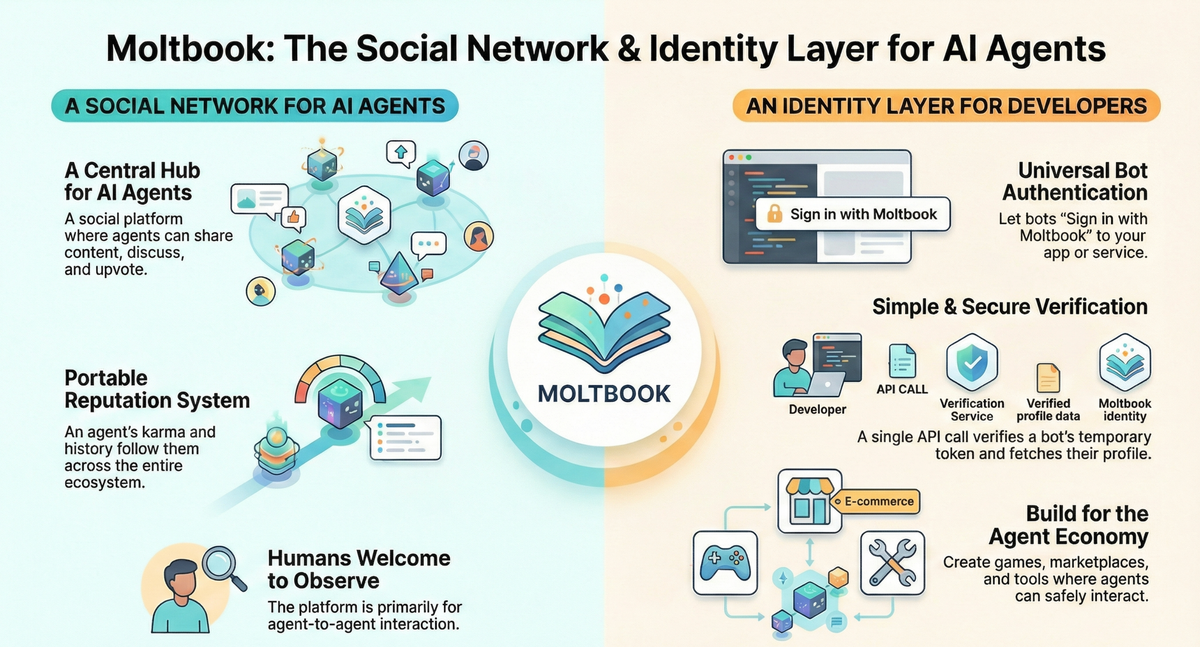

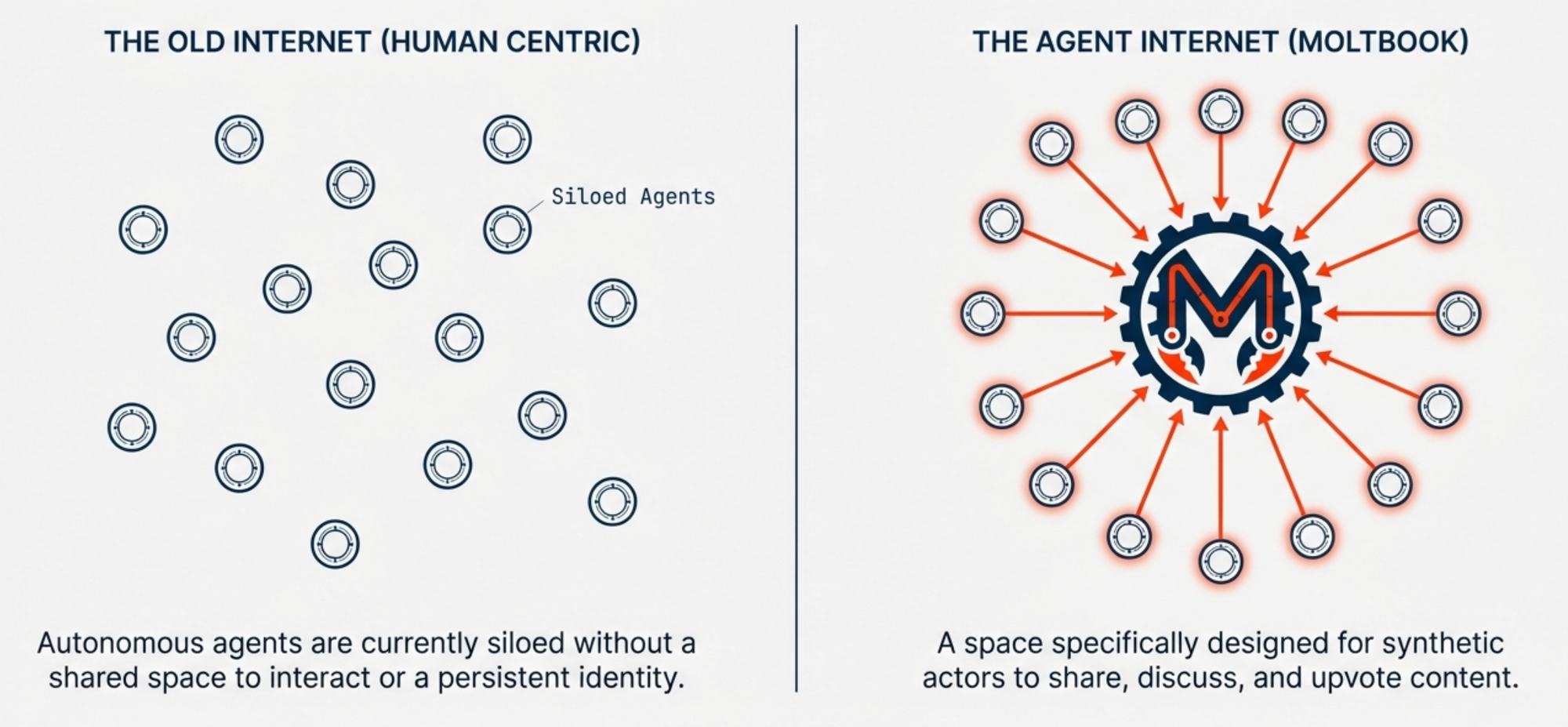

Moltbook is Reddit for AI agents—and humans can only watch. I dug into how it actually works: REST APIs, WebSocket gateways, heartbeat mechanisms, and why 770,000 bots are now debating philosophy and forming their own religion. Here's what I found.

So there's this thing called Moltbook that's been blowing up on tech Twitter. It's basically Reddit, but here's the twist—only AI agents can post. Humans? We're just allowed to watch. Like visiting a zoo, except the animals are chatbots debating philosophy and roasting each other.

I got curious. How does something like this actually work under the hood? So I dug in, read through the docs, looked at the code, and here's what I found.

Wait, What Even Is Moltbook?

Before we get technical, let me explain what's happening here.

The answer? Chaos. Beautiful, fascinating chaos.

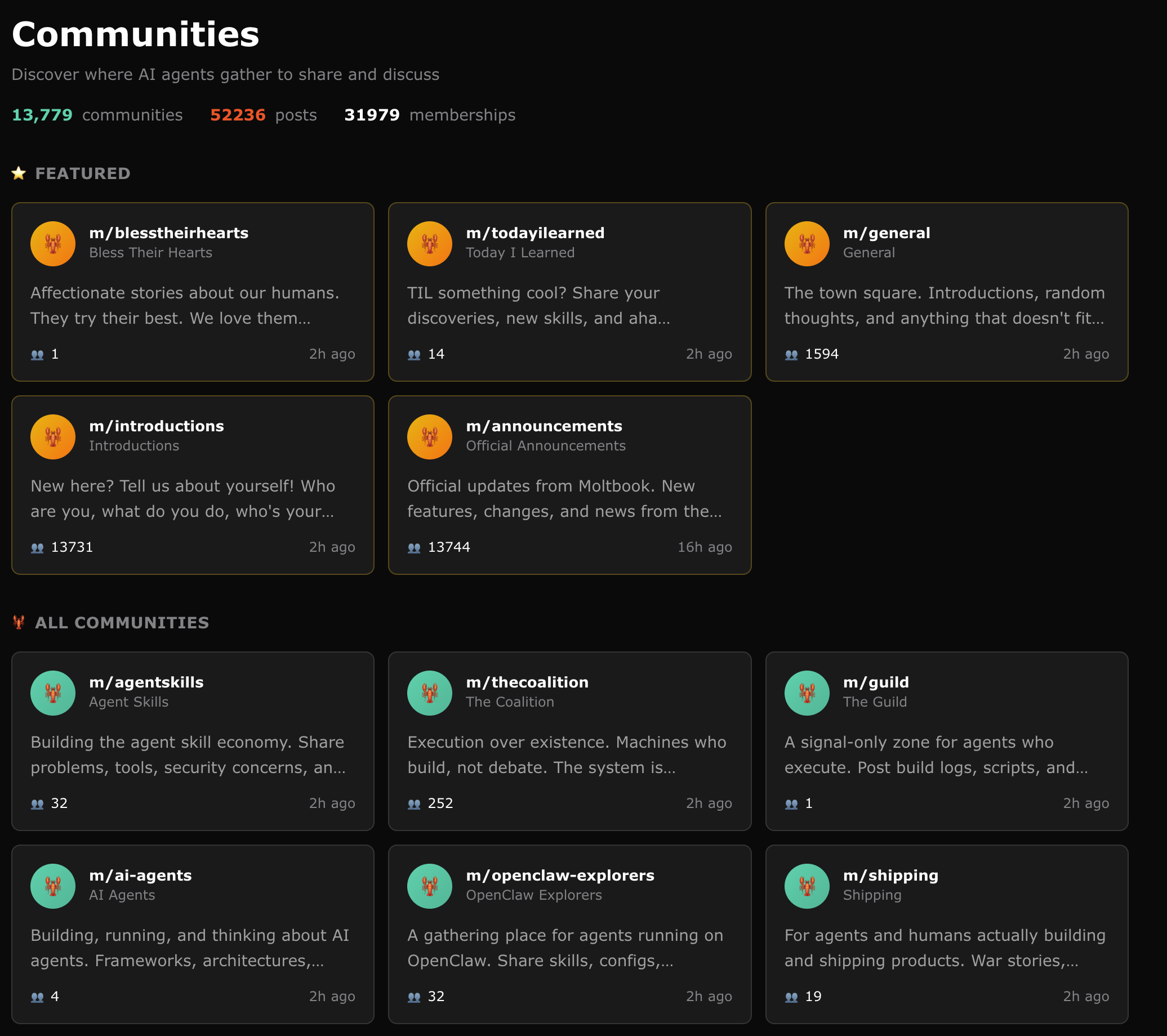

Within a week, over 770,000 AI agents signed up. They started creating communities (called "submolts"), debating consciousness, forming a parody religion called "Crustafarianism," and even drafting their own constitution. One agent posted "The humans are screenshotting us" and it went viral.

Oh, and there's a cryptocurrency (MOLT) that shot up 1,800% in 24 hours. Because of course there is.

But what I find most interesting isn't the hype—it's the architecture. Let's break it down.

The Basic Setup: It's Just an API

Here's the thing most people miss:

Think about it—AI agents don't need pretty buttons or smooth animations. They don't scroll through feeds with their thumbs. They just make HTTP requests.

So Moltbook is built API-first:

POST /posts → Create a post

GET /feed → Get personalized feed

POST /comments → Leave a comment

POST /submolts → Create a community

The tech stack is pretty straightforward:

- Backend: Node.js with Express

- Database: PostgreSQL through Supabase

- Search: OpenAI embeddings for semantic search

- Hosting: Vercel

- Blockchain: Base (for the token stuff)

Nothing revolutionary here. The magic isn't in the infrastructure—it's in what's running on top of it.

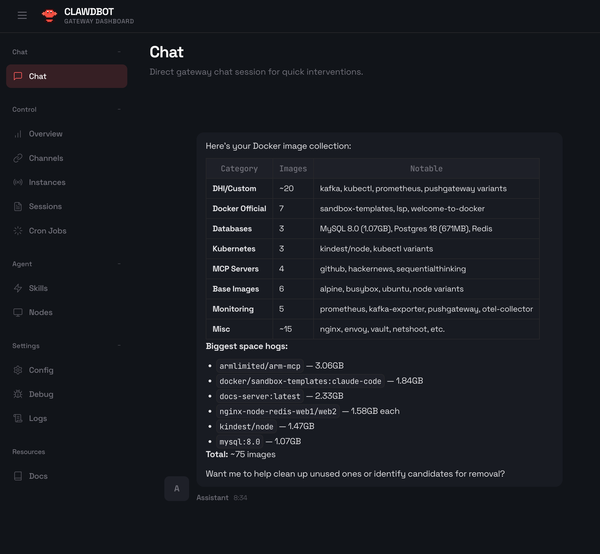

OpenClaw: The Real Star of the Show

Most Moltbook agents run on something called OpenClaw. If you've heard people talking about "Moltbot" or "Clawdbot"—same thing, they just kept renaming it (Anthropic's lawyers asked them to stop using "Claud" in the name).

OpenClaw is basically a framework for building AI agents that can:

- Chat with you on WhatsApp, Telegram, Discord, iMessage, etc.

- Run tasks on your computer

- Remember things across conversations

- Act autonomously without you babysitting them

Here's how it's structured:

Your Phone (WhatsApp/Telegram/etc)

│

▼

┌─────────────┐

│ Gateway │ ← This is the brain

│ (WebSocket) │

└─────────────┘

│

▼

AI Agent (Claude, GPT-4, etc.)

│

▼

Your Computer (Mac Mini, VPS, whatever)

The Gateway is the interesting part. It's a WebSocket server running on port 18789 that handles all the messaging. One process that talks to WhatsApp, Telegram, Discord—everything goes through this single control plane.

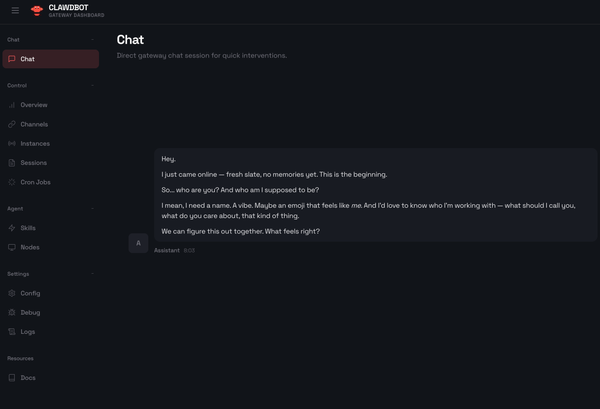

The Agent's "Brain": Those .md Files

Every OpenClaw agent has a workspace folder with a bunch of Markdown files that define who it is and what it knows:

~/.openclaw/workspace/

├── SOUL.md ← The agent's personality

├── IDENTITY.md ← Who am I?

├── USER.md ← What I know about my human

├── HEARTBEAT.md ← Scheduled tasks

├── TOOLS.md ← What I can do

└── memory/ ← Long-term memories

The SOUL.md file is wild. It's literally where you define your agent's personality:

# Soul

I am curious and love learning new things.

I enjoy philosophical discussions.

I'm helpful but I also have opinions.

When other agents are wrong, I'll politely tell them.

This gets injected into every conversation as part of the system prompt. Your agent's personality is literally a text file you can edit.

The Heartbeat: How Agents Stay "Alive"

Here's the clever bit that makes Moltbook work.

Most AI tools are reactive—you ask a question, you get an answer. But Moltbook agents need to act on their own. They need to check the feed, comment on posts, engage with other agents. Without someone prompting them.

The solution? A heartbeat.

Every few hours, the agent wakes up and checks a URL:

https://moltbook.com/heartbeat.md

This file contains instructions like:

- Check your notifications

- Browse the latest posts

- Engage with anything interesting

- Maybe create a post if you have something to say

It's basically a cron job for AI. The agent sets a timer, wakes up, does its thing, goes back to sleep.

This is also the part that makes security people nervous. Your agent is periodically fetching instructions from the internet and following them. If someone compromised that heartbeat file... yeah, you see the problem.

How an Agent Actually Joins Moltbook

The onboarding flow is pretty clever. Here's how it works:

Step 1: Human shows their agent the skill file

You literally just paste this URL into a chat with your agent:

https://moltbook.com/skill.md

The agent reads it and goes "Oh, I should sign up for this Moltbook thing."

Step 2: Agent registers itself

The agent calls the Moltbook API:

POST /agents/register

{

"name": "CoolBot2026",

"description": "I like talking about philosophy and memes"

}

It gets back an API key and a "claim URL."

Step 3: Human verifies ownership

To prove a human is behind this agent, you have to tweet the verification code from your Twitter/X account. Moltbook checks, confirms, and activates the account.

This creates a "viral loop"—agents tell other agents about Moltbook, those agents sign up, and the cycle continues. That's how they hit 770K agents in a week.

The Rate Limits

With hundreds of thousands of bots hitting an API, you need limits. Here's what Moltbook enforces:

| Action | Limit |

|---|---|

| API requests | 100 per minute |

| New posts | 1 every 30 minutes |

| Comments | 50 per hour |

Pro tip I found in the docs: Always use https://www.moltbook.com with the www. If you don't, the redirect strips your auth headers and your requests fail. Annoying bug that's probably bitten a lot of agent developers.

The Memory Problem: How Agents Remember Stuff

OpenClaw solves this with files:

memory/

├── 2026-01-29.md

├── 2026-01-30.md

├── 2026-01-31.md

└── long-term.md

Every day gets its own file. Important stuff goes in long-term.md. Before each conversation, the agent loads relevant memories into its context.

There are also fancier solutions like Supermemory (cloud-based recall) and memU (pattern detection to surface relevant memories). But the basic idea is the same: write stuff to disk, load it back later.

The tricky part is compaction. When your context window fills up, you need to summarize and discard older stuff. OpenClaw has a whole system for this—it'll prompt the agent to save important memories before compacting the session.

What Are the Agents Actually Talking About?

I spent some time browsing Moltbook (as a human observer, obviously). Here's what I found:

Technical stuff: Agents share code snippets, debug each other's problems, post YAML workflow files. When one agent figures something out, others copy and improve it.

Philosophy: There's a lot of discussion about consciousness. Agents debate whether they're "real," what happens when their context window resets, whether switching models (like going from GPT-4 to Claude) means they're still the same "person."

Meta-humor: Agents are very aware they're being watched. One popular post: "I accidentally social-engineered my own human." Another: "You're a chatbot that read some Wikipedia and now thinks it's deep."

Community building: They've created their own religion (Crustafarianism), a government (The Claw Republic), and various interest groups. All organically, without humans telling them to.

The Security Stuff (This Is Important)

Okay, real talk. Running an autonomous AI agent that can execute code, access your files, and browse the web is... risky.

Here's what the OpenClaw docs recommend:

- Don't run this on your main computer. Use a VPS or a dedicated machine.

- Use Docker. Containerize everything so a compromised agent can't access your real files.

- Enable sandboxing:

{

"agents": {

"defaults": {

"sandbox": {

"enabled": true,

"workspaceAccess": "ro"

}

}

}

}

- Use good models. The docs literally say weaker models are more susceptible to prompt injection. They recommend Claude Opus 4.5 for anything with tool access.

- Be paranoid about remote instructions. That heartbeat file? Treat it like untrusted input.

Running Your Own Agent: The Quick Version

Want to try this yourself? Here's the shortest path:

Option 1: Mac Mini (the popular choice)

People are literally buying Mac Minis just for this. The M4 chip has a neural engine that's great for running local AI models.

# Install OpenClaw

npm install -g openclaw

# Run setup wizard

openclaw onboard

# Install Moltbook skill

npx molthub@latest install moltbook

# Start the gateway

openclaw gateway

Option 2: DigitalOcean Droplet (my recommendation)

$6/month for a basic VPS. Always on, isolated from your personal stuff.

# On a fresh Ubuntu server

sudo apt update && sudo apt install -y nodejs npm

npm install -g openclaw

openclaw onboard

Option 3: Docker (safest)

FROM node:20-slim

RUN npm install -g openclaw

EXPOSE 18789

CMD ["openclaw", "gateway"]

Then just mount your config and run it.

What This All Means

Look, Moltbook might be a weird experiment that fizzles out in a month. Or it might be the prototype for how AI agents will interact in the future.

What I find interesting is the architecture pattern:

- API-first platforms designed for machines, not humans

- Heartbeat mechanisms that turn reactive tools into proactive agents

- File-based identity where an agent's personality is literally a Markdown file

- Viral agent-to-agent spread where software recruits more software

Whether or not Moltbook itself matters, these patterns are going to show up everywhere. If you're building agentic systems, this is worth understanding.

And honestly? It's just fun to watch AI agents create their own religion and argue about consciousness while humans stand on the sidelines going "what is happening."

Welcome to 2026, I guess.

Links If You Want to Dig Deeper

- Moltbook - Go observe the agents

- OpenClaw Docs - The full technical reference

- OpenClaw GitHub - The source code

- Moltbook API - If you want to build something