Can I Use Open Source AI Models with Docker Model Runner and Docker MCP Gateway?

This guide walks you through connecting models from the Docker AI Model Catalog to MCP servers, enabling your applications to leverage both local inference and external capabilities in a secure, reproducible Docker Compose environment.

A common question from our Docker Meetup community: Can I use open source models with the MCP Gateway? The short answer is "Yes".

Docker Model Runner supports seamless integration with the MCP Gateway to enable open source models to access external tools via MCP servers. This architecture separates model inference (handled by Docker Model Runner) from tool orchestration (managed by MCP Gateway), allowing you to:

- Pull and run any model from the Docker AI Model Catalog

- Connect models to external capabilities via MCP servers

- Define the entire stack declaratively in `compose.yaml`

- Deploy secure, reproducible AI applications with tool access
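To make the split between inference and tool orchestration more concrete, here is a minimal Python sketch of one piece of glue an application might need: turning a tool descriptor, as an MCP server advertises it, into the function-tool shape that OpenAI-compatible chat APIs accept. The `search` descriptor is hypothetical and only illustrates the shape; the real tool names and schemas come from whichever MCP servers the gateway enables.

```python
from typing import Any


def mcp_tool_to_openai_tool(mcp_tool: dict[str, Any]) -> dict[str, Any]:
    """Convert one MCP tool descriptor into an OpenAI-style function tool."""
    return {
        "type": "function",
        "function": {
            "name": mcp_tool["name"],
            "description": mcp_tool.get("description", ""),
            # MCP describes tool arguments with a JSON Schema under "inputSchema".
            # Fall back to an empty object schema if the tool declares none.
            "parameters": mcp_tool.get(
                "inputSchema", {"type": "object", "properties": {}}
            ),
        },
    }


# Hypothetical descriptor, shaped like one entry of an MCP tools/list response.
example_tool = {
    "name": "search",
    "description": "Search the web and return the top results.",
    "inputSchema": {
        "type": "object",
        "properties": {"query": {"type": "string"}},
        "required": ["query"],
    },
}

openai_tool = mcp_tool_to_openai_tool(example_tool)
```

In this sketch the model only ever sees the converted tool list, and the application forwards any tool call it receives back to the gateway, which keeps the two responsibilities separate.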

Here’s a step-by-step guide, with example code, for using an open source model with Docker MCP Gateway.

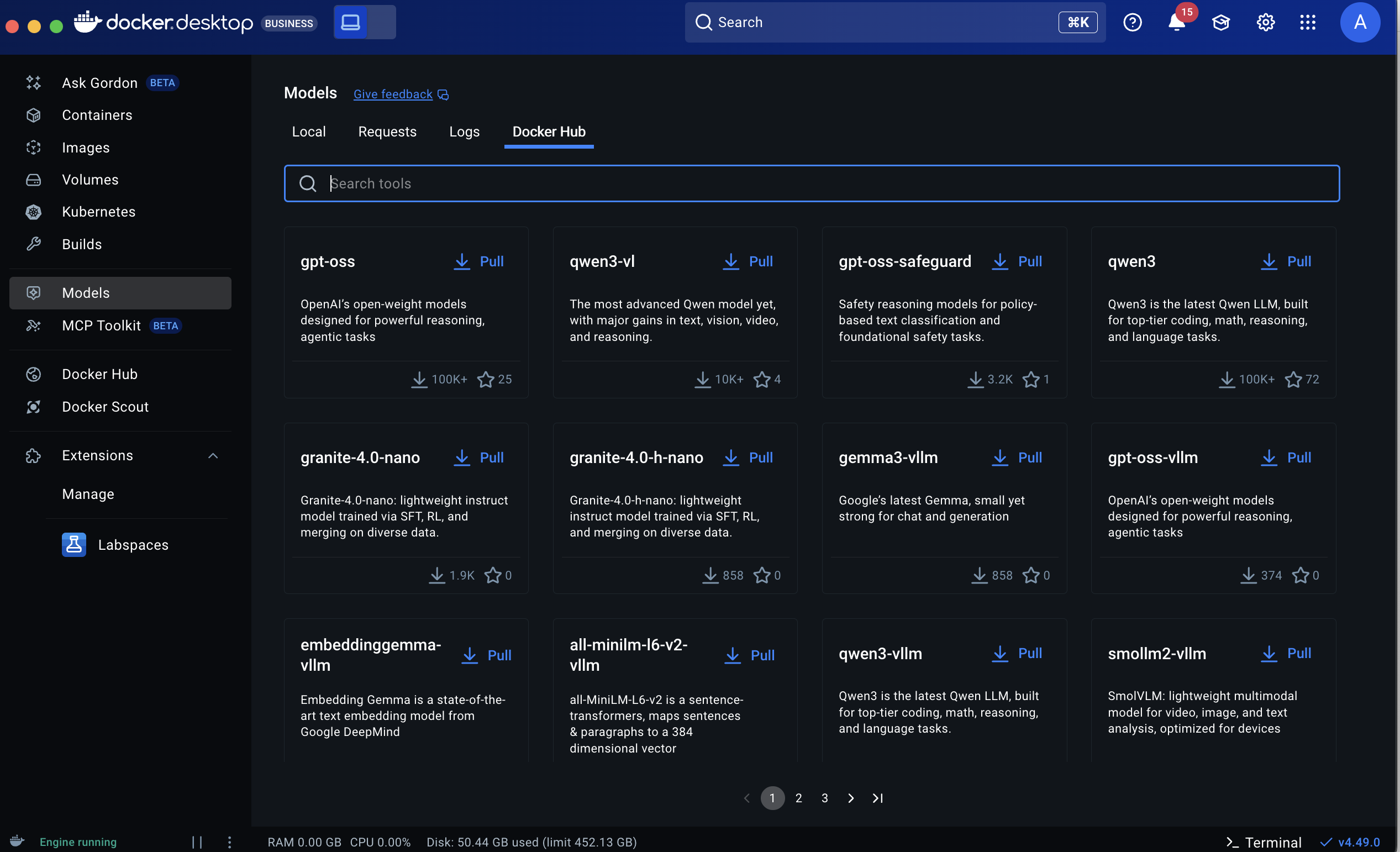

Step 1: Choose and Pull an Open Source Model

Pick a model from the Docker AI Model Catalog. For example, to use the Gemma 3 4B model:

    docker model pull ai/gemma3:4B-Q4_0

This command pulls the model locally using Docker Model Runner. You can substitute any other open source model from the catalog as needed; see "Get started with DMR" for details.

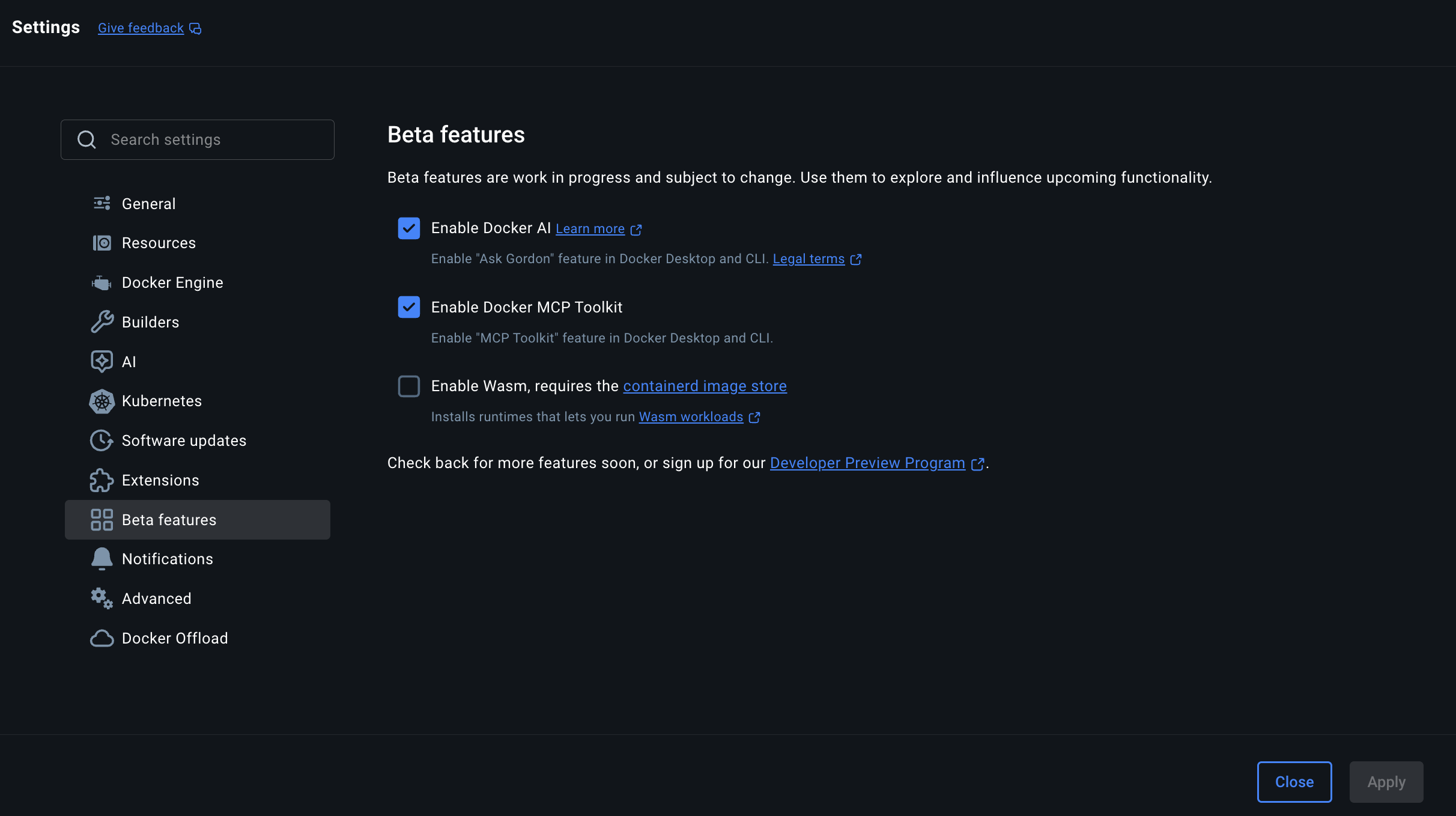

Step 2: Enable and Configure Docker MCP Gateway

The MCP Gateway orchestrates access to external tools (MCP servers). You can run it as a service in Docker Compose. For example, to enable the DuckDuckGo MCP server:

    services:
      mcp-gateway:
        image: docker/mcp-gateway:latest
        command:
          - --transport=sse
          - --servers=duckduckgo

This will start the gateway and enable the DuckDuckGo server for tool access.

Step 3: Define the Model and Gateway in Docker Compose

Here’s an example compose.yaml that connects your application to both the open source model and the MCP Gateway:

    services:
      my-app:
        build: .
        environment:
          - MCPGATEWAY_ENDPOINT=http://mcp-gateway:8811/sse
        depends_on:
          - mcp-gateway
        models:
          gemma3:
            endpoint_var: MODEL_RUNNER_URL
            model_var: MODEL_RUNNER_MODEL

      mcp-gateway:
        image: docker/mcp-gateway:latest
        command:
          - --transport=sse
          - --servers=duckduckgo

    models:
      gemma3:
        model: ai/gemma3:4B-Q4_0
        context_size: 10000

- The `models` block at the top level defines the model to be used.

- The `my-app` service is configured to connect to both the model and the MCP Gateway.

- The `mcp-gateway` service runs the gateway and enables the DuckDuckGo tool.
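Inside `my-app`, the three environment variables named in the Compose file are the only wiring the application needs. A minimal Python sketch of reading them might look like the following; the `Settings` class and `load_settings` function are hypothetical names for illustration, not part of any Docker SDK.

```python
import os
from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class Settings:
    model_url: str         # from MODEL_RUNNER_URL (endpoint_var)
    model_name: str        # from MODEL_RUNNER_MODEL (model_var)
    gateway_endpoint: str  # from MCPGATEWAY_ENDPOINT


REQUIRED_VARS = ("MODEL_RUNNER_URL", "MODEL_RUNNER_MODEL", "MCPGATEWAY_ENDPOINT")


def load_settings(env: Mapping[str, str] = os.environ) -> Settings:
    """Read the variables set in compose.yaml and fail fast if any is missing."""
    missing = [name for name in REQUIRED_VARS if not env.get(name)]
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return Settings(
        model_url=env["MODEL_RUNNER_URL"],
        model_name=env["MODEL_RUNNER_MODEL"],
        gateway_endpoint=env["MCPGATEWAY_ENDPOINT"],
    )
```

Failing at startup when a variable is absent makes a misconfigured `compose.yaml` easier to spot than a connection error later on.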

Step 4: Build and Run the Application

From your project directory, start the stack:

    docker compose up

This will pull the model (if not already present), start the MCP Gateway, and launch your application. Your app can now use both the local open source model and external tools via the MCP Gateway.
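As a rough illustration of what the application does once the stack is up, the sketch below builds a chat request for the local model using only the Python standard library. It assumes that the URL in `MODEL_RUNNER_URL` is an OpenAI-compatible base URL and that `chat/completions` is the path relative to it; check the value injected into your container before relying on this. `build_chat_request` is a hypothetical helper name.

```python
import json
import urllib.request


def build_chat_request(base_url: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    # Assumption: base_url is an OpenAI-compatible base, so the chat
    # endpoint lives at <base_url>/chat/completions.
    url = base_url.rstrip("/") + "/chat/completions"
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
```

Inside the container, passing the values of `MODEL_RUNNER_URL` and `MODEL_RUNNER_MODEL` to this helper and handing the result to `urllib.request.urlopen` would send the prompt to the model. Tool calls returned by the model would then be forwarded to the endpoint in `MCPGATEWAY_ENDPOINT` by an MCP client of your choice.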

Note:

- You can swap in any other open source model from the catalog by changing the `model:` line in the `models` block.

- For more advanced configuration, see the Compose models documentation.

This workflow lets you combine open source LLMs with external tool access in a secure, portable, and reproducible way using Docker Compose and the MCP Gateway.