Setting Up Docker MCP Toolkit with LM Studio: A Complete Technical Guide

Transform your local AI into a powerhouse with 176+ tools! Learn how to integrate Docker MCP Toolkit with LM Studio 0.3.17 for web search, GitHub operations, database management, and web scraping - all running locally with complete privacy. Complete setup guide with real troubleshooting tips. 🤖🔧

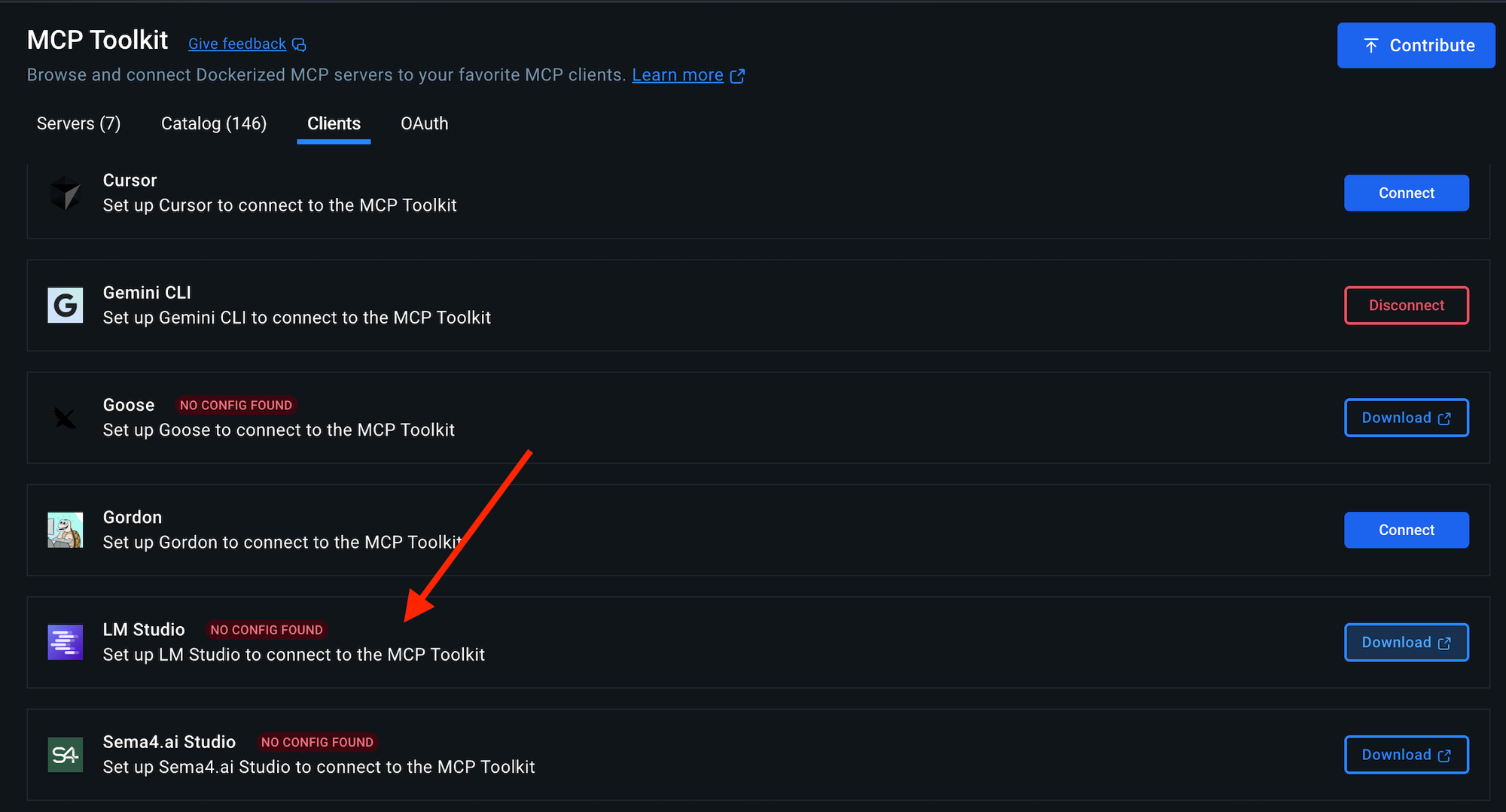

LM Studio 0.3.17 introduced Model Context Protocol (MCP) support, which lets local AI models call external tools. This guide walks through setting up the Docker MCP Toolkit with LM Studio, giving your local models access to 176+ tools including web search, GitHub operations, database management, and web scraping.

What is MCP?

Model Context Protocol (MCP) is an open standard that allows AI applications to securely connect to external data sources and tools. The Docker MCP Toolkit packages multiple MCP servers into containerized services, making it easy to add powerful capabilities to your local AI setup.

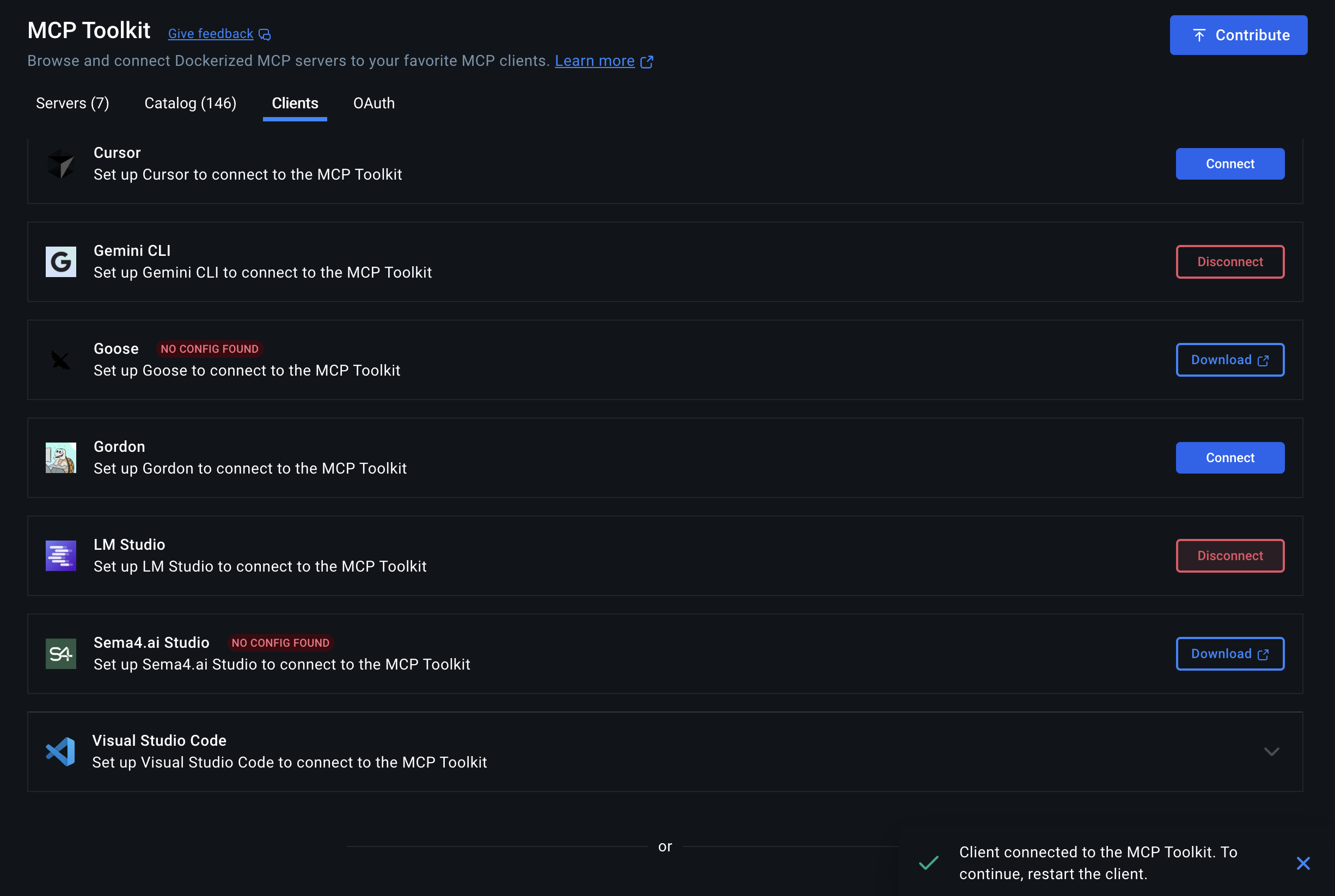

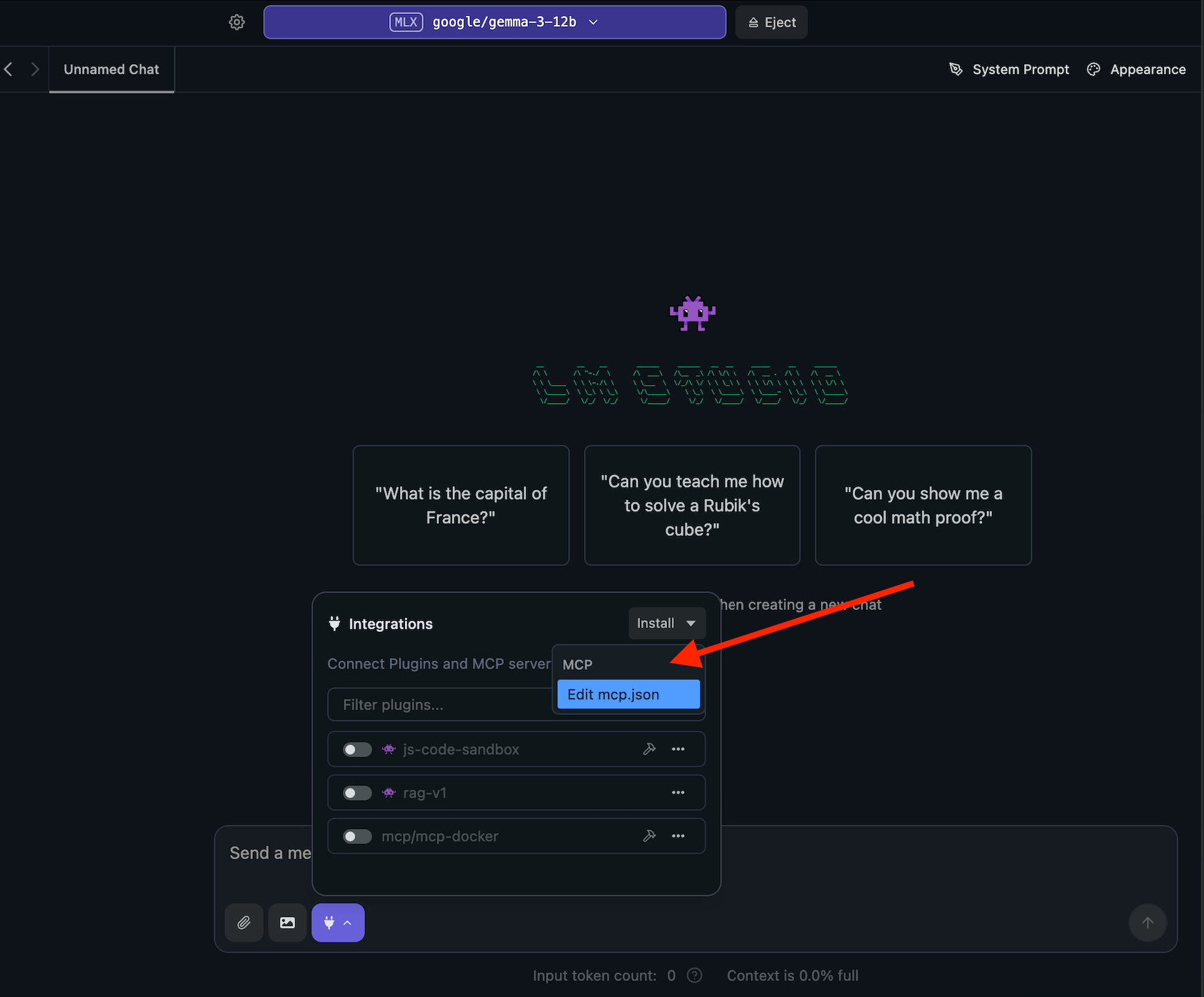

LM Studio supports both local and remote MCP servers. You can add MCP servers by editing the app's mcp.json file or, when available, via the new "Add to LM Studio" button.

Prerequisites

Before starting, ensure you have:

- macOS (this guide focuses on macOS setup)

- LM Studio 0.3.17 or later

- Docker Desktop installed and running

- Basic command line familiarity

Step 1: Install and Configure LM Studio

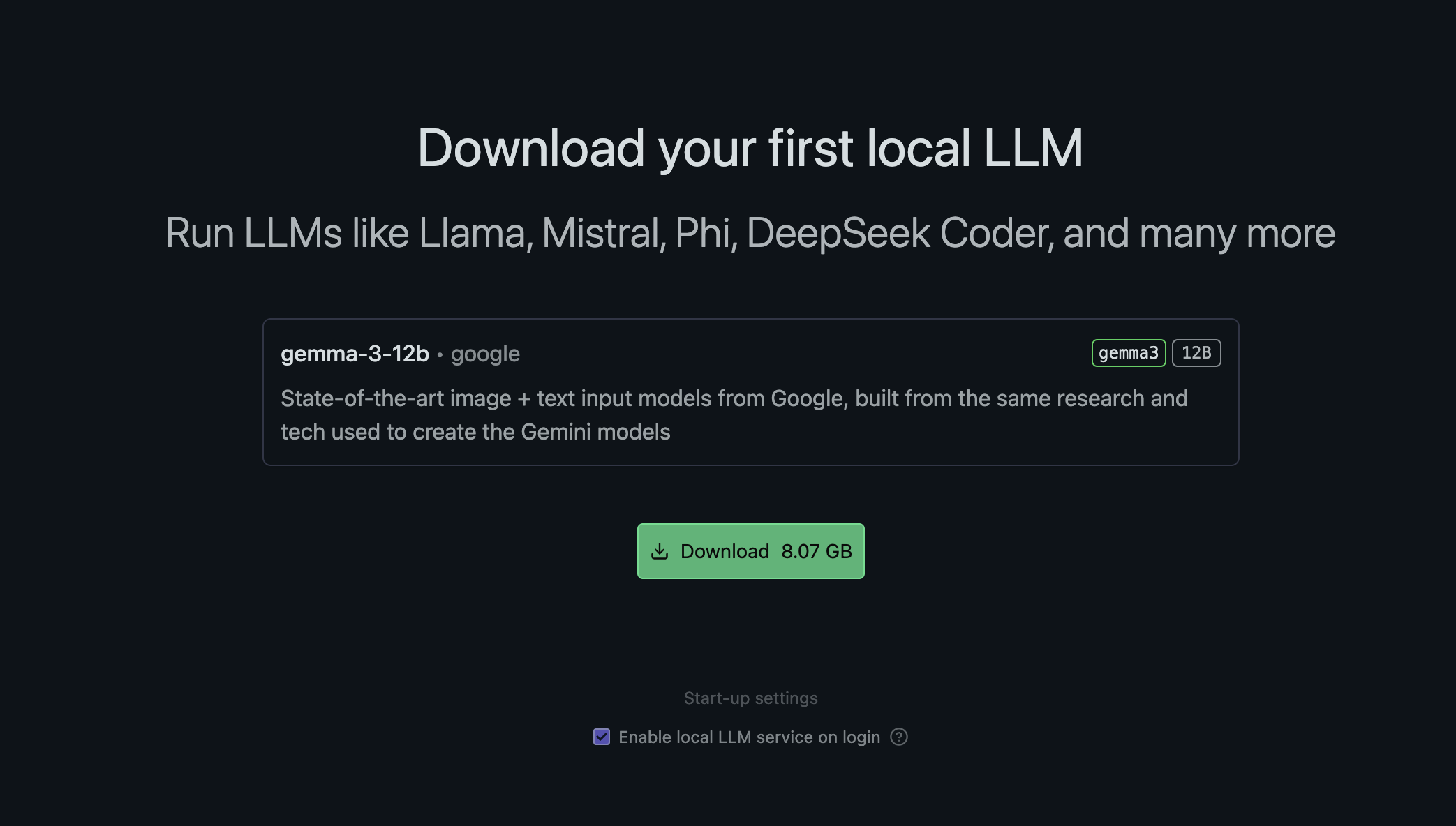

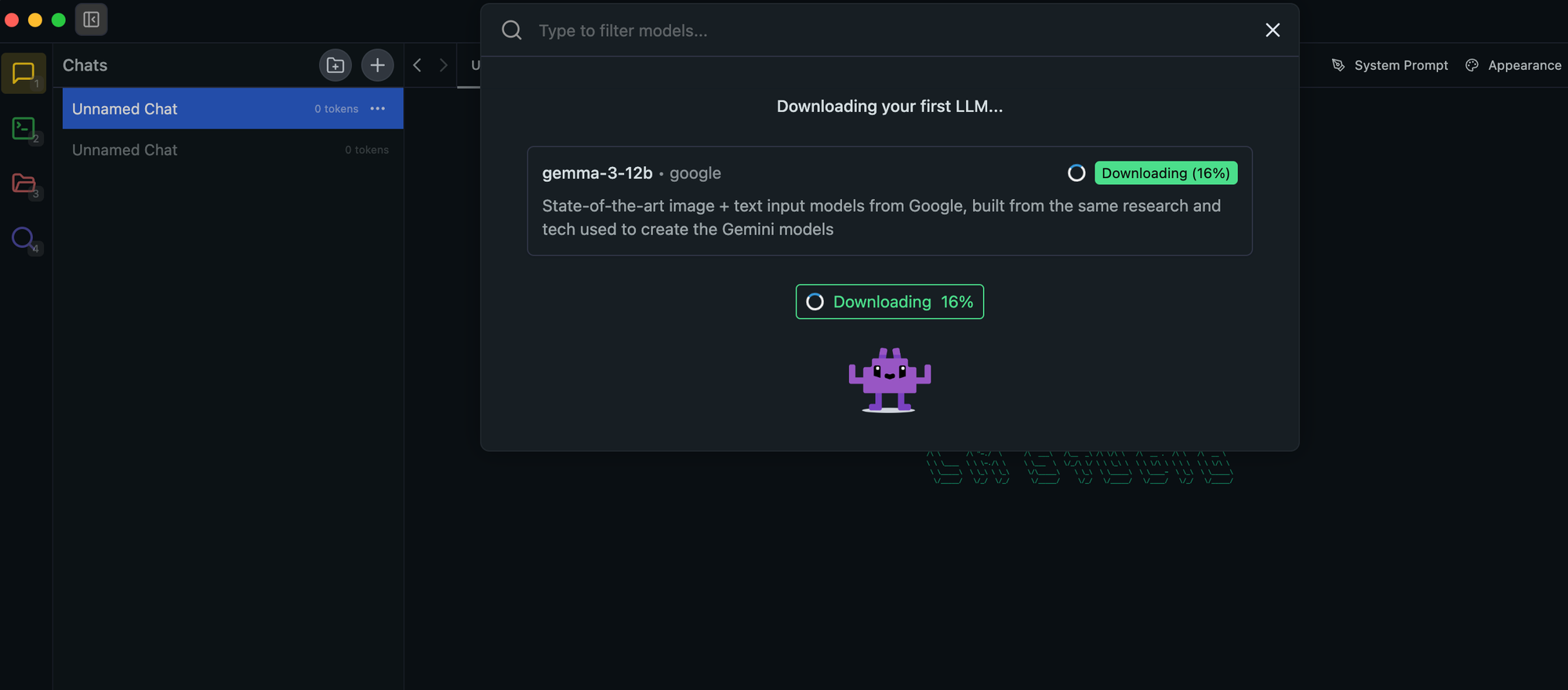

- Download LM Studio from the official website

- Install LM Studio

- Install a compatible model (Gemma3-12b recommended)

- Verify the model loads correctly before proceeding

Step 2: Set Up Docker MCP Toolkit

Install Docker MCP Toolkit

The Docker MCP Toolkit will automatically pull and configure multiple MCP servers. When you run it, you'll see something like this in the logs:

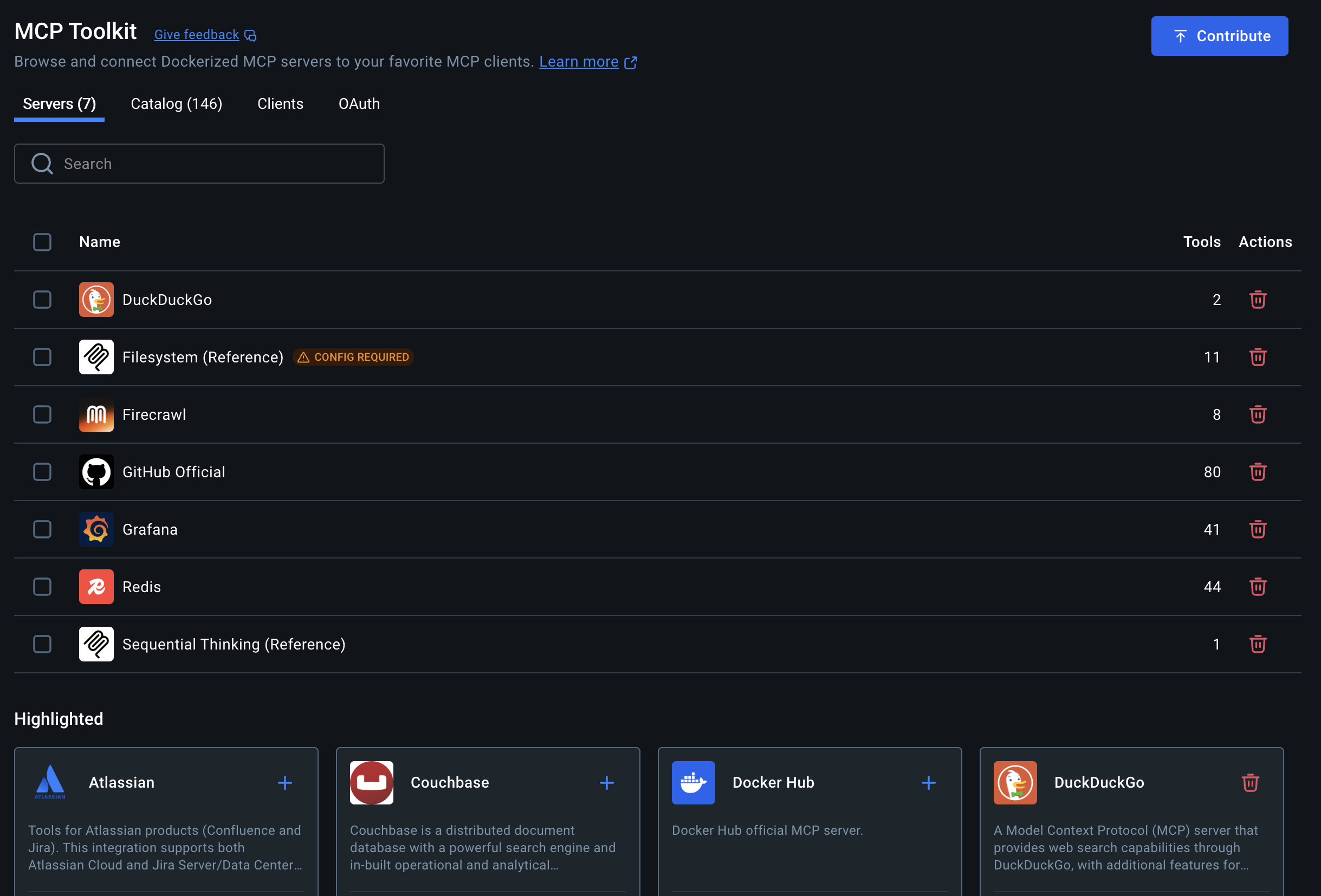

Available MCP Servers

The toolkit includes these powerful services:

| MCP Server | Tools | Capabilities |
|---|---|---|
| GitHub Official | 80 tools | Repository management, issue tracking, PR operations |
| Redis | 44 tools | Database operations, vector search, caching |
| Grafana | 41 tools | Monitoring, alerting, dashboard management |
| Firecrawl | 8 tools | Web scraping, content extraction |
| DuckDuckGo | 2 tools | Web search capabilities |
| Sequential Thinking | 1 tool | Complex reasoning and problem solving |
| Filesystem | Variable | File operations (may need configuration) |

Total: 176+ tools available
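As a quick sanity check on that total, the short Python sketch below sums the per-server counts from the table. The dictionary and function names are illustrative only; Filesystem is left out because its count is listed as "Variable".

```python
# Tool counts copied from the table above. Filesystem is omitted because
# its count is "Variable" and it contributes nothing when it fails to start.
TOOL_COUNTS = {
    "GitHub Official": 80,
    "Redis": 44,
    "Grafana": 41,
    "Firecrawl": 8,
    "DuckDuckGo": 2,
    "Sequential Thinking": 1,
}


def total_tools(counts: dict[str, int]) -> int:
    """Add up the number of tools across all listed servers."""
    return sum(counts.values())


print(total_tools(TOOL_COUNTS))  # 176
```

The six fixed-count servers already account for all 176 tools, so any Filesystem tools would come on top of that figure.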

Step 3: Configure LM Studio

The critical step is configuring LM Studio to connect to your Docker MCP setup.

Add the Docker MCP Configuration

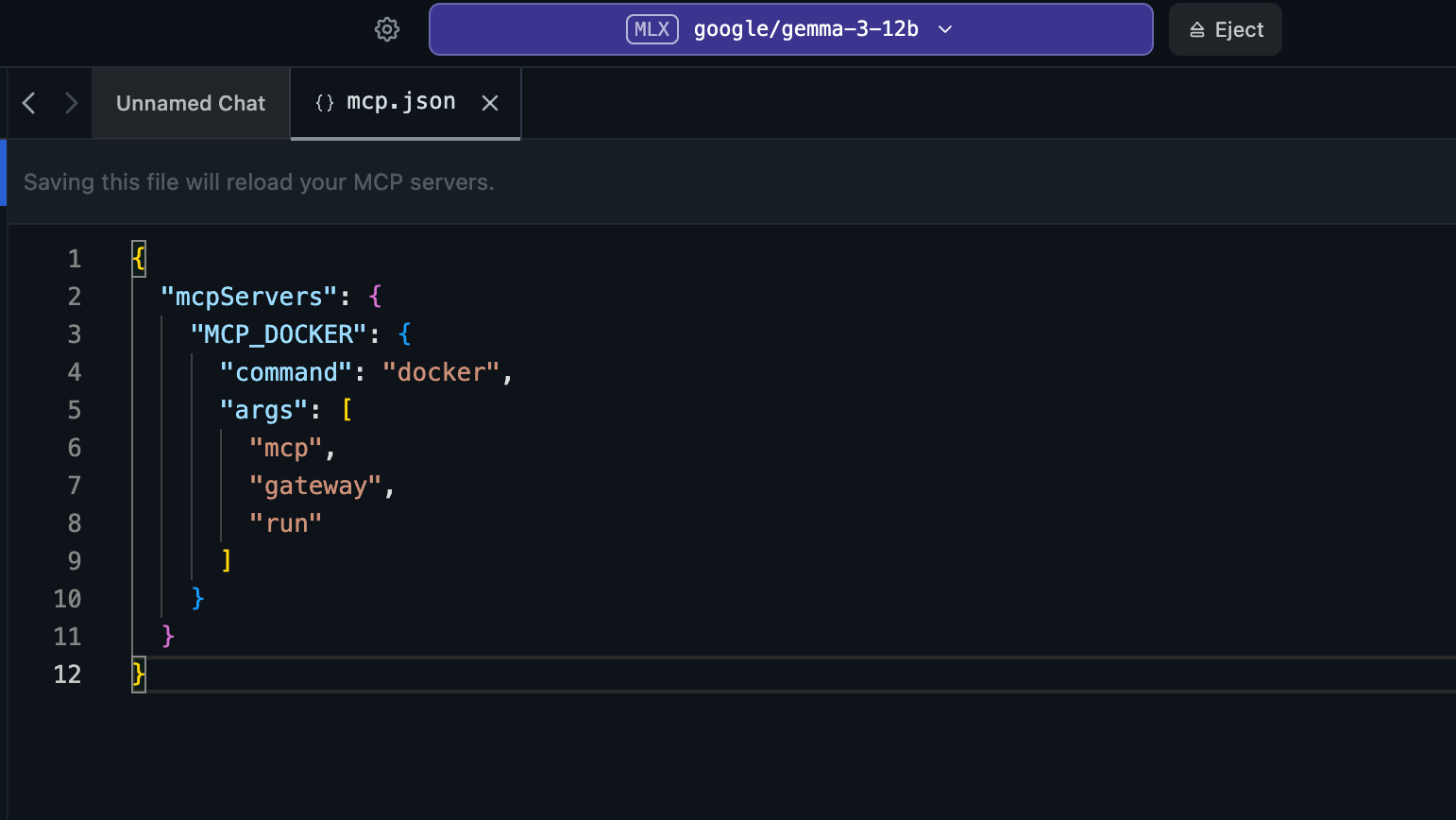

Edit the mcp.json file with this configuration:

    {
      "mcpServers": {
        "MCP_DOCKER": {
          "command": "docker",
          "args": ["mcp", "gateway", "run"]
        }
      }
    }

Restart LM Studio

After saving the configuration:

- Close LM Studio completely

- Restart the application

- Load your model

Check the Logs

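When everything is working correctly, you should see logs similar to the following. Most entries are tagged [ERROR] only because the gateway writes its progress messages to stderr; the one real failure in this run is the filesystem server, covered under Troubleshooting (Issue 2).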

Developer Logs

2025-08-03 14:55:44 [INFO]

[Plugin(lmstudio/rag-v1)] stdout: [PromptPreprocessor] Register with LM Studio

2025-08-03 14:55:44 [INFO]

[Plugin(lmstudio/js-code-sandbox)] stdout: [Tools Prvdr.] Register with LM Studio

2025-08-03 14:57:53 [ERROR]

[Plugin(mcp/mcp-docker)] stderr: - Reading configuration...

- Reading registry from registry.yaml

2025-08-03 14:57:53 [ERROR]

[Plugin(mcp/mcp-docker)] stderr: - Reading catalog from [docker-mcp.yaml]

2025-08-03 14:57:53 [ERROR]

[Plugin(mcp/mcp-docker)] stderr: - Reading config from config.yaml

2025-08-03 14:57:53 [ERROR]

[Plugin(mcp/mcp-docker)] stderr: - Reading secrets [firecrawl.api_key github.personal_access_token grafana.api_key redis.password]

2025-08-03 14:58:00 [ERROR]

[Plugin(mcp/mcp-docker)] stderr: - Configuration read in 7.026816958s

2025-08-03 14:58:00 [ERROR]

[Plugin(mcp/mcp-docker)] stderr: - Using images:

- ghcr.io/github/github-mcp-server@sha256:634fed5e94587a8b151e0b3457c92f196c1b61466ef890e0675ca40288a2efcc

- mcp/duckduckgo@sha256:68eb20db6109f5c312a695fc5ec3386ad15d93ffb765a0b4eb1baf4328dec14f

- mcp/filesystem@sha256:35fcf0217ca0d5bf7b0a5bd68fb3b89e08174676c0e0b4f431604512cf7b3f67

- mcp/firecrawl@sha256:f8dee5dd3e2c17dfd92e399d884d17ce51bc308bf8eb5f7cb225cf69bb96edad

- mcp/grafana@sha256:8c7d8da459b1276344f852835624c3c828c8403b76ec3a528e3963fa8452cda0

- mcp/redis@sha256:389504d6d301e06d621a22ff54f73360479bbd90aeaaefdb29cdf140e6487eb1

- mcp/sequentialthinking@sha256:cd3174b2ecf37738654cf7671fb1b719a225c40a78274817da00c4241f465e5f

2025-08-03 14:58:37 [ERROR]

[Plugin(mcp/mcp-docker)] stderr: > Images pulled in 37.077529875s

2025-08-03 14:58:37 [ERROR]

[Plugin(mcp/mcp-docker)] stderr: - Those servers are enabled: duckduckgo, filesystem, firecrawl, github-official, grafana, redis, sequentialthinking

- Listing MCP tools...

2025-08-03 14:58:37 [ERROR]

[Plugin(mcp/mcp-docker)] stderr: - Running ghcr.io/github/github-mcp-server with [run --rm -i --init --security-opt no-new-privileges --cpus 1 --memory 2Gb --pull never -l docker-mcp=true -l docker-mcp-tool-type=mcp -l docker-mcp-name=github-official -l docker-mcp-transport=stdio -e GITHUB_PERSONAL_ACCESS_TOKEN]

- Running mcp/sequentialthinking with [run --rm -i --init --security-opt no-new-privileges --cpus 1 --memory 2Gb --pull never -l docker-mcp=true -l docker-mcp-tool-type=mcp -l docker-mcp-name=sequentialthinking -l docker-mcp-transport=stdio]

2025-08-03 14:58:37 [ERROR]

[Plugin(mcp/mcp-docker)] stderr: - Running mcp/grafana with [run --rm -i --init --security-opt no-new-privileges --cpus 1 --memory 2Gb --pull never -l docker-mcp=true -l docker-mcp-tool-type=mcp -l docker-mcp-name=grafana -l docker-mcp-transport=stdio -e GRAFANA_API_KEY -e GRAFANA_URL] and command [--transport=stdio]

2025-08-03 14:58:37 [ERROR]

[Plugin(mcp/mcp-docker)] stderr: - Running mcp/duckduckgo with [run --rm -i --init --security-opt no-new-privileges --cpus 1 --memory 2Gb --pull never -l docker-mcp=true -l docker-mcp-tool-type=mcp -l docker-mcp-name=duckduckgo -l docker-mcp-transport=stdio]

- Running mcp/firecrawl with [run --rm -i --init --security-opt no-new-privileges --cpus 1 --memory 2Gb --pull never -l docker-mcp=true -l docker-mcp-tool-type=mcp -l docker-mcp-name=firecrawl -l docker-mcp-transport=stdio -e FIRECRAWL_API_KEY -e FIRECRAWL_API_URL -e FIRECRAWL_RETRY_MAX_ATTEMPTS -e FIRECRAWL_RETRY_INITIAL_DELAY -e FIRECRAWL_RETRY_MAX_DELAY -e FIRECRAWL_RETRY_BACKOFF_FACTOR -e FIRECRAWL_CREDIT_WARNING_THRESHOLD -e FIRECRAWL_CREDIT_CRITICAL_THRESHOLD]

- Running mcp/filesystem with [run --rm -i --init --security-opt no-new-privileges --cpus 1 --memory 2Gb --pull never -l docker-mcp=true -l docker-mcp-tool-type=mcp -l docker-mcp-name=filesystem -l docker-mcp-transport=stdio --network none] and command []

- Running mcp/redis with [run --rm -i --init --security-opt no-new-privileges --cpus 1 --memory 2Gb --pull never -l docker-mcp=true -l docker-mcp-tool-type=mcp -l docker-mcp-name=redis -l docker-mcp-transport=stdio -e REDIS_PWD -e REDIS_HOST -e REDIS_PORT -e REDIS_SSL -e REDIS_CLUSTER_MODE]

2025-08-03 14:58:39 [ERROR]

[Plugin(mcp/mcp-docker)] stderr: > grafana: (41 tools)

2025-08-03 14:58:39 [ERROR]

[Plugin(mcp/mcp-docker)] stderr: > github-official: (80 tools) (2 prompts) (5 resourceTemplates)

2025-08-03 14:58:39 [ERROR]

[Plugin(mcp/mcp-docker)] stderr: > Can't start filesystem: Error accessing directory : Error: ENOENT: no such file or directory, stat ''

at async Object.stat (node:internal/fs/promises:1031:18)

at async file:///app/dist/index.js:33:23

at async Promise.all (index 0)

at async file:///app/dist/index.js:31:1 {

errno: -2,

code: 'ENOENT',

syscall: 'stat',

path: ''

}

2025-08-03 14:58:39 [ERROR]

[Plugin(mcp/mcp-docker)] stderr: > sequentialthinking: (1 tools)

2025-08-03 14:58:39 [ERROR]

[Plugin(mcp/mcp-docker)] stderr: > firecrawl: (8 tools)

2025-08-03 14:58:39 [ERROR]

[Plugin(mcp/mcp-docker)] stderr: > duckduckgo: (2 tools)

2025-08-03 14:58:42 [ERROR]

[Plugin(mcp/mcp-docker)] stderr: > redis: (44 tools)

2025-08-03 14:58:42 [ERROR]

[Plugin(mcp/mcp-docker)] stderr: > 176 tools listed in 4.245409917s

- Watching for configuration updates...

> Initialized in 48.35971725s

> Start stdio server

2025-08-03 14:58:42 [ERROR]

[Plugin(mcp/mcp-docker)] stderr: > Initializing MCP server with ID: 0

2025-08-03 14:58:42 [INFO]

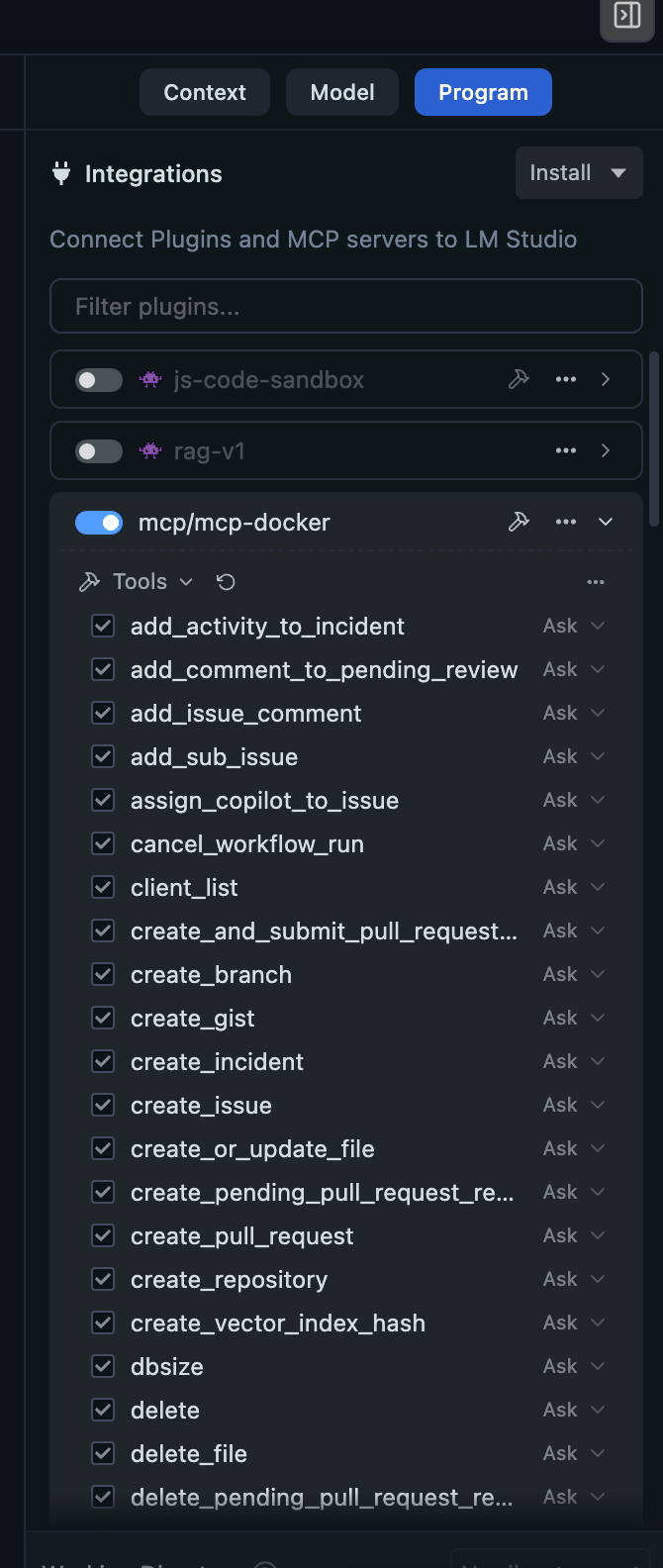

[Plugin(mcp/mcp-docker)] stdout: [Tools Prvdr.] Register with LM StudioWhat's Working Successfully

- Connection Established: LM Studio successfully connected to the Docker MCP system

- Multiple MCP Servers Running: 6 out of 7 MCP servers running successfully:

  - GitHub (80 tools) - for GitHub operations

  - Grafana (41 tools) - for monitoring and observability

  - Redis (44 tools) - for database operations

  - Firecrawl (8 tools) - for web scraping

  - DuckDuckGo (2 tools) - for web search

  - Sequential Thinking (1 tool) - for complex reasoning

- Total Tools Available: 176 tools are now accessible through LM Studio
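If you would rather confirm these numbers from the log than count by eye, a small script can pull out the per-server lines. This is a minimal sketch that assumes the gateway keeps printing lines of the form `> name: (N tools)` as in the log above; the function name and regular expression are my own, not part of LM Studio or Docker.

```python
import re

# Matches lines such as "> redis: (44 tools)"; the summary line
# "> 176 tools listed in ..." and the filesystem error do not match.
TOOL_LINE = re.compile(r">\s*([\w-]+):\s*\((\d+) tools\)")


def tool_counts(log_text: str) -> dict[str, int]:
    """Return {server name: tool count} for every server that started."""
    return {name: int(count) for name, count in TOOL_LINE.findall(log_text)}


SAMPLE = """\
[Plugin(mcp/mcp-docker)] stderr: > grafana: (41 tools)
[Plugin(mcp/mcp-docker)] stderr: > github-official: (80 tools) (2 prompts) (5 resourceTemplates)
[Plugin(mcp/mcp-docker)] stderr: > Can't start filesystem: Error accessing directory
[Plugin(mcp/mcp-docker)] stderr: > sequentialthinking: (1 tools)
[Plugin(mcp/mcp-docker)] stderr: > firecrawl: (8 tools)
[Plugin(mcp/mcp-docker)] stderr: > duckduckgo: (2 tools)
[Plugin(mcp/mcp-docker)] stderr: > redis: (44 tools)
[Plugin(mcp/mcp-docker)] stderr: > 176 tools listed in 4.245409917s
"""

counts = tool_counts(SAMPLE)
print(counts)
print(sum(counts.values()))
```

A server that failed to start, like filesystem here, simply does not appear in the result, which makes missing servers easy to spot.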

Test Basic Functionality

Start a new chat and try these test commands:

"What tools do you have access to?"

"Search for recent AI developments"

"List popular Python repositories on GitHub"

When you save the mcp.json file, LM Studio will automatically load the MCP servers defined in it. LM Studio spawns a separate process for each MCP server.

By now, you should see all the listed tools under LM Studio.

Troubleshooting Common Issues

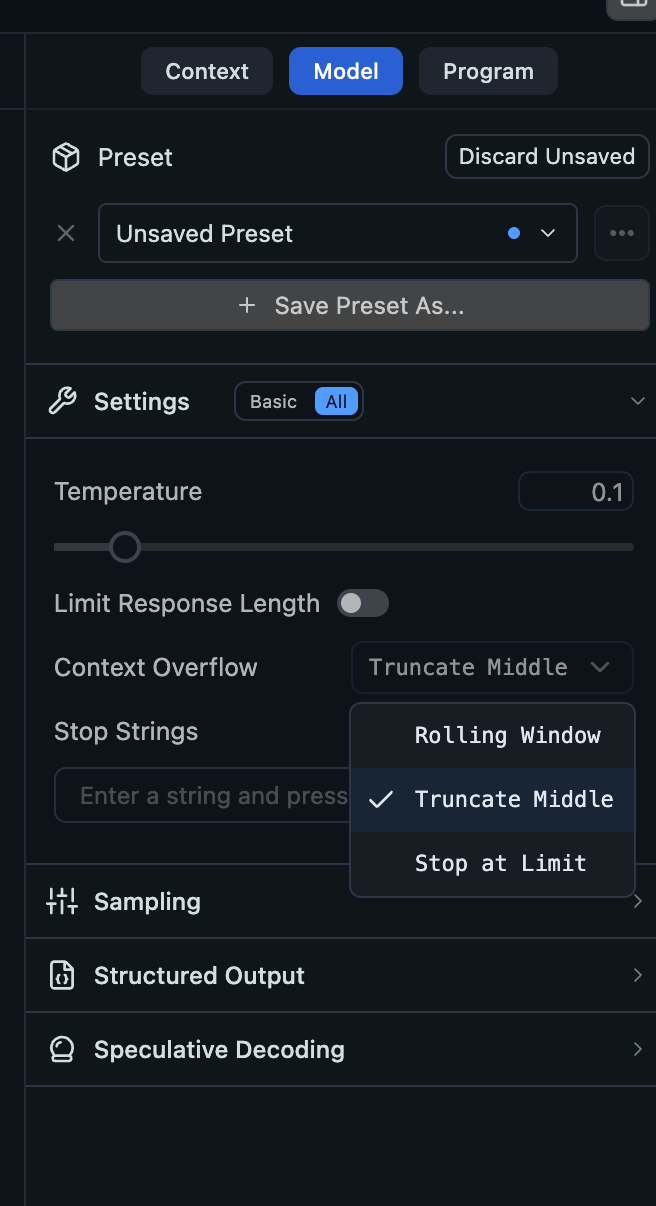

Issue 1: Context Length Errors

Problem: "The number of tokens to keep from the initial prompt is greater than the context length"

Solution:

- Open model settings in LM Studio

- Change Context Overflow from "Truncate Middle" to one of:

  - "Rolling Window" (recommended)

  - "Stop at Limit"
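To make the "Rolling Window" idea concrete, here is a simplified Python illustration of a policy that keeps only the most recent messages that fit in the context. It is a sketch of the general technique, not LM Studio's actual implementation; the function name and token counts are hypothetical.

```python
def rolling_window(token_counts: list[int], context_length: int) -> list[int]:
    """Return the indices of the most recent messages that fit the budget.

    token_counts[i] is the (hypothetical) token count of message i,
    oldest first. Older messages are dropped once the budget is used up.
    """
    kept: list[int] = []
    used = 0
    # Walk backwards from the newest message and stop at the first
    # message that would push the total over the context length.
    for index in range(len(token_counts) - 1, -1, -1):
        if used + token_counts[index] > context_length:
            break
        kept.append(index)
        used += token_counts[index]
    return kept[::-1]


# A large first message (for example, a prompt inflated by many tool
# definitions) followed by three short turns.
print(rolling_window([3000, 500, 700, 400], context_length=2000))
```

In this example the oversized first message is the one that falls out of the window, while the recent turns are kept.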

Issue 2: Filesystem MCP Server Fails

Problem: Can't start filesystem: Error accessing directory : Error: ENOENT: no such file or directory, stat ''

Solution: This is a configuration issue: the filesystem server was given an empty directory path (note the stat '' in the error). You can:

- Configure the correct directory path in your Docker MCP config

- Disable the filesystem server if not needed

Either way, the other 176 tools will still work fine; that count already excludes the filesystem server.
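The ENOENT code in that message is what the operating system returns when asked to stat an empty path. The filesystem server itself runs on Node.js, but the same behavior can be reproduced in a few lines of Python on macOS or Linux; the helper name below is illustrative.

```python
import errno
import os


def stat_error_code(path: str):
    """Return the errno name raised by os.stat(path), or None on success."""
    try:
        os.stat(path)
    except OSError as exc:
        return errno.errorcode.get(exc.errno)
    return None


print(stat_error_code(""))   # empty path, as in the filesystem server error
print(stat_error_code("."))  # the current directory exists
```

Seeing the same error for an empty string suggests the fix is to supply a real directory rather than to change permissions or reinstall the image.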

Issue 3: Model Not Responding

Problem: Model returns "This message contains no content. The AI has nothing to say"

Solutions:

- Start a fresh conversation

- Temporarily disable MCP by renaming mcp.json to test basic functionality

- Check Context Overflow settings

- Reload the model

Issue 4: MCP Connection Issues

Problem: Tools not appearing in LM Studio

Solutions:

- Verify Docker is running

- Check that the mcp.json syntax is correct

- Ensure LM Studio was restarted after configuration changes

- Check LM Studio logs for error messages
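For the mcp.json syntax check, a short script can catch both malformed JSON and a misspelled entry. The sketch below assumes the configuration shown in Step 3; `check_mcp_config` is a hypothetical helper, and you would pass it the contents of your own mcp.json, wherever LM Studio stores it on your machine.

```python
import json


def check_mcp_config(text: str, server: str = "MCP_DOCKER") -> list[str]:
    """Return a list of problems found in mcp.json content (empty if none)."""
    try:
        config = json.loads(text)
    except json.JSONDecodeError as exc:
        return [f"invalid JSON at line {exc.lineno}, column {exc.colno}: {exc.msg}"]
    if not isinstance(config, dict):
        return ["top level must be a JSON object"]
    servers = config.get("mcpServers")
    if not isinstance(servers, dict):
        return ['missing "mcpServers" object']
    entry = servers.get(server)
    if not isinstance(entry, dict):
        return [f'missing "{server}" entry']
    problems = []
    # Compare against the values used in this guide's configuration.
    if entry.get("command") != "docker":
        problems.append('"command" should be "docker"')
    if entry.get("args") != ["mcp", "gateway", "run"]:
        problems.append('"args" should be ["mcp", "gateway", "run"]')
    return problems


GOOD = '{"mcpServers": {"MCP_DOCKER": {"command": "docker", "args": ["mcp", "gateway", "run"]}}}'
BAD = '{"mcpServers": {"MCP_DOCKER": {"command": "docker",}}}'  # trailing comma

print(check_mcp_config(GOOD))
print(check_mcp_config(BAD))
```

An empty list means the file parses and matches the configuration from this guide; anything else points to the line or key to fix.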

Advanced Configuration

API Keys and Secrets

For full functionality, configure API keys for:

- GitHub Personal Access Token (for GitHub operations)

- Firecrawl API Key (for advanced web scraping)

- Grafana API Key (for monitoring features)

- Redis Password (if using external Redis)
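The gateway log also tells you which secret names it looks for, in the "Reading secrets [...]" line. As a rough sketch, the Python below splits that line into server and key pairs; it assumes the `server.key` naming seen in the log above, and the function name is hypothetical.

```python
import re


def expected_secrets(log_line: str) -> dict[str, str]:
    """Map each server prefix to its secret key from a 'Reading secrets' line."""
    match = re.search(r"Reading secrets \[([^\]]*)\]", log_line)
    if not match:
        return {}
    secrets = {}
    for item in match.group(1).split():
        # "github.personal_access_token" -> ("github", "personal_access_token")
        server, _, key = item.partition(".")
        secrets[server] = key
    return secrets


LINE = (
    "[Plugin(mcp/mcp-docker)] stderr: - Reading secrets "
    "[firecrawl.api_key github.personal_access_token grafana.api_key redis.password]"
)
print(expected_secrets(LINE))
```

Comparing this list with the keys you have actually configured is a quick way to see which servers will run with reduced functionality.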

Custom Tool Selection

You can customize which MCP servers to enable by modifying the Docker MCP configuration files:

- config.yaml - Main configuration

- docker-mcp.yaml - Server catalog

- registry.yaml - Server registry

Testing Your Setup

Basic Web Search

"Search for information about Docker containers"

GitHub Operations

"Show me trending JavaScript repositories"

"Create an issue in my repository"

Web Scraping

"Scrape the main content from https://example.com"

Database Operations

"Store some data in Redis and retrieve it"

Complex Reasoning

"Help me break down this complex problem step by step: [your problem]"

Performance Considerations

Resource Usage

- Each MCP server runs in its own Docker container

- Default limits: 1 CPU, 2GB RAM per container

- Total system impact: ~7 containers running simultaneously
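Taken together, the figures above give an upper bound on what the toolkit can claim from your machine. The small Python sketch below does the arithmetic; the class and function names are illustrative, and the result is a ceiling set by the container limits, not a measurement of typical usage.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ContainerLimit:
    """Per-container resource limits, as seen in the gateway log."""
    cpus: int
    memory_gb: int


def worst_case(limit: ContainerLimit, containers: int) -> tuple[int, int]:
    """Return (total CPUs, total memory in GB) if every container hits its limit."""
    return limit.cpus * containers, limit.memory_gb * containers


total_cpus, total_memory_gb = worst_case(ContainerLimit(cpus=1, memory_gb=2), containers=7)
print(f"Up to {total_cpus} CPUs and {total_memory_gb} GB RAM across 7 containers")
```

Idle MCP servers normally use far less than their limits, but the ceiling is worth knowing when the model itself also needs a large share of RAM.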

Optimization Tips

- Use Rolling Window context management for better memory usage

- Consider disabling unused MCP servers to reduce resource consumption

- Monitor Docker resource usage during intensive operations

Security Considerations

The Docker MCP setup includes several security measures:

- Containers run with --security-opt no-new-privileges

- Resource limits prevent runaway processes

- Network isolation for filesystem operations (--network none)

- Secrets management for API keys

Conclusion

Setting up Docker MCP Toolkit with LM Studio transforms your local AI model into a powerful agent capable of web search, code repository management, data analysis, and much more. With 176+ tools at your disposal, you can create sophisticated AI workflows that combine multiple services seamlessly.

The key to success is:

- Correct mcp.json configuration

- Proper context management settings

- Systematic troubleshooting when issues arise

Once configured, you'll have a local AI setup that rivals cloud-based solutions while maintaining complete privacy and control over your data.

What's Next?

- Experiment with combining multiple tools in single requests

- Set up API keys for enhanced functionality

- Explore custom MCP server development

- Consider scaling with additional Docker MCP instances

The Model Context Protocol ecosystem is rapidly expanding, and this setup positions you to take advantage of new capabilities as they become available.