Setting Up Local RAG with Docker Model Runner and cagent

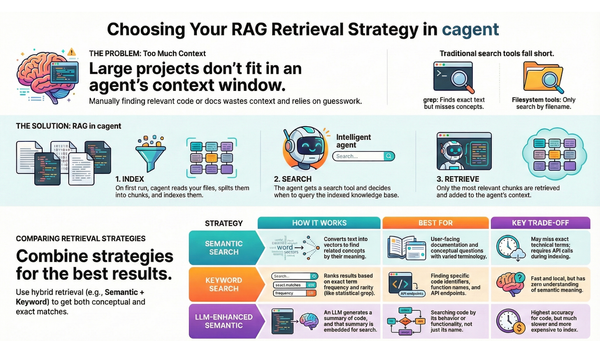

Want to run AI-powered code search without sending your proprietary code to the cloud? This tutorial walks you through setting up Retrieval-Augmented Generation (RAG) using Docker Model Runner (DMR) and cagent. You will run AI agents with semantic search capabilities entirely on your local machine, which keeps your code private, avoids API costs, and works offline.

Prerequisites

- Docker Desktop 4.49+ with Docker Model Runner enabled

- macOS, Windows, or Linux

- cagent v1.19.5+

Step 1: Enable Docker Model Runner

On Docker Desktop (macOS/Windows)

- Open Docker Desktop

- Go to Settings → AI

- Check Enable Docker Model Runner

- Click Apply & Restart

Verify Installation

docker model version

Expected output:

Docker Model Runner version v1.0.6

Docker Engine Kind: Docker Desktop

Step 2: Install cagent

Using Homebrew (macOS)

brew install cagent

Manual Installation (macOS ARM64)

curl -LO https://github.com/docker/cagent/releases/download/v1.19.5/cagent-darwin-arm64

chmod +x cagent-darwin-arm64

sudo mv cagent-darwin-arm64 /usr/local/bin/cagent

Verify Installation

cagent version

Expected output:

cagent v1.19.5
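Because the prerequisites call for cagent v1.19.5 or later, it can help to check the reported version in a script. The sketch below is a minimal, hypothetical helper: the names `parse_version` and `meets_minimum` are illustrative and not part of cagent, and it assumes the output format shown above (`cagent v1.19.5`).

```python
import re

MIN_CAGENT = (1, 19, 5)  # minimum version taken from the prerequisites


def parse_version(output: str) -> tuple[int, ...]:
    """Extract the first 'vX.Y.Z' (or 'X.Y.Z') triple from command output."""
    match = re.search(r"v?(\d+)\.(\d+)\.(\d+)", output)
    if match is None:
        raise ValueError(f"no version found in: {output!r}")
    return tuple(int(part) for part in match.groups())


def meets_minimum(output: str, minimum: tuple[int, ...] = MIN_CAGENT) -> bool:
    """Compare versions as integer tuples so that 1.9.x sorts below 1.19.x."""
    return parse_version(output) >= minimum


print(meets_minimum("cagent v1.19.5"))  # True
print(meets_minimum("cagent v1.18.0"))  # False
```

To check a real installation, the string passed in could come from `subprocess.run(["cagent", "version"], capture_output=True, text=True).stdout`.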

Step 3: Pull Required Models

A complete RAG setup uses up to three models: a chat model, an embedding model, and an optional reranker.

3.1 Pull the Chat Model

docker model pull ai/llama3.2

Or for a more powerful model:

docker model pull ai/qwen3

3.2 Pull the Embedding Model

docker model pull ai/embeddinggemma

3.3 (Optional) Pull the Reranker Model

docker model pull hf.co/ggml-org/qwen3-reranker-0.6b-q8_0-gguf

Verify Models

docker model list

Expected output:

MODEL NAME      PARAMETERS  QUANTIZATION    ARCHITECTURE     MODEL ID      CREATED        SIZE
embeddinggemma  302.86 M    Q8_0            gemma-embedding  b6635ddcd4cb  4 months ago   307.13 MiB
llama3.2        3.21 B      IQ2_XXS/Q4_K_M  llama            436bb282b419  10 months ago  1.87 GiB
qwen3           8.19 B      IQ2_XXS/Q4_K_M  qwen3            79fa56c07429  8 months ago   4.68 GiB
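The same check can be done in a script before running the agent. This is a rough sketch, assuming the column layout shown above with the model name in the first column. The helper names are hypothetical, and the prefix and tag stripping is a precaution in case names are listed as `ai/llama3.2` or `llama3.2:latest`.

```python
REQUIRED = ["llama3.2", "embeddinggemma"]  # chat and embedding models from this step

# Sample text copied from the expected output above.
SAMPLE_OUTPUT = """\
MODEL NAME      PARAMETERS  QUANTIZATION    ARCHITECTURE     MODEL ID      CREATED        SIZE
embeddinggemma  302.86 M    Q8_0            gemma-embedding  b6635ddcd4cb  4 months ago   307.13 MiB
llama3.2        3.21 B      IQ2_XXS/Q4_K_M  llama            436bb282b419  10 months ago  1.87 GiB
qwen3           8.19 B      IQ2_XXS/Q4_K_M  qwen3            79fa56c07429  8 months ago   4.68 GiB
"""


def listed_models(list_output: str) -> set[str]:
    """Return the bare model names from the first column, skipping the header row."""
    rows = [line for line in list_output.splitlines() if line.strip()]
    names = (row.split()[0] for row in rows[1:])
    # Drop an optional namespace prefix ("ai/") and tag (":latest").
    return {name.rsplit("/", 1)[-1].split(":")[0] for name in names}


def missing_models(list_output: str, required: list[str] = REQUIRED) -> list[str]:
    present = listed_models(list_output)
    return [name for name in required if name not in present]


print(missing_models(SAMPLE_OUTPUT))  # [] when both required models are present
```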

Step 4: Create Project Structure

Create a test project with sample files to index:

mkdir -p rag-dmr-test/src rag-dmr-test/docs

cd rag-dmr-test

4.1 Create Sample Source Code

cat > src/app.py << 'EOF'
"""
Main application file for the RAG Demo App.
This app processes customer orders and calculates shipping costs.
"""


def calculate_shipping(weight, distance):
    """Calculate shipping cost based on weight and distance.

    Args:
        weight: Package weight in pounds
        distance: Shipping distance in miles

    Returns:
        Total shipping cost in dollars
    """
    base_rate = 5.99
    weight_rate = 0.50 * weight
    distance_rate = 0.10 * distance
    return base_rate + weight_rate + distance_rate


def process_order(order_id, items):
    """Process a customer order and return total.

    Args:
        order_id: Unique order identifier
        items: List of items with price and quantity

    Returns:
        Dictionary with order_id and calculated total
    """
    total = sum(item['price'] * item['quantity'] for item in items)
    return {'order_id': order_id, 'total': total}


def apply_discount(total, discount_code):
    """Apply discount code to order total.

    Supported codes:
    - SAVE10: 10% off
    - SAVE20: 20% off
    - FREESHIP: Free shipping ($5.99 off)
    """
    discounts = {
        'SAVE10': 0.10,
        'SAVE20': 0.20,
        'FREESHIP': 5.99
    }
    if discount_code in discounts:
        if discount_code == 'FREESHIP':
            return total - discounts[discount_code]
        return total * (1 - discounts[discount_code])
    return total
EOF
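To see what the agent will be reasoning about, here is a short worked example of the shipping and order functions. It repeats the two formulas from src/app.py in condensed form so that it runs on its own. The order ID `A-1` and the item values are made up for illustration.

```python
def calculate_shipping(weight, distance):
    # Same formula as src/app.py: base rate + per-pound charge + per-mile charge.
    return 5.99 + 0.50 * weight + 0.10 * distance


def process_order(order_id, items):
    # Same logic as src/app.py: sum of price * quantity over all items.
    total = sum(item['price'] * item['quantity'] for item in items)
    return {'order_id': order_id, 'total': total}


# A 10 lb package shipped 100 miles: 5.99 + 5.00 + 10.00
shipping = calculate_shipping(10, 100)
print(f"shipping: ${shipping:.2f}")  # shipping: $20.99

order = process_order("A-1", [
    {"price": 20.0, "quantity": 2},
    {"price": 5.0, "quantity": 1},
])
print(order)  # {'order_id': 'A-1', 'total': 45.0}
```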

4.2 Create Sample Documentation

cat > docs/README.md << 'EOF'

# RAG Demo Project

This project demonstrates local RAG with Docker Model Runner.

## Features

- Order processing system

- Shipping cost calculator

- Discount code support

- Built with Python

## Installation

1. Clone the repository

2. Install dependencies: `pip install -r requirements.txt`

3. Run the app: `python src/app.py`

## Configuration

Set the following environment variables before running:

- `SHIPPING_API_KEY`: API key for shipping provider

- `DATABASE_URL`: Connection string for the database

- `DEBUG`: Set to "true" for debug mode

## API Endpoints

- `POST /orders`: Create a new order

- `GET /orders/{id}`: Get order details

- `POST /shipping/calculate`: Calculate shipping cost

## Discount Codes

- `SAVE10`: 10% off your order

- `SAVE20`: 20% off your order

- `FREESHIP`: Free shipping on any order

EOF

Step 5: Create the Agent Configuration

Create agent.yaml in your project root:

cat > agent.yaml << 'EOF'
agents:
  root:
    model: dmr/ai/llama3.2
    instruction: |
      You are a helpful coding assistant.
      Use the RAG tool to search the codebase before answering questions.
    rag: [codebase]
    toolsets:
      - type: filesystem
      - type: shell

rag:
  codebase:
    docs: [./src, ./docs]
    strategies:
      - type: chunked-embeddings
        embedding_model: dmr/ai/embeddinggemma
        vector_dimensions: 768
        database: ./code.db
        chunking:
          size: 1000
          overlap: 100
      - type: bm25
        database: ./bm25.db
EOF
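The `chunking` block in agent.yaml sets `size: 1000` and `overlap: 100`. The sketch below illustrates what fixed-size chunking with overlap generally means. It is not cagent's implementation: it assumes sizes are counted in characters (the tutorial does not say whether cagent counts characters or tokens), and `chunk_text` is a hypothetical name.

```python
def chunk_text(text: str, size: int = 1000, overlap: int = 100) -> list[str]:
    """Split text into windows of `size` characters.

    Each window starts `size - overlap` characters after the previous one,
    so neighbouring chunks share `overlap` characters of context.
    """
    if size <= 0 or not 0 <= overlap < size:
        raise ValueError("need size > 0 and 0 <= overlap < size")
    step = size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + size])
        if start + size >= len(text):
            break  # this window already reached the end of the text
    return chunks


document = "x" * 2500  # stand-in for a 2,500-character source file
chunks = chunk_text(document)
print([len(chunk) for chunk in chunks])  # [1000, 1000, 700]
```

The overlap means that a function or sentence falling on a chunk boundary still appears whole in at least one chunk, at the cost of indexing some text twice.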

Configuration Explained

| Field | Description |
|---|---|
| `model: dmr/ai/llama3.2` | Use Llama 3.2 via Docker Model Runner |
| `rag: [codebase]` | Enable RAG with the "codebase" source |
| `docs: [./src, ./docs]` | Directories to index |
| `embedding_model: dmr/ai/embeddinggemma` | Local embedding model |
| `vector_dimensions: 768` | Embedding vector size for embeddinggemma |
| `chunked-embeddings` | Semantic search strategy |
| `bm25` | Keyword search strategy (hybrid search) |
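Hybrid search means the semantic and keyword strategies each return their own ranked list, and the lists then have to be merged. One common way to do that is reciprocal rank fusion (RRF), sketched below. The tutorial does not state how cagent merges results for this configuration, so treat RRF here as an illustration of the idea, not a description of cagent's behavior. The chunk IDs are hypothetical.

```python
def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[tuple[str, float]]:
    """Merge ranked lists: each list contributes 1 / (k + rank) per document."""
    scores: dict[str, float] = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    # Highest combined score first.
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


# Hypothetical results for the query "discount codes".
semantic = ["src/app.py#2", "docs/README.md#4", "src/app.py#0"]      # embedding search
keyword = ["docs/README.md#4", "docs/README.md#1", "src/app.py#2"]   # BM25 search

fused = reciprocal_rank_fusion([semantic, keyword])
for doc_id, score in fused:
    print(f"{score:.4f}  {doc_id}")
```

Chunks that rank well in both lists (`docs/README.md#4` and `src/app.py#2` here) rise to the top, while chunks found by only one strategy still appear lower down.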

Step 6: Pre-warm the Models (Optional but Recommended)

Loading models takes time on first use. Pre-warm them:

# Warm up the chat model
curl http://localhost:12434/v1/chat/completions \
  -H "Content-Type: application/json" \
  -d '{"model": "ai/llama3.2", "messages": [{"role": "user", "content": "hi"}]}'

Expected output:

{
  "choices": [{
    "finish_reason": "stop",
    "message": {
      "role": "assistant",
      "content": "How can I assist you today?"
    }
  }],
  "model": "model.gguf",
  "usage": {
    "completion_tokens": 8,
    "prompt_tokens": 36,
    "total_tokens": 44
  }
}
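The same warm-up can be scripted with the Python standard library. This sketch mirrors the curl command above (same URL, header, and JSON body) and reads the reply from the response shape shown in the expected output. The function names are hypothetical, and `warm_up` should only be called while Docker Model Runner is reachable on port 12434.

```python
import json
import urllib.request

BASE_URL = "http://localhost:12434/v1"  # host, port, and prefix from the curl command


def chat_warmup_request(model: str = "ai/llama3.2") -> urllib.request.Request:
    """Build the same POST request that the curl command sends."""
    payload = {"model": model, "messages": [{"role": "user", "content": "hi"}]}
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def first_reply(response_body: str) -> str:
    """Pull the assistant text out of a chat completion response."""
    return json.loads(response_body)["choices"][0]["message"]["content"]


def warm_up(model: str = "ai/llama3.2", timeout: float = 300.0) -> str:
    """Send the request; the generous timeout allows for the initial model load."""
    with urllib.request.urlopen(chat_warmup_request(model), timeout=timeout) as response:
        return first_reply(response.read().decode("utf-8"))
```

Calling `print(warm_up())` once before starting the agent should then make the first interactive answer arrive faster.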

Step 7: Run the Agent

cagent run agent.yaml

First run behavior:

- cagent indexes your `./src` and `./docs` directories

- Creates `code.db` (vector embeddings) and `bm25.db` (keyword index)

- Loads the chat model

- Opens an interactive chat interface

Expected output:

┌─────────────────────────────────────────────────────────────┐
│  cagent                                                     │
├─────────────────────────────────────────────────────────────┤
│  Session                                                    │
│    New session                                              │
│                                                             │
│  Agent                                                      │
│    ▶ root                                                   │
│      Provider: dmr                                          │
│      Model: model.gguf                                      │
│                                                             │
│  Tools                                                      │
│    13 tools available                                       │
├─────────────────────────────────────────────────────────────┤
│  Type your message here...                                  │
└─────────────────────────────────────────────────────────────┘
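After the first run, the two index databases named in agent.yaml should exist in the project root. A quick way to confirm that indexing happened is to look for them. This is a minimal sketch: `index_status` is a hypothetical helper, and it assumes cagent stores each index as a single file at the configured path.

```python
from pathlib import Path

EXPECTED_INDEX_FILES = ("code.db", "bm25.db")  # database paths from agent.yaml


def index_status(project_dir: str = ".") -> dict[str, bool]:
    """Report which of the expected index files exist in project_dir."""
    root = Path(project_dir)
    return {name: (root / name).is_file() for name in EXPECTED_INDEX_FILES}


for name, present in index_status().items():
    print(f"{name}: {'found' if present else 'missing'}")
```

Run it from the `rag-dmr-test` directory. If either file is reported missing after `cagent run agent.yaml` has started, the `docs` paths and `database` paths in agent.yaml are the first things to check.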

Step 8: Test RAG Queries

Try these questions to test your RAG setup:

Question 1: Code Understanding

What does the calculate_shipping function do?

Expected response: The agent searches your codebase and explains the function based on the actual code in src/app.py.

Question 2: Documentation Retrieval

How do I configure this project?

Expected response: The agent retrieves information from docs/README.md about environment variables and configuration.

Question 3: Feature Discovery

What discount codes are available?

Expected response: The agent finds both the code implementation and documentation about SAVE10, SAVE20, and FREESHIP codes.

Question 4: Cross-reference

How is the FREESHIP discount different from percentage discounts?

Expected response: The agent analyzes the apply_discount function and explains that FREESHIP subtracts a flat $5.99 while others apply percentages.
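To check the agent's answer to Question 4 by hand, the discount logic can be run directly. The snippet repeats `apply_discount` from src/app.py in condensed form so that it runs on its own. The two order totals are arbitrary examples.

```python
def apply_discount(total, discount_code):
    # Same logic as src/app.py, condensed.
    discounts = {'SAVE10': 0.10, 'SAVE20': 0.20, 'FREESHIP': 5.99}
    if discount_code in discounts:
        if discount_code == 'FREESHIP':
            return total - discounts[discount_code]    # flat amount off
        return total * (1 - discounts[discount_code])  # percentage off
    return total  # unknown codes leave the total unchanged


for total in (20.00, 100.00):
    for code in ('SAVE10', 'SAVE20', 'FREESHIP'):
        print(f"${total:.2f} with {code}: ${apply_discount(total, code):.2f}")
```

On a $20.00 order FREESHIP gives the lowest total ($14.01, against $18.00 and $16.00), while on a $100.00 order it gives the highest ($94.01, against $90.00 and $80.00). A flat discount is worth more on small orders and a percentage discount is worth more on large ones.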

Summary

You now have a fully local RAG setup with:

| Component | Model | Purpose |
|---|---|---|
| Chat | Llama 3.2 or Qwen3 | Answer questions |
| Embeddings | embeddinggemma | Semantic search |
| BM25 | Built-in | Keyword search |
| Reranker | qwen3-reranker (optional) | Improve accuracy |