Supercharge Your Docker Compose Applications with AI Models

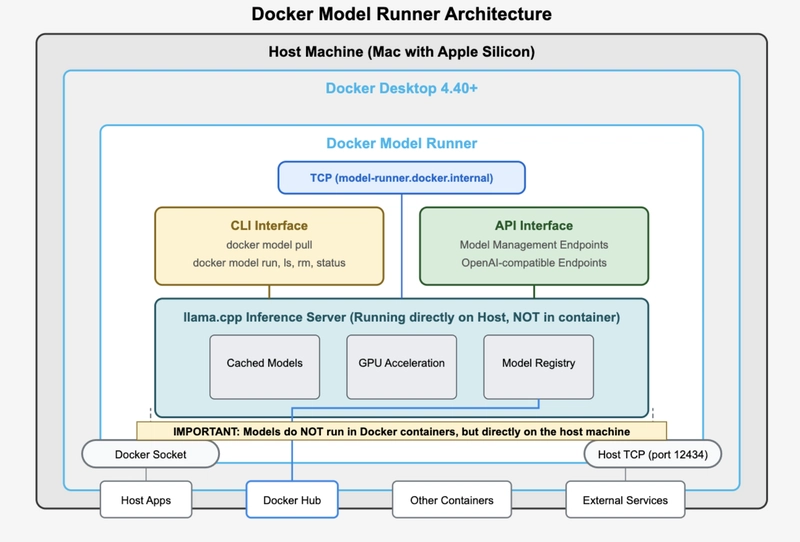

Docker Compose now supports AI models as first-class citizens with the new models top-level element. Adding machine learning capabilities to your applications is now as simple as defining a model and binding it to your services.

The world of containerized applications is evolving rapidly, and one of the most exciting developments is the integration of AI models directly into Docker Compose workflows. If you've been wondering how to seamlessly incorporate machine learning capabilities into your multi-service applications, you're in for a treat.

The Traditional Compose Setup

Let's start with a familiar scenario. Imagine you have a typical multi-service application with monitoring and tracing capabilities:

```yaml
services:
  backend:
    env_file: 'backend.env'
    build:
      context: .
      target: backend
    ports:
      - '8080:8080'
      - '9090:9090' # Metrics port
    healthcheck:
      test: ['CMD', 'wget', '-qO-', 'http://localhost:8080/health']
      interval: 3s
      timeout: 3s
      retries: 3
    networks:
      - app-network

  frontend:
    build:
      context: ./frontend
    ports:
      - '3000:3000'
    depends_on:
      backend:
        condition: service_healthy
    networks:
      - app-network

  prometheus:
    image: prom/prometheus:v2.45.0
    volumes:
      - ./prometheus/prometheus.yml:/etc/prometheus/prometheus.yml
    command:
      - '--config.file=/etc/prometheus/prometheus.yml'
      - '--storage.tsdb.path=/prometheus'
      - '--web.console.libraries=/etc/prometheus/console_libraries'
      - '--web.console.templates=/etc/prometheus/consoles'
      - '--web.enable-lifecycle'
    ports:
      - '9091:9090'
    networks:
      - app-network

  grafana:
    image: grafana/grafana:10.1.0
    volumes:
      - ./grafana/provisioning:/etc/grafana/provisioning
      - grafana-data:/var/lib/grafana
    environment:
      - GF_SECURITY_ADMIN_PASSWORD=admin
      - GF_USERS_ALLOW_SIGN_UP=false
      - GF_SERVER_DOMAIN=localhost
    ports:
      - '3001:3000'
    depends_on:
      - prometheus
    networks:
      - app-network

  jaeger:
    image: jaegertracing/all-in-one:1.46
    environment:
      - COLLECTOR_ZIPKIN_HOST_PORT=:9411
    ports:
      - '16686:16686' # UI
      - '4317:4317' # OTLP gRPC
      - '4318:4318' # OTLP HTTP
    networks:
      - app-network

volumes:
  grafana-data:

networks:
  app-network:
    driver: bridge
```

This setup gives you a robust application stack with monitoring via Prometheus and Grafana, plus distributed tracing through Jaeger. But what if you want to add AI capabilities to your backend service?

Enter the Models Top-Level Element

The models top-level element lets you declare AI models in your Compose file alongside services, networks, and volumes, and then bind each model to the services that need it.

Here's the basic syntax:

```yaml
services:
  backend:
    image: my-chat-app
    models:
      - llm

models:
  llm:
    model: ai/llama3.2:1B-Q8_0
```

This short addition defines a service called backend that uses a model named llm. The top-level llm entry points at the ai/llama3.2:1B-Q8_0 model, an OCI artifact that Compose pulls and runs for you.

Putting It All Together

Let's see how our complete application looks with AI models integrated:

```yaml
services:
  backend:
    env_file: 'backend.env'
    build:
      context: .
      target: backend
    ports:
      - '8080:8080'
      - '9090:9090' # Metrics port
    healthcheck:
      test: ['CMD', 'wget', '-qO-', 'http://localhost:8080/health']
      interval: 3s
      timeout: 3s
      retries: 3
    networks:
      - app-network
    models:
      - llm

  frontend:
    build:
      context: ./frontend
    ports:
      - '3000:3000'
    depends_on:
      backend:
        condition: service_healthy
    networks:
      - app-network

  prometheus:
    image: prom/prometheus:v2.45.0
    volumes:
      - ./prometheus/prometheus.yml:/etc/prometheus/prometheus.yml
    command:
      - '--config.file=/etc/prometheus/prometheus.yml'
      - '--storage.tsdb.path=/prometheus'
      - '--web.console.libraries=/etc/prometheus/console_libraries'
      - '--web.console.templates=/etc/prometheus/consoles'
      - '--web.enable-lifecycle'
    ports:
      - '9091:9090'
    networks:
      - app-network

  grafana:
    image: grafana/grafana:10.1.0
    volumes:
      - ./grafana/provisioning:/etc/grafana/provisioning
      - grafana-data:/var/lib/grafana
    environment:
      - GF_SECURITY_ADMIN_PASSWORD=admin
      - GF_USERS_ALLOW_SIGN_UP=false
      - GF_SERVER_DOMAIN=localhost
    ports:
      - '3001:3000'
    depends_on:
      - prometheus
    networks:
      - app-network

  jaeger:
    image: jaegertracing/all-in-one:1.46
    environment:
      - COLLECTOR_ZIPKIN_HOST_PORT=:9411
    ports:
      - '16686:16686' # UI
      - '4317:4317' # OTLP gRPC
      - '4318:4318' # OTLP HTTP
    networks:
      - app-network

models:
  llm:
    model: ai/llama3.2:1B-Q8_0

volumes:
  grafana-data:

networks:
  app-network:
    driver: bridge
```

Only two things changed: the models entry under backend and the new top-level models block. Your backend service now has access to a Llama 3.2 1B model (8-bit Q8_0 quantization) while keeping all the monitoring and tracing it already had.

Advanced Model Configuration

Models are more than simple references. They support configuration options that fine-tune their behavior:

```yaml
models:
  llm:
    model: ai/smollm2
    context_size: 1024
    runtime_flags:
      - "--a-flag"
      - "--another-flag=42"
```

The key configuration options include:

- model (required): The OCI artifact identifier for the model that Compose pulls and runs

- runtime_flags: A list of raw command-line flags passed to the inference engine when the model starts (for example, llama.cpp parameters)

- context_size: Maximum token context size for the model

Pro tip: Each model has its own maximum context size, and increasing it can impact performance. Keep your context size as small as feasible for your specific needs while considering your hardware constraints.
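To make the trade-off concrete: the prompt and the generated reply draw on the same context window, so a request only fits when their combined token count stays at or below context_size. The Python sketch below checks that budget. The 4-characters-per-token ratio is an assumed rough heuristic, not the model's real tokenizer, and the function names are hypothetical.

```python
"""Rough check that one request fits a model's context window.

Assumptions: prompt tokens and generated tokens share the same window, and
~4 characters per token stands in for the model's real tokenizer (actual
ratios vary by model and language). Function names are hypothetical.
"""

CHARS_PER_TOKEN = 4  # heuristic only, not a tokenizer


def estimate_tokens(text: str) -> int:
    """Estimate the token count from the character count, rounding up."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_context(prompt: str, max_output_tokens: int, context_size: int) -> bool:
    """True if the estimated prompt plus the requested output fits in context_size."""
    return estimate_tokens(prompt) + max_output_tokens <= context_size


if __name__ == "__main__":
    prompt = "Summarize the following incident report. " * 50
    # context_size: 1024, as in the smollm2 configuration example
    print(estimate_tokens(prompt), fits_context(prompt, 256, 1024))
```

Here the 2,050-character prompt is estimated at 513 tokens, so asking for 256 output tokens fits within 1024, while asking for 600 would not. For production use, count tokens with the model's own tokenizer instead of a character ratio.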

Service Model Binding Made Simple

Docker Compose offers flexible ways to bind models to services. The short syntax we've been using is the most straightforward:

```yaml
services:
  app:
    image: my-app
    models:
      - llm
      - embedding-model

models:
  llm:
    model: ai/smollm2
  embedding-model:
    model: ai/all-minilm
```

With this approach, Compose automatically generates environment variables based on your model names:

- LLM_URL: URL to access the LLM model
- LLM_MODEL: Model identifier for the LLM model
- EMBEDDING_MODEL_URL: URL to access the embedding model
- EMBEDDING_MODEL_MODEL: Model identifier for the embedding model

This means your application code can easily discover and connect to the AI models without hardcoding URLs or connection details.
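As an illustration, here is a minimal Python sketch of a service discovering and calling its bound model through those variables, using only the standard library. Two assumptions go beyond the text above: the naming rule in env_var_names() (upper-case the model key, replace hyphens with underscores, append _URL and _MODEL) is inferred from the four examples, and the _URL value is treated as the base URL of an OpenAI-compatible API, which is how Docker Model Runner exposes models. The helper names are hypothetical.

```python
"""Discover and call a model bound to this service via Compose's env vars.

Assumptions (not confirmed by the article):
- env_var_names() infers the naming rule from the LLM_* and
  EMBEDDING_MODEL_* examples; it is not taken from Compose's source.
- <NAME>_URL is the base URL of an OpenAI-compatible API, so chat
  requests go to <base>/chat/completions.
"""
import json
import os
import urllib.request
from collections.abc import Mapping


def env_var_names(model_key: str) -> tuple[str, str]:
    """Return the (URL, model id) variable names for a model key."""
    prefix = model_key.upper().replace("-", "_")
    return f"{prefix}_URL", f"{prefix}_MODEL"


def build_chat_request(
    model_key: str, prompt: str, environ: Mapping[str, str] = os.environ
) -> urllib.request.Request:
    """Build a chat-completions request for the model bound as model_key."""
    url_var, model_var = env_var_names(model_key)
    base_url = environ[url_var]  # KeyError here means the model is not bound
    body = {
        "model": environ[model_var],
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(model_key: str, prompt: str) -> str:
    """Send the request and return the first choice's message text."""
    request = build_chat_request(model_key, prompt)
    with urllib.request.urlopen(request, timeout=60) as resp:
        payload = json.load(resp)
    return payload["choices"][0]["message"]["content"]


if __name__ == "__main__":
    # Run inside the app container, where Compose injects LLM_URL and LLM_MODEL.
    print(ask("llm", "Say hello in one sentence."))
```

Reading the variables at call time instead of hardcoding an endpoint keeps the same code working wherever Compose wires the model in; an unbound model surfaces immediately as a KeyError rather than as a confusing network error later.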

Legacy Provider Services (Deprecated)

While you might encounter the older provider service approach in existing documentation, it's worth noting that this method is deprecated:

```yaml
services:
  chat:
    image: my-chat-app
    depends_on:
      - ai_runner

  ai_runner:
    provider:
      type: model
      options:
        model: ai/smollm2
        context-size: 1024
        runtime-flags: "--no-prefill-assistant"
```

Stick with the models top-level element for new projects: it's cleaner, easier to maintain, and represents the direction Docker Compose's AI integration is heading.
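Comparing the two examples suggests a fairly direct mapping from the legacy provider options to a models entry: context-size becomes context_size, and the single runtime-flags string becomes the runtime_flags list. The Python sketch below applies that mapping to a parsed service definition. The mapping is inferred from this article's examples rather than from an official migration tool, and the function name is hypothetical.

```python
"""Translate a legacy provider-service definition into a `models` entry.

Assumption: the key mapping below is inferred by comparing the legacy and
models-element examples in this article; it is not an official tool.
"""
import shlex


def migrate_provider_service(service: dict) -> dict:
    """Return a models-element entry equivalent to a legacy model provider."""
    provider = service["provider"]
    if provider.get("type") != "model":
        raise ValueError("not a model provider service")
    options = provider.get("options", {})
    entry = {"model": options["model"]}
    if "context-size" in options:
        entry["context_size"] = int(options["context-size"])
    if "runtime-flags" in options:
        # The legacy option is one string; the models element takes a list.
        entry["runtime_flags"] = shlex.split(options["runtime-flags"])
    return entry


if __name__ == "__main__":
    legacy_ai_runner = {
        "provider": {
            "type": "model",
            "options": {
                "model": "ai/smollm2",
                "context-size": 1024,
                "runtime-flags": "--no-prefill-assistant",
            },
        }
    }
    print(migrate_provider_service(legacy_ai_runner))
```

In the Compose file itself, the chat service would then drop its depends_on entry for ai_runner and list the model under its own models key instead.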

The Future is AI-Native Applications

By incorporating AI models directly into your Docker Compose workflows, you're not just adding features; you're building truly AI-native applications. Your models become as much a part of your infrastructure as your databases, message queues, and monitoring systems.

This approach offers several practical advantages: models are versioned and distributed as OCI artifacts, just like your container images; the whole stack, models included, is declared in one Compose file and started the same way; and debugging becomes easier when everything lives in the same Compose environment.

Whether you're building a chat application, adding semantic search capabilities, or implementing intelligent data processing, Docker Compose's model support makes it easier than ever to bring AI to your applications. The future of software development is AI-integrated, and with these tools, that future is available today.

Further Reading