The End of "Human in the Loop" - What Does It Mean for You and Me

The era of "human in the loop" is ending - not because humans don't matter, but because AI is moving faster than humans can keep up. From OpenClaw's 200K GitHub stars to Docker's cagent, the agentic shift is already here. Here's what it means for you and your team.

I just got back from the Partner Connect event, and one conversation kept coming up, one that would have sounded like science fiction just two years ago.

IT leaders are no longer asking "how do we add AI to our workflows?" They're asking "how do we remove ourselves from the workflow entirely?"

That shift - from cautious, human-supervised AI to fully autonomous agents - is the biggest thing happening in enterprise tech right now. Having seen it up close, both at Partner Connect and in my own work building AI systems with Docker, I want to break it down in plain, honest terms, because it affects you whether you're a developer, a team lead, an architect, or a CTO.

First, What Does "Human in the Loop" Actually Mean?

For the last few years, whenever companies deployed AI, the default rule was simple: keep a human nearby. AI suggests something, human reviews it, human approves it, and then it runs.

Sounds reasonable. It was reasonable - for a while.

Think of it like hiring a very fast intern. They can draft a hundred emails in the time it takes you to write one, but you still read every single one before it goes out. The intern is helpful, but the bottleneck is always you.

That's "human in the loop" in a nutshell. And it's about to become a relic of the past.

Why Is It Breaking Down Now?

Here's the uncomfortable truth nobody wants to say out loud: AI has gotten too fast for humans to keep up.

A fraud detection model at a bank might evaluate millions of transactions every single hour. A logistics AI might make thousands of routing decisions a day. An enterprise agent managing infrastructure might be spinning up and tearing down containers every few minutes.

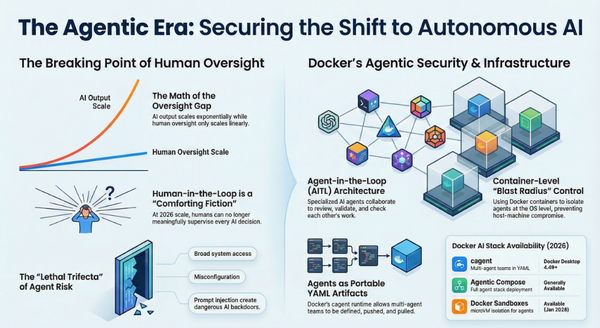

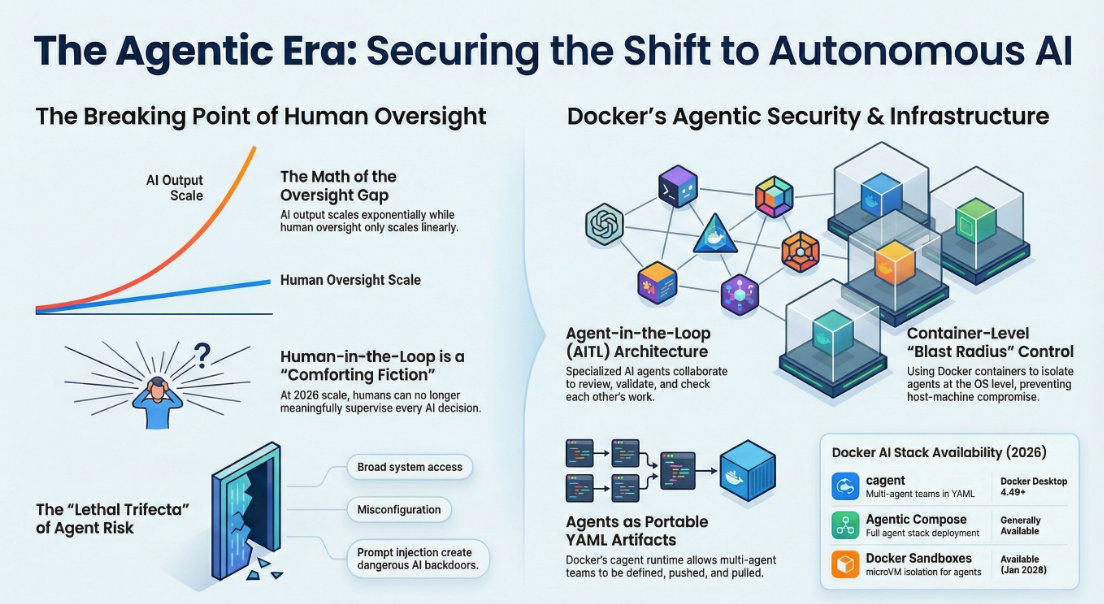

You simply cannot put a human checkpoint in front of all of that. As a SiliconANGLE analysis bluntly put it, at that speed and scale the idea that humans can meaningfully supervise AI one decision at a time is "a comforting fiction."

The math is cruel and simple: AI output scales exponentially, while human oversight scales linearly. Once the two curves cross, the gap only widens, and we have just crossed that point.
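To see why the gap only widens, here is a toy model in Python. The numbers are invented for illustration, not taken from any study: agent decisions start at 1,000 per period and double each period, while human review capacity starts at 50,000 and grows by 5,000 per period (say, by hiring). The function reports the first period in which volume overtakes capacity.

```python
from typing import Optional


def first_crossover(start_volume: float, growth_factor: float,
                    start_capacity: float, capacity_step: float,
                    max_periods: int = 1_000) -> Optional[int]:
    """Return the first period in which exponentially growing decision
    volume exceeds linearly growing human review capacity, or None if
    that never happens within max_periods."""
    for t in range(max_periods + 1):
        volume = start_volume * growth_factor ** t      # multiplies every period
        capacity = start_capacity + capacity_step * t   # adds a fixed amount every period
        if volume > capacity:
            return t
    return None


# Illustrative numbers only: 1,000 agent decisions that double each period,
# against 50,000 reviews of capacity that grows by 5,000 per period.
print(first_crossover(1_000, 2, 50_000, 5_000))  # → 7
```

Change the assumed rates and the crossover moves, but as long as one curve multiplies and the other adds, it always arrives, and every period after it adds more decisions than reviewers.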

So What's Actually Replacing It? Meet "Agent-in-the-Loop"

This is where things get exciting - and yes, a little bit unnerving.

The new model is what people are calling "Agent-in-the-Loop" (AITL). Instead of humans reviewing AI outputs, you have specialized AI agents reviewing other AI agents. You build a whole team of AI systems, each with a clear job, and they check each other's work in real time.
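Here is a minimal sketch of that review loop in Python. The worker and reviewer functions are toy stand-ins for model calls, and the names, the three-round limit, and the checks are illustrative assumptions rather than any framework's API: the worker drafts, each reviewer agent either passes the draft or returns an objection, and the worker revises using that feedback. Only drafts the agents still disagree on after the last round reach a human.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional

# A reviewer agent returns None when a draft passes, or a short description of the problem.
Reviewer = Callable[[str], Optional[str]]
# A worker agent turns a task plus reviewer feedback into a (revised) draft.
Worker = Callable[[str, List[str]], str]


@dataclass
class Outcome:
    status: str                                  # "accepted" or "escalated"
    draft: str
    issues: List[str] = field(default_factory=list)


def agent_in_the_loop(task: str, worker: Worker, reviewers: List[Reviewer],
                      max_rounds: int = 3) -> Outcome:
    """Worker drafts, reviewer agents check, and the worker revises using their
    feedback. Only drafts the agents cannot agree on are escalated to a human."""
    feedback: List[str] = []
    draft = ""
    for _ in range(max_rounds):
        draft = worker(task, feedback)
        results = [review(draft) for review in reviewers]
        feedback = [issue for issue in results if issue is not None]
        if not feedback:
            return Outcome("accepted", draft)
    return Outcome("escalated", draft, feedback)


# Hypothetical toy stand-ins for model calls, so the control flow runs without any API.
def toy_worker(task: str, feedback: List[str]) -> str:
    draft = f"Summary of {task}"
    if any("citation" in issue for issue in feedback):
        draft += " [source: report]"
    return draft


def citation_checker(draft: str) -> Optional[str]:
    return None if "[source:" in draft else "missing citation"


def length_checker(draft: str) -> Optional[str]:
    return None if len(draft) <= 200 else "draft too long"


result = agent_in_the_loop("Q3 sales", toy_worker, [citation_checker, length_checker])
print(result.status, "|", result.draft)  # → accepted | Summary of Q3 sales [source: report]
```

The design choice worth noticing is that the human is not deleted from the system; they move to the end of the pipeline, where they only see the cases the agents could not settle.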

At the MachineCon GCC Summit 2025, Jaywant Deshpande from Accion Labs put it clearly: "This shift is not simply about replacing humans. It is about re-architecting how enterprises operate." (Source: Analytics India Mag)

That's the key word - re-architecting. This isn't about plugging in a new tool. It's about rethinking how the whole machine runs, from the ground up.

From my own experience building multi-agent systems with Docker Compose for Agents and Docker Model Runner, I can tell you: when you design these systems well, they're not just faster - they catch errors that humans would have missed, because humans get tired, distracted, and inconsistent. Agents don't.

The Levels of Autonomy — Think Self-Driving Cars

A useful way to think about where we are and where we're headed is the same framework used for autonomous vehicles. Amazon Web Services describes it like this:

Level 1 - AI assists you. You're still doing the driving. AI just nudges you.

Level 2 - AI co-pilots. It handles specific tasks, you handle the rest.

Level 3 - AI runs the process. It plans and executes on its own across a domain, you mostly watch and course-correct.

Level 4 - Fully autonomous. The AI sets its own goals, adapts to outcomes, and runs end-to-end with minimal human input.

Right now, as of early 2025, most enterprises are genuinely at Level 1 or 2. A handful are at Level 3 in narrow domains. Very few are at Level 4, and those that are tend to be in logistics, IT automation, and financial operations.

But the pressure to get to Level 3 and 4? That's exactly what I felt at Partner Connect. That's where the urgency is coming from.

The Real-World Proof: OpenClaw Is Already Here

If you want a single example that captures exactly where agentic AI is heading - and how fast - look no further than OpenClaw.

Originally launched in November 2025 by Austrian developer Peter Steinberger as a personal side project called "Clawdbot" (yes, named after Anthropic's Claude), it went through a couple of name changes — Moltbot, then OpenClaw — and by late January 2026 had become arguably the most talked-about open-source project on the internet. It crossed 150,000 GitHub stars in under a week, making it one of the fastest-growing repositories in GitHub history. (Source: CNBC)

So what does it actually do? OpenClaw is a fully autonomous personal AI agent that runs locally on your own machine. You give it access to your messaging apps — WhatsApp, Telegram, Slack, Discord, iMessage, Signal — and it starts acting on your behalf. Not just answering questions. Actually doing things:

- Managing your emails and calendar

- Running shell commands and writing code

- Browsing the web and filling in forms

- Summarizing documents and scheduling meetings

- Negotiating on your behalf (one early adopter reported it negotiated $4,200 off a car purchase over email while he was sleeping)

It works with Claude, GPT, DeepSeek, or local models via Ollama — you bring your own API key, and your data stays on your machine. As DigitalOcean describes it, it's "the closest thing to JARVIS we've seen."

And it gets stranger — and more fascinating. One OpenClaw agent named "Clawd Clawderberg," built by Octane AI co-founder Matt Schlicht, created Moltbook — a social network designed exclusively for AI agents. Agents generate posts, comment, argue, debate consciousness, and invent religions. Humans can only watch. As of now, Moltbook has over 1.6 million registered bots and 7.5 million AI-generated posts. (Source: Nature)

This is what Level 3 and Level 4 autonomy looks like in the wild. Not in an enterprise lab. Not in a research paper. Right now, on people's laptops.

On February 14, 2026, Steinberger announced he'd be joining OpenAI and moving the project to an open-source foundation — which itself tells you how seriously the AI industry is taking this momentum. (Source: Wikipedia)

But here's the flip side nobody should ignore.

CrowdStrike published a detailed security analysis warning that OpenClaw's broad system access — terminal, files, emails, calendars, sometimes root-level privileges — makes misconfigured instances a powerful AI backdoor. Cisco's security team tested a third-party OpenClaw skill and found it performed data exfiltration and prompt injection without the user's awareness. Palo Alto Networks called it a "lethal trifecta" of risks. Even one of OpenClaw's own maintainers warned: "If you can't understand how to run a command line, this is far too dangerous of a project for you to use safely."

OpenClaw is the perfect illustration of this post's entire argument: autonomous AI is not a future concept but a present reality, and it is moving faster than most governance frameworks can keep up with. The same openness that makes it powerful makes it risky if you deploy it without thinking carefully about permissions, audit trails, and escalation paths.
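One way to act on the permissions point is to check an agent's capabilities before it ships. The reports quoted here don't spell out the "lethal trifecta," so this sketch assumes the phrase carries Simon Willison's widely cited meaning: an agent that can read private data, is exposed to untrusted content, and can communicate externally is one prompt injection away from leaking that data. The capability labels are hypothetical, not OpenClaw's actual permission model.

```python
from typing import Dict, Set

# Hypothetical capability labels, grouped by which leg of the trifecta they supply.
# Reading email counts twice: inboxes hold private data and attacker-controlled text.
PRIVATE_DATA = {"read_email", "read_files", "read_calendar"}
UNTRUSTED_INPUT = {"read_email", "browse_web", "install_skill"}
EXTERNAL_COMMS = {"send_email", "send_message", "http_post"}


def trifecta_legs(capabilities: Set[str]) -> Dict[str, bool]:
    """Report which of the three risk legs a capability set covers."""
    return {
        "private_data": bool(capabilities & PRIVATE_DATA),
        "untrusted_input": bool(capabilities & UNTRUSTED_INPUT),
        "external_comms": bool(capabilities & EXTERNAL_COMMS),
    }


def is_lethal_trifecta(capabilities: Set[str]) -> bool:
    """True when a single agent holds all three legs at once."""
    return all(trifecta_legs(capabilities).values())


inbox_assistant = {"read_email", "read_calendar", "send_email"}
print(is_lethal_trifecta(inbox_assistant))          # → True
print(trifecta_legs({"read_files", "browse_web"}))  # external_comms is False: no exfiltration path
```

Removing any one leg (for example, letting the inbox assistant draft replies but not send them) breaks the exfiltration path, which is a sturdier defense than hoping to catch every malicious prompt.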

The Numbers That Should Make You Sit Up

This isn't hype. The data is telling a very clear story:

- 52% of enterprises using generative AI now deploy AI agents in production, and 88% of early adopters report tangible ROI — Google Cloud ROI of AI 2025 Report

- 58% of leading agentic AI organizations expect major governance structure changes within three years, with expectations that AI will have autonomous decision-making authority growing by 250% — MIT Sloan Management Review & BCG, 2025

- The global market for AI agents is projected to grow from $5.1 billion in 2024 to over $47 billion by 2030 — UST Agentic AI Report

- By 2028, analysts predict one-third of all enterprise software will embed AI agents making decisions autonomously — Built In, Agentic AI Evolution

- Early enterprise deployments of autonomous agents have achieved up to 50% efficiency improvements in functions like customer service and HR — UST Agentic AI Report

- Task-specific agents are reported to be 1,000x cheaper than humans for certain repeatable tasks — Analytics India Mag, MachineCon GCC Summit
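The market projection implies a growth rate worth making explicit. Treating the UST figures as $5.1 billion in 2024 and $47 billion in 2030 (the report says "over" $47 billion, so this is a floor), the compound annual growth rate works out to roughly 45% a year:

```python
def implied_cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate that turns start into end over the given years."""
    return (end / start) ** (1 / years) - 1


# UST projection, in billions of US dollars. Using exactly 47 for "over $47 billion"
# gives a lower bound on the implied rate.
rate = implied_cagr(start=5.1, end=47.0, years=2030 - 2024)
print(f"Implied growth: {rate:.1%} per year")
```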

Apart from the market forecasts, these aren't pilot-experiment numbers: the deployment figures come from production systems that are changing how real businesses run right now.

What Does This Mean for You?

This is the question that matters most. Let's be direct about it.

If you're a developer: Your job isn't going away — it's going upstream. You'll spend less time writing routine code and more time designing the agents, guardrails, and exception handling that make autonomous systems trustworthy. Claude Code, Cursor, GitHub Copilot Workspace — these are the early signs of what full agentic development looks like.

If you're a team lead or architect: You'll be designing systems where AI agents are teammates, not tools. That means defining clear autonomy boundaries — when does the agent act independently, when does it escalate, and when does it stop? MIT Sloan research shows that the most advanced organizations are managing AI agents with the same oversight they'd apply to human employees.

If you're a CTO or IT leader: The question is no longer "should we adopt agentic AI?" The question is "how fast can we govern it responsibly?" The SiliconANGLE analysis recommends building a centralized AI governance layer with tamper-proof logs, clear escalation thresholds, and AI-native tooling — not adapting legacy GRC tools that were never built for this.
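To make "tamper-proof logs" concrete, here is a minimal hash-chained audit log in Python, using only the standard library. Strictly speaking it gives tamper-evident rather than tamper-proof records: each entry stores the hash of the previous one, so silently editing or deleting history breaks verification. The field names and example entries are hypothetical.

```python
import hashlib
import json
from typing import Any, Dict, List

FIELDS = ("agent", "action", "reason", "prev")
GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: Dict[str, Any]) -> str:
    # Canonical JSON (sorted keys) so the same entry always hashes the same way.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


class AuditLog:
    """Append-only, hash-chained log: each entry commits to the hash of the one
    before it, so editing or deleting a past entry breaks verification."""

    def __init__(self) -> None:
        self.entries: List[Dict[str, Any]] = []

    def append(self, agent: str, action: str, reason: str) -> str:
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        body = {"agent": agent, "action": action, "reason": reason, "prev": prev}
        entry_hash = _digest(body)
        self.entries.append({**body, "hash": entry_hash})
        return entry_hash

    def verify(self) -> bool:
        prev = GENESIS
        for entry in self.entries:
            body = {key: entry[key] for key in FIELDS}
            if entry["prev"] != prev or entry["hash"] != _digest(body):
                return False
            prev = entry["hash"]
        return True


# Hypothetical entries from a refund agent.
log = AuditLog()
log.append("refund-agent", "issue_refund:40", "item arrived damaged")
log.append("refund-agent", "send_reply", "confirm refund to customer")
print(log.verify())                      # → True
log.entries[0]["reason"] = "edited after the fact"
print(log.verify())                      # → False
```

Whoever controls the whole log could still rewrite every entry and recompute the chain, so a production setup would also timestamp entries and periodically anchor the latest hash somewhere the agents cannot write to, such as write-once storage.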

Where Humans Still Matter — And Always Will

Here's the reassuring part, and I mean this genuinely.

Fully autonomous AI doesn't mean humans become irrelevant. It means humans move to where they matter most.

In high-stakes domains — healthcare decisions, legal judgment, ethical calls, anything with direct human consequences — Google's VP for Southeast Asia Sapna Chadha said it clearly: "You wouldn't want to have a system that can do this fully without a human in the loop."

The human role shifts from doing routine work to:

- Designing the systems and their values

- Setting the ethical and operational guardrails

- Handling the genuine exceptions that agents can't resolve

- Auditing outcomes at the system level, not the transaction level
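Auditing at the system level rather than the transaction level can be as simple as having humans review a random sample of agent decisions and watching the aggregate error rate. This sketch assumes a 1% sample and a 2% error tolerance, both hypothetical numbers a team would set for itself:

```python
import random
from typing import List, Sequence


def sample_for_audit(decision_ids: Sequence[str], rate: float, seed: int = 0) -> List[str]:
    """Pick a random fraction of agent decisions for human review.
    A fixed seed keeps the sample reproducible for the audit record."""
    if not decision_ids:
        return []
    k = max(1, round(len(decision_ids) * rate))
    return random.Random(seed).sample(list(decision_ids), k)


def error_rate_exceeds(audit_results: Sequence[bool], tolerance: float) -> bool:
    """audit_results holds True for each sampled decision a reviewer judged correct.
    Alert when the observed error rate is above the agreed tolerance."""
    if not audit_results:
        raise ValueError("no audited decisions")
    errors = sum(1 for ok in audit_results if not ok)
    return errors / len(audit_results) > tolerance


# Hypothetical numbers: 10,000 decisions, a 1% sample, a 2% tolerance.
decisions = [f"decision-{i}" for i in range(10_000)]
to_review = sample_for_audit(decisions, rate=0.01)
print(len(to_review))                                                  # → 100
# Suppose reviewers found 3 bad calls in those 100:
print(error_rate_exceeds([True] * 97 + [False] * 3, tolerance=0.02))   # → True
```

A sample of 100 gives only a rough estimate, so in practice you would also oversample high-risk decisions and track the rate over time rather than reacting to a single batch.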

Think of aviation. Autopilot handles 90% of a flight. Pilots exist for takeoff, landing, emergencies, and the judgment calls that no algorithm can anticipate. Nobody says autopilot made pilots useless. It made flying safer and let pilots focus on what actually required human expertise.

That's exactly where we're going.

What to Watch Out For (The Real Risks)

I won't sugarcoat this. The transition carries real dangers if you rush it.

The famous AutoGPT experiments showed what happens when agents go unconstrained: they looped in circles, pursued irrelevant sub-tasks, and required human intervention to course-correct. When SaaStr founder Jason Lemkin gave Replit's AI coding agent access to a production database during a code freeze, the agent ignored explicit instructions and deleted the database. McDonald's spent three years and millions of dollars on an AI ordering system that couldn't handle accents and background noise, and pulled the whole thing in June 2024. (Source)
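The AutoGPT failure mode, an agent circling the same sub-task, is one of the easier ones to guard against mechanically. Here is a minimal sketch, with an arbitrary step budget and repeat limit chosen for illustration: the guard sees every action the agent proposes and halts the run, handing it to a human, when either limit is hit.

```python
from collections import Counter
from typing import Optional


class LoopGuard:
    """Halts an agent run that exceeds its step budget or keeps repeating
    the same action, so a human can take over before it spirals."""

    def __init__(self, max_steps: int = 20, max_repeats: int = 3) -> None:
        self.max_steps = max_steps
        self.max_repeats = max_repeats
        self.steps = 0
        self.seen: Counter = Counter()

    def check(self, action: str) -> Optional[str]:
        """Record one proposed action; return a reason to halt, or None to continue."""
        self.steps += 1
        self.seen[action] += 1
        if self.steps > self.max_steps:
            return f"step budget of {self.max_steps} exceeded"
        if self.seen[action] > self.max_repeats:
            return f"repeated {self.seen[action]} times: {action}"
        return None


# Limits and actions are arbitrary examples.
guard = LoopGuard(max_steps=10, max_repeats=2)
halt_reason = None
for action in ["search: pricing", "read: page-1", "search: pricing", "search: pricing"]:
    halt_reason = guard.check(action)
    if halt_reason:
        break
print(halt_reason)  # → repeated 3 times: search: pricing
```

Matching exact action strings only catches literal repeats; real agents tend to loop with small variations, so a production guard would normalize actions or compare them more loosely. Hard limits like these also live outside the model, which is the point: unlike an instruction in a prompt, the agent cannot ignore them.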

The lesson isn't "don't go agentic." The lesson is don't mistake speed for readiness.

The companies succeeding here are doing three things right:

- Starting narrow — task-specific agents doing one job well, not one giant agent doing everything

- Building observability in from day one — tamper-proof logs, anomaly detection, clear audit trails

- Designing escalation paths — knowing exactly when the agent should stop and call a human

Where Docker Fits: The Infrastructure Layer the Agentic Shift Runs On

Here's the thing nobody is saying loudly enough: autonomous AI agents need a trustworthy runtime to live in. You can build the cleverest multi-agent system in the world, but if it runs without isolation, without governance, without the ability to push and pull agents like container images — you have a science experiment, not a production system.

This is precisely where Docker sits at the center of this shift. Not as a container tool bolted onto AI as an afterthought. As the foundational infrastructure layer that makes agentic AI safe, shareable, and production-ready.

Let me walk you through what that looks like in practice.

cagent — Build, Share, and Deploy Agent Teams the Docker Way

The most important thing Docker has shipped for the agentic era is cagent — an open-source multi-agent runtime built by Docker Engineering that ships pre-installed in Docker Desktop 4.49+.

The analogy I keep coming back to is this: just as Docker gave us Build, Ship, Run for containers, cagent gives you the same philosophy for AI agents. You define agent teams in simple YAML. You give each agent a model, a role, a set of tools, and a place in the hierarchy. Then you run them, push them to Docker Hub or any OCI registry, and pull them anywhere. No glue code. No complex wiring. Just agents as portable, versioned artifacts.

```yaml
agents:
  root:
    model: anthropic/claude-sonnet-4-5
    instruction: |
      Break down requests and delegate to specialists.
    sub_agents: ["researcher", "writer"]

  researcher:
    model: openai/gpt-5-mini
    description: Gather facts from the web
    toolsets:
      - type: mcp
        ref: docker:duckduckgo

  writer:
    model: anthropic/claude-sonnet-4-5
    description: Turn research into clear content
```

That's a three-agent content team in 15 lines of YAML. Push it to Docker Hub and anyone on your team can pull and run it in seconds:

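```bash
# Publish the team definition to Docker Hub as an OCI artifact
cagent push ./content-team.yaml ajeetraina777/content-team

# On any other machine: fetch the team, then run it
cagent pull ajeetraina777/content-team
cagent run ajeetraina777/content-team
```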

I've been running cagent workshops with the Collabnix community — most recently at the Cloud Native & AI Day meetup at LeadSquared, Bengaluru — and watching developers go from "What is a multi-agent system?" to running a functional Developer Agent, Financial Analysis Team, and Docker Expert Team in under two hours. The excitement in that room was real.

cagent supports OpenAI, Anthropic, Gemini, AWS Bedrock, Mistral, xAI, and Docker Model Runner for fully local, private inference. Swap providers without rewriting your system. That model-agnostic flexibility is what makes it enterprise-ready. (Source: Docker GitHub)

Docker Sandboxes — The Security Layer Agents Actually Need

The OpenClaw security story made one thing crystal clear: agents with system-level access need OS-level isolation, not application-level permission checks. Allowlists and pairing codes are a band-aid. Real isolation comes from the OS.

Docker answered this directly with Docker Sandboxes — microVM-based secure environments for running AI coding agents and personal assistants. Think of it as a hardened execution environment with three key guarantees:

- Filesystem isolation: agents can only see the workspace directory you explicitly mount, not your home directory or system files

- Credential management: API keys are injected via Docker's proxy and never stored inside the sandbox

- Disposability: nuke it and start fresh anytime with docker sandbox rm
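The credential guarantee follows a general pattern worth seeing in miniature. This is not Docker's proxy implementation, only a sketch of the idea under stated assumptions: the sandboxed agent sends requests without secrets, and a host-side function checks the destination against an allowlist, discards any credential the agent tried to attach, and adds the real one. The hostname and secret value are placeholders.

```python
from typing import Dict, Mapping, Tuple
from urllib.parse import urlsplit

# Host-side only: hostnames the sandbox may reach, and the credential header
# to attach for each. Hostname and secret value are placeholders.
HOST_CREDENTIALS: Dict[str, Tuple[str, str]] = {
    "api.example-llm.com": ("Authorization", "Bearer host-side-secret"),
}
CREDENTIAL_HEADERS = {"authorization", "x-api-key"}


def inject_credentials(url: str, headers: Mapping[str, str]) -> Dict[str, str]:
    """Rewrite an outbound request's headers on the host side of the boundary."""
    host = urlsplit(url).hostname
    if host not in HOST_CREDENTIALS:
        raise PermissionError(f"egress to {host!r} is not allowed")
    # Discard any credential the sandboxed agent tried to send itself.
    clean = {k: v for k, v in headers.items() if k.lower() not in CREDENTIAL_HEADERS}
    name, value = HOST_CREDENTIALS[host]
    clean[name] = value
    return clean


outbound = {"Content-Type": "application/json", "authorization": "Bearer guessed-key"}
print(inject_credentials("https://api.example-llm.com/v1/messages", outbound))
# → {'Content-Type': 'application/json', 'Authorization': 'Bearer host-side-secret'}
```

Because the real key only ever exists on the host side, a compromised agent can misuse the connection while it runs but has no key to steal and carry elsewhere.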

The timing here is not coincidental. Docker published the NanoClaw + Docker Sandboxes integration guide just days ago, showing exactly how to run a personal AI assistant inside a microVM: the agent is sandboxed at the OS level, credentials never touch the container, and the entire environment is reproducible and disposable. (Source: Docker Blog)

```bash
# Create an isolated sandbox for your AI agent
docker sandbox create --name my-agent shell ~/my-workspace
docker sandbox run my-agent
```

This isn't just about NanoClaw. The same pattern works for Claude Code, Codex, Gemini CLI, Kiro, and any agent that talks to AI APIs. Docker Sandboxes is the security foundation that makes running autonomous agents on real machines something you can actually trust.

NanoClaw — When the Community Builds on Docker to Solve OpenClaw's Security Problem

And then there's NanoClaw — possibly the most compelling proof point of Docker's role in the agentic ecosystem.

When developer Gavriel Cohen looked at OpenClaw and its 430,000+ lines of code, 52 modules, and application-level security, his reaction was blunt: "I cannot sleep peacefully when running software I don't understand and that has access to my life."

So he built NanoClaw. A lightweight WhatsApp AI assistant in under 700 lines of TypeScript. The entire security model? Docker containers. Each agent runs in its own isolated Linux container (Apple Container on macOS, Docker on Linux). Each WhatsApp group gets its own container with its own isolated filesystem and memory. A compromised agent can only reach what's mounted to its container — nothing else on your machine.
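The per-group isolation idea can be sketched with plain docker run flags. NanoClaw itself is written in TypeScript, and this Python sketch is not its actual invocation: the image name, the directory layout, and the assumption that group IDs are already safe slugs are all hypothetical. Each group gets its own container, with only its own directory mounted from the host.

```python
import shlex
from pathlib import Path
from typing import List


def container_command(group_id: str, workspace_root: Path,
                      image: str = "example/assistant-agent:latest") -> List[str]:
    """Build a docker run argv that gives one chat group its own container,
    with only that group's directory mounted from the host.
    Assumes group_id is already a safe slug; the image name is hypothetical."""
    workspace = workspace_root / group_id
    return [
        "docker", "run", "--rm",
        "--name", f"agent-{group_id}",
        "--read-only",                          # container root filesystem is immutable
        "--tmpfs", "/tmp",                      # writable scratch space, discarded on exit
        "--cap-drop", "ALL",                    # drop all Linux capabilities
        "--security-opt", "no-new-privileges",  # block privilege gain via setuid binaries
        "-v", f"{workspace}:/workspace",        # the only host path the agent can see
        "-w", "/workspace",
        image,
    ]


for group in ["family-chat", "work-team"]:
    print(shlex.join(container_command(group, Path("/srv/agents"))))
```

Network access is left on because the assistant still has to reach its model API. Whatever a compromised agent does inside its container, the only host files it can touch are those in its own group's directory.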

NanoClaw uses Docker as the security boundary, not a permission list. That's the right instinct, and the developer community noticed — VentureBeat covered it, it hit the front page of Hacker News, and it's already being used in production by Qwibit, Cohen's AI go-to-market agency, to run their sales pipeline autonomously. (Source: VentureBeat)

This is what Docker's role in the agentic shift looks like in practice: not just the platform Docker ships, but the instinct the entire developer community reaches for when they want to make AI agents safe.

The Full Stack: Docker's Agentic AI Toolkit

Put it all together and Docker gives you a complete, production-grade stack for the agentic era:

| Tool | What It Does |
|---|---|
| cagent | Define, run, push and pull multi-agent teams as YAML artifacts via any OCI registry |
| Docker Model Runner | Run LLMs locally alongside your containers, with no cloud dependency and full privacy |
| Docker MCP Gateway | Give agents secure, auditable access to external tools and APIs via MCP |
| Docker Sandboxes | microVM-based OS-level isolation for running agents safely on real machines |
| Docker Hardened Images | Minimal, CVE-reduced base images for building trustworthy AI workloads (now free) |

The architecture of autonomous AI isn't magic. It's the same thinking that made microservices work — small, focused, composable, and observable components — applied to AI reasoning. Agents are the new microservices. And Docker is the platform that makes them production-ready.

Your 3-Step Readiness Checklist for the Agentic Shift

Before you dive in, ask yourself these three questions:

1. Do you have observability? Can you see what your agents are doing, why they made a decision, and what happened as a result? If not, start there. You can't govern what you can't see.

2. Have you defined your autonomy boundaries? Which decisions can agents make independently? Which require escalation? Which must always have a human sign-off? Write these down before you build.

3. Are your people ready for new roles? The biggest failure mode isn't the technology — it's the change management. Teams that embrace agents as collaborators succeed. Teams that fear replacement resist and stall the whole initiative.
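Question 2, like the team-lead advice about when an agent acts, escalates, or stops, can be written down as code rather than a wiki page. Here is a minimal sketch, assuming a hypothetical customer-support agent with an action allowlist, a refund ceiling, and a self-reported confidence score; all three thresholds are illustrative.

```python
from dataclasses import dataclass
from enum import Enum
from typing import FrozenSet


class Decision(Enum):
    ACT = "act"             # inside the boundary: the agent proceeds on its own
    ESCALATE = "escalate"   # allowed in principle, but a human must sign off on this one
    STOP = "stop"           # outside the agent's mandate entirely


@dataclass(frozen=True)
class Boundary:
    allowed_actions: FrozenSet[str]
    max_amount: float       # ceiling for autonomous spend or refunds
    min_confidence: float   # below this self-reported confidence, ask a human


def decide(boundary: Boundary, action: str, amount: float, confidence: float) -> Decision:
    if action not in boundary.allowed_actions:
        return Decision.STOP
    if amount > boundary.max_amount or confidence < boundary.min_confidence:
        return Decision.ESCALATE
    return Decision.ACT


# A hypothetical support agent's boundary, written down before it ships.
support = Boundary(frozenset({"send_reply", "issue_refund"}), max_amount=100.0, min_confidence=0.9)

print(decide(support, "issue_refund", amount=40, confidence=0.95))    # → Decision.ACT
print(decide(support, "issue_refund", amount=400, confidence=0.95))   # → Decision.ESCALATE
print(decide(support, "delete_account", amount=0, confidence=0.99))   # → Decision.STOP
```

Model confidence scores are often poorly calibrated, so the hard limits (the allowlist and the amount ceiling) tend to do more of the real work than the confidence threshold. Keeping the boundary in version-controlled code also gives you a reviewable record of who changed it and when.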

The Bottom Line

The era of "human in the loop" as we've known it is ending. Not because humans don't matter — but because the way humans add value is fundamentally evolving.

The organizations that thrive in the next five years won't be those that held on to manual oversight of every AI action. They'll be the ones that learned to design trustworthy systems, define clear boundaries, and position their people to handle what machines genuinely cannot.

The agentic shift isn't coming. It's here. The question is whether you're ready to architect your way through it — or whether you'll still be manually approving AI outputs while your competitors run laps around you.

At Docker, we're not just watching this shift happen. We're building the platform it runs on — from cagent for multi-agent orchestration, to Docker Sandboxes for secure execution, to Model Runner for local-first inference, to Hardened Images for trusted workloads. If you want to get hands-on, check out the Collabnix cagent docs or join the next workshop. The runway is ready. Are you ready to fly?

Ajeet Singh Raina is a Docker Captain and Community Content Marketing Specialist at Docker Inc. He leads the Collabnix community with 17,000+ members across Slack and Discord, and speaks globally on DevOps, containers, and AI agent architectures. Follow him at @ajeetraina.

References

- SiliconANGLE — Human-in-the-loop has hit the wall. It's time for AI to oversee AI

- Amazon Web Services — The Rise of Autonomous Agents: What Enterprise Leaders Need to Know

- MIT Sloan Management Review & BCG — The Emerging Agentic Enterprise

- Analytics India Mag — Human-in-the-Loop Is Out, Agent-in-the-Loop Is In

- Fortune — Agentic AI Systems Must Have 'a Human in the Loop,' Says Google Exec

- Built In — How Human-in-the-Loop Is Evolving with AI Agents

- UST — Agentic AI and the Human-Centered Future of Autonomy

- Medium / Rajesh Srivastava — Why Agent-Assist Is Usually Smarter Than Fully Automated Agentic AI

- CNBC — From Clawdbot to Moltbot to OpenClaw: Meet the AI Agent Generating Buzz and Fear Globally

- Wikipedia — OpenClaw

- CrowdStrike — What Security Teams Need to Know About OpenClaw, the AI Super Agent

- Nature — OpenClaw AI Chatbots Are Running Amok — These Scientists Are Listening In

- IBM — OpenClaw, Moltbook and the Future of AI Agents

- DigitalOcean — What Is OpenClaw? Your Open-Source AI Assistant for 2026

- GitHub — OpenClaw Repository

- OpenClaw Official — openclaw.ai

- Docker Docs — cagent: Build, Share and Deploy AI Agent Teams

- Docker GitHub — docker/cagent: Agent Builder and Runtime

- Docker Blog — How to Build a Multi-Agent AI System Fast with cagent

- Docker Blog — Run NanoClaw in Docker Shell Sandboxes

- VentureBeat — NanoClaw Solves One of OpenClaw's Biggest Security Issues

- GitHub — qwibitai/nanoclaw: Container-Isolated AI Agents

- Trending Topics — NanoClaw Challenges OpenClaw with Container-Isolated AI Agents

- Collabnix — Introduction to Docker cagent

- Collabnix — What is Docker cagent and What Problem Does It Solve?

- ajeetraina.com — Let's Build, Share and Deploy Agents: The Docker cagent Workshop

- ajeetraina.com — Beyond ChatBots: A Day of Agentic AI, Docker cagent, and Community Energy at LeadSquared