Understanding Claude's Conversation Compacting: A Deep Dive into Context Management

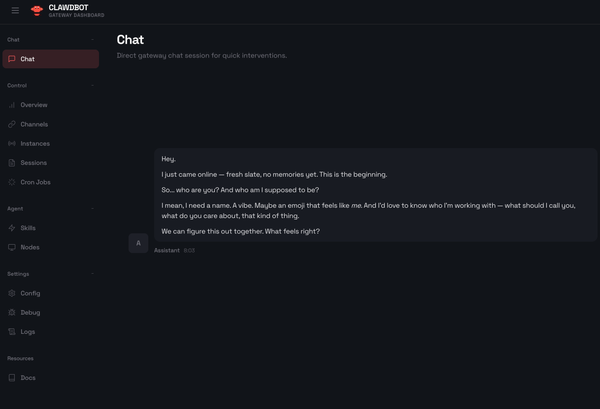

Ever seen "Compacting our conversation so we can keep chatting..." in Claude? It's not a bug; it's a feature that lets you carry on 100k+ token conversations while keeping the important context. Here's how to make the most of it for complex Docker and AI projects.

If you've ever had a deep technical conversation with Claude and suddenly seen a progress bar saying "Compacting our conversation so we can keep chatting...", you've encountered one of the most interesting features in modern AI assistants: automatic context window management.

As someone who regularly uses Claude + MCP for documentation validation, UAT, AI agent development, and technical problem-solving, I've seen this compacting feature kick in dozens of times. Today, I'll explain what it is, why it matters, and share best practices for managing long technical conversations effectively.

What is Conversation Compacting?

Conversation compacting is Claude's automatic context optimization feature that activates when a conversation approaches the token limit (context window). Instead of forcing you to start a new chat and lose all your context, Claude intelligently compresses earlier parts of the conversation while preserving critical information.

How It Works

┌─────────────────────────────────────────────────────────┐
│                  Conversation Timeline                  │
├─────────────────────────────────────────────────────────┤
│  [Oldest Messages] → [Middle Messages] → [Recent]       │
│     Compressed          Compressed      Preserved       │
│          ↓                   ↓              ↓           │
│     Summarized          Summarized     Full Detail      │
└─────────────────────────────────────────────────────────┘

From what I've observed, the process appears to involve:

- Analysis: Claude scans the entire conversation history

- Prioritization: Identifies key information, decisions, code snippets, and important context

- Compression: Summarizes older messages while maintaining semantic meaning

- Preservation: Keeps recent messages intact for immediate context

- Optimization: Removes redundant information and verbose explanations
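The steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a description of Claude's actual implementation: the `Message` type, the `keep_recent` parameter, and the `summarize` stub (which only truncates text rather than summarizing it) are all hypothetical names introduced for the example.

```python
from dataclasses import dataclass


@dataclass
class Message:
    role: str  # "user", "assistant", or "system"
    text: str


def summarize(messages: list[Message], max_chars: int = 80) -> str:
    """Hypothetical stand-in for a real summarizer: keep the start of each message."""
    return " | ".join(f"{m.role}: {m.text[:max_chars]}" for m in messages)


def compact(history: list[Message], keep_recent: int = 4) -> list[Message]:
    """Replace everything except the last `keep_recent` messages with one summary."""
    split = len(history) - keep_recent
    if split <= 0:
        return list(history)  # nothing old enough to compress yet
    older, recent = history[:split], history[split:]
    summary = Message("system", "Summary of earlier conversation: " + summarize(older))
    return [summary] + recent


history = [Message("user", f"message {i}") for i in range(10)]
compacted = compact(history, keep_recent=4)
print(len(compacted))      # 5: one summary plus four recent messages
print(compacted[-1].text)  # message 9
```

The point of the sketch is the shape of the result: older turns collapse into a single summary entry, while the most recent turns pass through untouched.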

When Does It Trigger?

In my experience, compacting typically activates when:

- The conversation passes roughly 50,000 tokens (about 37,500 words, at around 0.75 words per token)

- You've had extensive technical discussions with code examples

- You've uploaded and analyzed multiple files

- You've worked through complex problem-solving sessions with iterative refinements

- You've created long-form documentation or tutorials
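To get a feel for when a conversation is approaching that size, a rough estimate is enough. The sketch below assumes the ratio implied by the figures above (50,000 tokens for about 37,500 words, so roughly 0.75 words per token) and treats 50,000 tokens as an observed trigger point rather than an official limit. The function names are hypothetical, and a whitespace word count is only an approximation of a real tokenizer.

```python
WORDS_PER_TOKEN = 0.75      # assumption: ratio implied by 37,500 words per 50,000 tokens
COMPACT_THRESHOLD = 50_000  # assumption: observed trigger point, not a documented limit


def estimate_tokens(text: str) -> int:
    """Rough token estimate from a whitespace word count."""
    words = len(text.split())
    return round(words / WORDS_PER_TOKEN)


def near_compacting(texts: list[str], threshold: int = COMPACT_THRESHOLD) -> bool:
    """True once the estimated total across all messages reaches the threshold."""
    return sum(estimate_tokens(t) for t in texts) >= threshold


print(estimate_tokens("word " * 37_500))  # 50000
```

Code-heavy conversations usually tokenize less efficiently than plain prose, so treat the estimate as a lower bound for technical chats.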

Real-World Triggers

From my experience, here are conversations that commonly trigger compacting:

High Token Consumers:

- Debugging complex Docker Compose configurations

- Multi-agent AI system architecture discussions

- Creating comprehensive documentation with code examples

- Analyzing and refactoring large codebases

- Multi-agent system documentation (such as the Rameshwaram Cafe example later in this article)

Why This Feature Matters

For Developers and Technical Users

- Continuity: Maintain context across long troubleshooting sessions

- Efficiency: No need to re-explain project context in new chats

- Knowledge Preservation: Key decisions and technical details remain accessible

- Productivity: Keep working without interruption

The Alternative (Without Compacting)

Without this feature, you'd face:

- Hitting hard token limits and losing context

- Starting new chats and re-explaining everything

- Losing the thread of complex technical discussions

- Fragmented documentation across multiple chats

Best Practices for Long Conversations

1. Structure Your Queries Strategically

✅ Do:

"I'm building a Docker Compose setup for a multi-agent AI system.
Here's what I need:
1. Redis for state management
2. PostgreSQL for persistence
3. Three agent containers
4. MCP Gateway integration
Let's start with the Redis configuration."

❌ Avoid:

"Help me with Docker stuff for AI agents and databases and maybe some other things too."

2. Use Clear Checkpoints

Mark major milestones in your conversation:

"Great! We've completed:
✓ Redis configuration
✓ PostgreSQL setup
✓ Agent 1 & 2 containers
Now moving to MCP Gateway integration."

This helps Claude identify important context boundaries during compacting.

3. Reference Previous Work Explicitly

When referring back to earlier discussion:

"Based on the Docker Compose configuration we created in the first part of this conversation, let's now add health checks to each service."

This creates clear semantic links that survive compression.

4. Save Critical Information Externally

For enterprise projects or complex configurations:

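# Save the validated, fully resolved configuration to a file
docker compose config > compose-validated.yml

# Document decisions
echo "Using Redis Alpine for lower memory footprint" >> decisions.md

# Export the resolved configuration as JSON
# (`docker compose convert` is a deprecated alias of `docker compose config`)
docker compose config --format json > final-compose.json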
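If you prefer to script the decision log rather than use `echo`, a small helper keeps entries dated and consistently formatted. This is a sketch only: `log_decision` is a hypothetical helper, the dates are arbitrary example values, and the demo writes to a temporary directory so it does not touch a real project.

```python
import tempfile
from datetime import date
from pathlib import Path
from typing import Optional


def log_decision(path: Path, decision: str, when: Optional[date] = None) -> str:
    """Append one dated bullet to a decisions file and return the line written."""
    when = when or date.today()
    line = f"- {when.isoformat()}: {decision}\n"
    with path.open("a", encoding="utf-8") as fh:
        fh.write(line)
    return line


# Demo in a temporary directory; the dates below are arbitrary examples.
with tempfile.TemporaryDirectory() as tmp:
    log_path = Path(tmp) / "decisions.md"
    log_decision(log_path, "Using Redis Alpine for lower memory footprint", date(2025, 1, 15))
    log_decision(log_path, "Redis maxmemory set to 256mb", date(2025, 1, 16))
    contents = log_path.read_text(encoding="utf-8")

print(contents)
```

Because the file is opened in append mode, earlier entries are never overwritten, which matches the `>>` redirection in the shell version.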

5. Break Complex Tasks Into Phases

Phase-Based Approach:

Phase 1: Architecture Design (Chat 1)
├── System requirements
├── Service dependencies
└── Initial Docker Compose structure

Phase 2: Implementation (Chat 2)
├── Container configurations
├── Network setup
└── Volume management

Phase 3: Testing & Optimization (Chat 3)
├── Integration testing
├── Performance tuning
└── Security hardening

6. Use Memory Features Strategically

Claude's memory system complements compacting:

- Store persistent project details in memory

- Document your coding preferences

- Save architectural decisions

- Note recurring patterns in your work

This information persists across all conversations, not just the current one.

7. Leverage File Creation Features

Create files for complex outputs:

"Create a comprehensive Docker Hardened Image guide as a markdown file with all the configurations we've discussed."

Files persist outside the chat history, so you can reference them later without pasting their contents back into the conversation.

Technical Deep Dive: What Gets Preserved?

High Priority (Almost Always Preserved)

- ✅ Explicit decisions and action items

- ✅ Code snippets that were validated or approved

- ✅ Error messages and their solutions

- ✅ Architecture diagrams and system designs

- ✅ Configuration files and their explanations

- ✅ Recent messages (last 10-15 exchanges)

Medium Priority (Summarized)

- 📝 Exploratory discussions

- 📝 Alternative approaches considered

- 📝 Detailed explanations of concepts

- 📝 Extended troubleshooting sequences

- 📝 Background information and context

Low Priority (Compressed Heavily)

- 📉 Repetitive explanations

- 📉 Verbose descriptions already acted upon

- 📉 Multiple iterations of the same code

- 📉 Casual conversation and greetings

- 📉 Redundant clarifications

Advanced Strategies for Power Users

1. Pre-Conversation Preparation

Create a context document:

# Project Context

- **Project**: Multi-Agent AI System
- **Stack**: Docker, Redis, PostgreSQL, Python
- **Goal**: Build 3 specialized agents with MCP integration
- **Constraints**: Must run on single host, < 8GB RAM

Reference this at conversation start, then update as needed.
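Since the context document gets updated as the project evolves, it can help to generate it from structured data instead of editing it by hand. The following is a minimal sketch; `render_context` is a hypothetical helper, and the field values are taken from the example above.

```python
def render_context(title: str, fields: dict[str, str]) -> str:
    """Render a short Markdown context document from labeled fields."""
    lines = [f"# {title}", ""]
    lines += [f"- **{label}**: {value}" for label, value in fields.items()]
    return "\n".join(lines) + "\n"


context = render_context(
    "Project Context",
    {
        "Project": "Multi-Agent AI System",
        "Stack": "Docker, Redis, PostgreSQL, Python",
        "Goal": "Build 3 specialized agents with MCP integration",
        "Constraints": "Must run on single host, < 8GB RAM",
    },
)
print(context)
```

Changing a constraint or adding a field then becomes a one-line edit, and the rendered text can be pasted at the start of each new conversation.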

2. Periodic Context Snapshots

Every 20-30 exchanges, create a summary:

"Let's create a checkpoint. Can you summarize what we've accomplished so far and the key decisions made?"

Save this summary to a file or separate note.
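If you drive conversations from a script or simply want a reminder, the cadence is easy to encode. In this sketch, `checkpoint_due` is a hypothetical helper and the default of 25 is just the midpoint of the 20-30 range suggested above.

```python
CHECKPOINT_PROMPT = (
    "Let's create a checkpoint. Can you summarize what "
    "we've accomplished so far and the key decisions made?"
)


def checkpoint_due(exchange_count: int, every: int = 25) -> bool:
    """True on every `every`-th exchange; 25 is an assumed midpoint of 20-30."""
    return exchange_count > 0 and exchange_count % every == 0


due = [n for n in range(1, 101) if checkpoint_due(n)]
print(due)  # [25, 50, 75, 100]
```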

3. Modular Conversations

Instead of one mega-conversation, use linked conversations:

Main Chat → "See conversation X for database schema"
├── Chat 1: Database Design
├── Chat 2: API Development
├── Chat 3: Frontend Integration
└── Chat 4: Deployment & CI/CD

4. Tag Important Messages

Add markers to critical information:

"[DECISION] Using Alpine-based images for all services
[CONFIG] Redis maxmemory set to 256mb
[SECURITY] TLS enabled for all inter-service communication"
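A side benefit of consistent tags is that they are easy to pull back out of an exported transcript. The sketch below assumes the three tags shown above and a plain-text transcript with one tagged statement per line; `extract_tagged` is a hypothetical helper name.

```python
import re
from collections import defaultdict

# Matches lines such as "[CONFIG] Redis maxmemory set to 256mb".
TAG_PATTERN = re.compile(r"^\[(DECISION|CONFIG|SECURITY)\]\s+(.*\S)\s*$")


def extract_tagged(transcript: str) -> dict[str, list[str]]:
    """Group tagged lines by tag; untagged lines are ignored."""
    found: dict[str, list[str]] = defaultdict(list)
    for raw in transcript.splitlines():
        match = TAG_PATTERN.match(raw.strip())
        if match:
            found[match.group(1)].append(match.group(2))
    return dict(found)


transcript = """\
[DECISION] Using Alpine-based images for all services
Some untagged discussion about base images.
[CONFIG] Redis maxmemory set to 256mb
[SECURITY] TLS enabled for all inter-service communication
"""
tagged = extract_tagged(transcript)
print(tagged["CONFIG"])  # ['Redis maxmemory set to 256mb']
```

The extracted entries make a ready-made starting point for a decisions file or the opening message of a follow-up chat.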

5. Use Artifacts for Large Outputs

Request artifacts for substantial content:

"Create a complete Docker Compose file as an artifact that includes all the services we've discussed."

Artifacts keep large outputs in one place that you can reference and revise, instead of repeating them across multiple messages.

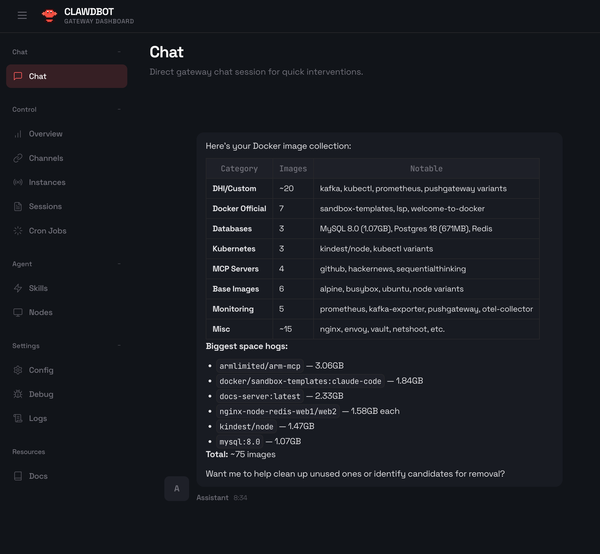

Real-World Example: Rameshwaram Cafe Multi-Agent System

Here's how I managed a 60,000+ token conversation while developing a multi-agent AI system modeled after Rameshwaram Cafe operations:

Initial Context (Tokens: ~5,000)

"I'm building a multi-agent system that mirrors Rameshwaram Cafe's operations:
- Order Taking Agent (Front Counter)
- Kitchen Management Agent (Food Preparation)
- Delivery Agent (Service)
- Inventory Agent (Stock Management)
Using Docker Compose to orchestrate these agents with Redis for state management and PostgreSQL for order history."

Development Phase (Tokens: ~40,000)

- Designed agent communication patterns (like cafe staff coordination)

- Created Docker Compose with 4 specialized agent services

- Implemented Redis pub/sub for real-time order updates

- Built PostgreSQL schema for menu, orders, and inventory

- Developed MCP integrations for each agent

- Created example workflows (order → prepare → deliver)
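To make the order → prepare → deliver flow concrete, here is a minimal sketch of the publish/subscribe pattern behind it. It is not the project's actual code: `InMemoryBus` is a tiny synchronous stand-in for Redis pub/sub, and the channel names (`orders.new`, `orders.ready`) and handler functions are hypothetical.

```python
from collections import defaultdict
from typing import Callable

Handler = Callable[[dict], None]


class InMemoryBus:
    """Tiny synchronous stand-in for Redis pub/sub, for illustration only."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Handler]] = defaultdict(list)

    def subscribe(self, channel: str, handler: Handler) -> None:
        self._subscribers[channel].append(handler)

    def publish(self, channel: str, message: dict) -> int:
        """Deliver to every subscriber and return how many received it."""
        handlers = list(self._subscribers[channel])
        for handler in handlers:
            handler(message)
        return len(handlers)


bus = InMemoryBus()
events: list[str] = []


def kitchen(order: dict) -> None:
    """Kitchen agent: prepare the order, then announce that it is ready."""
    events.append(f"prepare order {order['id']}")
    bus.publish("orders.ready", order)


def delivery(order: dict) -> None:
    """Delivery agent: pick up orders that the kitchen has finished."""
    events.append(f"deliver order {order['id']}")


bus.subscribe("orders.new", kitchen)
bus.subscribe("orders.ready", delivery)
bus.publish("orders.new", {"id": 1})
print(events)  # ['prepare order 1', 'deliver order 1']
```

Each agent only knows the channels it listens and publishes to, which is what lets the agents run as separate containers once a real broker replaces the in-memory bus.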

Compacting Triggered (At ~50,000 tokens)

Claude preserved:

- ✅ Final Docker Compose configurations for all agents

- ✅ Redis channel architecture for inter-agent communication

- ✅ PostgreSQL schema and relationships

- ✅ MCP tool definitions for each agent

- ✅ Critical workflow decisions (e.g., order priority handling)

Claude compressed:

- 📝 Initial brainstorming about cafe operations

- 📝 Multiple iterations of agent design

- 📝 Detailed explanations of microservices concepts

- 📝 Alternative approaches we considered

Post-Compacting (Continued to ~70,000 tokens)

- Added health checks for all services (like cafe kitchen status monitoring)

- Implemented circuit breakers for agent failures

- Created monitoring dashboards (Prometheus/Grafana)

- Developed load testing scenarios (lunch rush simulation)

- Built troubleshooting guides for common issues

Result: Complete multi-agent system with continuous context, using Rameshwaram Cafe as a real-world metaphor that made the architecture immediately understandable.

Comparison: Claude vs Other AI Assistants

| Feature | Claude | ChatGPT | Other AI |
|---|---|---|---|
| Automatic Compacting | ✅ Transparent | ⚠️ Hard limits | ❌ Varies |
| Context Preservation | ✅ Intelligent | ⚠️ Manual | ❌ Limited |
| Long Conversations | ✅ 100k+ tokens | ⚠️ ~32k tokens | ❌ ~4-8k |
| File Integration | ✅ Full support | ✅ Supported | ⚠️ Limited |
| Memory System | ✅ Cross-chat | ✅ Available | ❌ Rare |

Future of Context Management

Based on industry trends, expect:

- Larger Context Windows: 1M+ token windows becoming standard

- Better Compression: More sophisticated semantic preservation

- User Control: Manual compacting triggers and settings

- Context Sharing: Ability to share conversation contexts

- Advanced Memory: More granular control over what's remembered

- Multi-Modal: Better handling of images, code, and documentation

- Retrieval Augmentation: Dynamic loading of relevant past context

Conclusion

Conversation compacting is a powerful feature that enables deep, technical discussions with AI assistants without losing critical context. By understanding how it works and following best practices, you can have more productive conversations and build complex projects without starting over.

For Docker and DevOps workflows especially, where configurations are complex and decisions build on previous discussions, this feature is invaluable. Combined with good file management, Claude's memory feature, and structured communication, it lets you use Claude for extensive technical projects.

Try It Yourself

Want to see compacting in action? Work through these steps in a single conversation:

- Start a conversation about building a multi-service Docker application

- Iteratively develop Docker Compose configurations

- Add security hardening steps

- Create monitoring and logging setup

- Develop CI/CD integration

- Add troubleshooting guides

You'll likely trigger compacting and can observe how context is preserved!

Resources

- Claude Documentation: https://docs.anthropic.com

- Docker Compose Best Practices: https://collabnix.com

- Token Counting Tools: https://platform.openai.com/tokenizer (OpenAI's tokenizer; Claude uses a different one, so treat the counts as approximate)