What is Docker Offload and What Problem Does It Solve?

Docker Offload revolutionizes development by seamlessly extending your local Docker workflow to cloud GPU infrastructure. Same commands, cloud-scale performance. ⚡🚀

The landscape of modern software development is rapidly evolving, with AI/ML workloads, containerized applications, and resource-intensive builds becoming the norm rather than the exception. However, developers often find themselves constrained by local hardware limitations, struggling with insufficient CPU power, memory shortages, and most critically, lack of GPU access for machine learning workloads.

Enter Docker Offload — Docker's groundbreaking solution that seamlessly extends your familiar local development workflow into a powerful, cloud-based environment. This fully managed service represents a paradigm shift in how developers approach containerized development, offering the best of both worlds: local convenience with cloud-scale performance.

The Docker team announced Docker Offload at the WeAreDevelopers 2025 Developer Conference in early July 2025.

The Developer's Dilemma: Local Limitations vs. Cloud Complexity

Modern developers working on AI/ML applications face a fundamental challenge. Local development machines, while convenient, often lack the computational power needed for:

- Large Language Model (LLM) inference requiring significant GPU memory

- Docker builds for complex, multi-stage containerized applications

- Machine learning pipelines demanding specialized hardware acceleration

- Compute-intensive workloads that exceed local resource capabilities

Traditionally, developers had to choose between maintaining their familiar Docker Desktop workflow or migrating to complex cloud infrastructure setups. This created friction, reduced productivity, and often led to inconsistent development experiences across team members with varying hardware capabilities.

What is Docker Offload?

Docker Offload is Docker's answer to this challenge — a fully managed service that lets you execute Docker builds and run containers in the cloud while preserving your local development experience. Think of it as extending your local Docker Desktop into a scalable, GPU-enabled cloud environment without changing your workflow.

Key Capabilities That Set Docker Offload Apart

🚀 One-Click GPU Access

Access NVIDIA L4 GPUs instantly for AI/ML workloads and data processing tasks. No complex cloud setup and no CUDA installation headaches: just enable GPU support and you're ready to run Jupyter notebooks, train models, or execute GPU-accelerated containers.

⚡ Cloud-Powered Builds

Execute docker build commands on cloud infrastructure while your local machine remains free for other tasks. Your familiar Docker commands work exactly the same way, but the work runs on cloud hardware.

🔧 Seamless Integration

Same Docker commands, same workflow, same Docker Compose files, but with cloud execution. Docker Offload creates a secure SSH tunnel to a Docker daemon running in the cloud, making the remote infrastructure feel local.

🔒 Enterprise-Grade Security

All connections use secure SSH tunnels with encrypted data transfer. Each session runs in an isolated cloud environment that is automatically cleaned up after use, which helps with both security and cost.

Architecture: How Docker Offload Works Under the Hood

When you initiate Docker Offload, here's what happens behind the scenes:

- Secure Connection: Docker Desktop establishes an encrypted SSH tunnel to dedicated cloud resources

- Cloud Execution: Docker commands execute on remote BuildKit instances with powerful EC2 infrastructure

- Active Session Management: The connection remains active during container operations, supporting bind mounts and port forwarding

- Automatic Cleanup: After 30 minutes of inactivity, the environment automatically shuts down and cleans up all resources

This architecture ensures you get cloud-scale performance without sacrificing the local development experience you're accustomed to.
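The automatic cleanup step can be pictured as a simple idle timer. The sketch below is a hypothetical Python model of that behavior, not Docker's implementation: the class `OffloadSession` and its methods are invented for illustration, and only the 30-minute figure comes from the description above.

```python
from dataclasses import dataclass

# From the article: the environment shuts down after 30 minutes of inactivity.
IDLE_TIMEOUT_SECONDS = 30 * 60


@dataclass
class OffloadSession:
    """Hypothetical model of a session with an idle timeout."""

    last_activity: float  # seconds on any monotonic clock
    active: bool = True

    def touch(self, now: float) -> None:
        """Record activity (for example a build or a running container)."""
        if self.active:
            self.last_activity = now

    def reap_if_idle(self, now: float) -> bool:
        """Shut down once idle for the full timeout; return True if shut down."""
        if self.active and now - self.last_activity >= IDLE_TIMEOUT_SECONDS:
            self.active = False
        return not self.active


session = OffloadSession(last_activity=0.0)
session.touch(now=600.0)                  # activity after 10 minutes
print(session.reap_if_idle(now=2399.0))   # 1799 s idle -> False
print(session.reap_if_idle(now=2400.0))   # 1800 s idle -> True
```

The point of the model is that any activity resets the clock, so a session with a running container is never considered idle.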

Getting Started with Docker Offload

Prerequisites

- Docker Desktop 4.43.0 or later

- Active Docker account

- For GPU workloads: projects that can benefit from NVIDIA L4 GPU acceleration

Method 1: Using CLI

Open the terminal and run the following command:

docker offload

Usage: docker offload COMMAND

Docker Offload

Commands:

accounts Prints available accounts

diagnose Print diagnostic information

start Start a Docker Offload session

status Show the status of the Docker Offload connection

stop Stop a Docker Offload session

version Prints the version

Run 'docker offload COMMAND --help' for more information on a command.

Start a Docker Offload session

docker offload start

Choose your Docker Hub account when prompted and proceed.

Verify that a new `docker-cloud` context has been created:

docker context ls

NAME DESCRIPTION DOCKER ENDPOINT ERROR

default Current DOCKER_HOST based configuration unix:///var/run/docker.sock

desktop-linux Docker Desktop unix:///Users/ajeetsraina/.docker/run/docker.sock

docker-cloud * docker cloud context created by version v0.4.2 unix:///Users/ajeetsraina/.docker/cloud/docker-cloud.sock

tcd Testcontainers Desktop tcp://127.0.0.1:49496

By now, you should be able to print the available accounts through the CLI:

docker offload accounts

{

"user": {

"id": "15ee357d-XXXX-4d39-87d9-dc3b697b3392",

"fullName": "XXX",

"gravatarUrl": "",

"username": "XXX",

"state": "READY"

},

"orgs": [

{

"id": "57b45934-74c0-11e4-XXX-0242ac11001b",

"fullName": "Docker, Inc.",

"gravatarUrl": "https://www.gravatar.com/avatar/XXXXXa68e?s=80&r=g&d=mm",

"orgname": "docker",

"state": "READY"

},

{

"id": "96a075XXXXXX1a78b",

"fullName": "",

"gravatarUrl": "",

"orgname": "XXXX",

"state": "READY"

}

]

}
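The JSON above is easy to consume from a script. Here is a minimal Python sketch, assuming the output keeps the shape shown above (`user`, `orgs`, and a `state` field on each entry). The helper name `ready_accounts` is hypothetical, and the sample is a trimmed copy of the redacted output, not real account data.

```python
import json
from typing import List


def ready_accounts(accounts_json: str) -> List[str]:
    """Return the user name and org names whose state is READY."""
    data = json.loads(accounts_json)
    names = []
    user = data.get("user", {})
    if user.get("state") == "READY":
        names.append(user.get("username", ""))
    for org in data.get("orgs", []):
        if org.get("state") == "READY":
            names.append(org.get("orgname", ""))
    return names


# Trimmed, redacted sample in the same shape as the output above.
sample = """
{
  "user": {"username": "XXX", "state": "READY"},
  "orgs": [
    {"orgname": "docker", "state": "READY"},
    {"orgname": "XXXX", "state": "READY"}
  ]
}
"""
print(ready_accounts(sample))  # ['XXX', 'docker', 'XXXX']
```

In practice the input could come from running `docker offload accounts` through `subprocess.run(..., capture_output=True, text=True)`, on the assumption that the command prints only JSON to standard output.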

Check the status of Docker Offload:

docker offload status

Check the version:

docker offload version

Docker Offload v0.4.2 build at 2025-06-30

Stop the Docker Offload session:

docker offload stop

This command removes the `docker-cloud` instance from your system.
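Whether a session is currently active can be read off the `*` marker in the `docker context ls` listing shown earlier. The following is a minimal Python sketch that finds the active context in such a listing. The function name `current_context` is hypothetical, and the parser assumes the `*` appears as its own token right after the context name, as in the listing above.

```python
from typing import Optional


def current_context(context_ls_output: str) -> Optional[str]:
    """Return the context name marked with '*' in `docker context ls` output."""
    for line in context_ls_output.splitlines():
        tokens = line.split()
        # Assumption: the active context has '*' as the token after its name.
        if len(tokens) >= 2 and tokens[1] == "*":
            return tokens[0]
    return None


# Sample copied from the listing earlier in this article.
listing = """NAME DESCRIPTION DOCKER ENDPOINT ERROR
default Current DOCKER_HOST based configuration unix:///var/run/docker.sock
docker-cloud * docker cloud context created by version v0.4.2 unix:///Users/ajeetsraina/.docker/cloud/docker-cloud.sock
"""
print(current_context(listing))  # docker-cloud
```

If you only need the name interactively, `docker context show` prints the active context directly; the parser is mainly useful when you already have the listing as text.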

Method 2: Using Docker Dashboard

For users who prefer graphical interfaces:

- Navigate to Settings > Beta Features

- Enable Docker Offload

Once enabled, you will need to hit the toggle button to start Docker Offload.

You can check the status by running the following command:

docker offload status

You'll notice that Docker Offload appears in multiple places:

- Under Models

- On the left sidebar of the Docker Dashboard

Verify that you're using a cloud instance through Docker Offload:

docker info | grep -E "(Server Version|Operating System)"

Server Version: 28.0.2

Operating System: Ubuntu 22.04.5 LTS

You can verify the type of GPU that your remote instance is using:

docker run --rm --gpus all nvidia/cuda:12.4.0-runtime-ubuntu22.04 nvidia-smi

==========

== CUDA ==

==========

CUDA Version 12.4.0

Container image Copyright (c) 2016-2023, NVIDIA CORPORATION & AFFILIATES. All rights reserved.

This container image and its contents are governed by the NVIDIA Deep Learning Container License.

By pulling and using the container, you accept the terms and conditions of this license:

https://developer.nvidia.com/ngc/nvidia-deep-learning-container-license

A copy of this license is made available in this container at /NGC-DL-CONTAINER-LICENSE for your convenience.

Fri Jul 4 11:26:11 2025

+---------------------------------------------------------------------------------------+

| NVIDIA-SMI 535.247.01 Driver Version: 535.247.01 CUDA Version: 12.4 |

|-----------------------------------------+----------------------+----------------------+

| GPU Name Persistence-M | Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap | Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|=========================================+======================+======================|

| 0 NVIDIA L4 Off | 00000000:31:00.0 Off | 0 |

| N/A 44C P0 27W / 72W | 20200MiB / 23034MiB | 0% Default |

| | | N/A |

+-----------------------------------------+----------------------+----------------------+

+---------------------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=======================================================================================|

+---------------------------------------------------------------------------------------+

The output shows an NVIDIA L4 GPU with about 23 GB of memory (23034 MiB). In detail:

- GPU: NVIDIA L4 (well suited to AI/ML workloads)

- Memory: 23034 MiB total, 20200 MiB already allocated

- Driver: 535.247.01 with CUDA 12.4 support

- Current Usage: 0% (idle)
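The memory figures above can be extracted from the `nvidia-smi` table programmatically. This is a minimal Python sketch; the function name `gpu_memory_usage` is hypothetical, and the regular expression assumes the `used MiB / total MiB` layout shown in the output above.

```python
import re
from typing import Tuple


def gpu_memory_usage(smi_output: str) -> Tuple[int, int]:
    """Return (used_mib, total_mib) from the first 'NNNMiB / NNNMiB' pair."""
    match = re.search(r"(\d+)MiB\s*/\s*(\d+)MiB", smi_output)
    if match is None:
        raise ValueError("no memory usage field found")
    return int(match.group(1)), int(match.group(2))


# The GPU row from the nvidia-smi output above.
row = "| N/A 44C P0 27W / 72W | 20200MiB / 23034MiB | 0% Default |"
used, total = gpu_memory_usage(row)
print(f"{used / 1024:.1f} GiB of {total / 1024:.1f} GiB in use ({used / total:.0%})")
# 19.7 GiB of 22.5 GiB in use (88%)
```

For scripting, `nvidia-smi --query-gpu=memory.used,memory.total --format=csv` avoids parsing the table altogether.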

Troubleshooting Docker Offload

All the Docker Offload logs are available under the following location:

cd ~/.docker/cloud/logs

tail -f cloud-daemon.log

Hands-On Demo: Docker Offload in Action

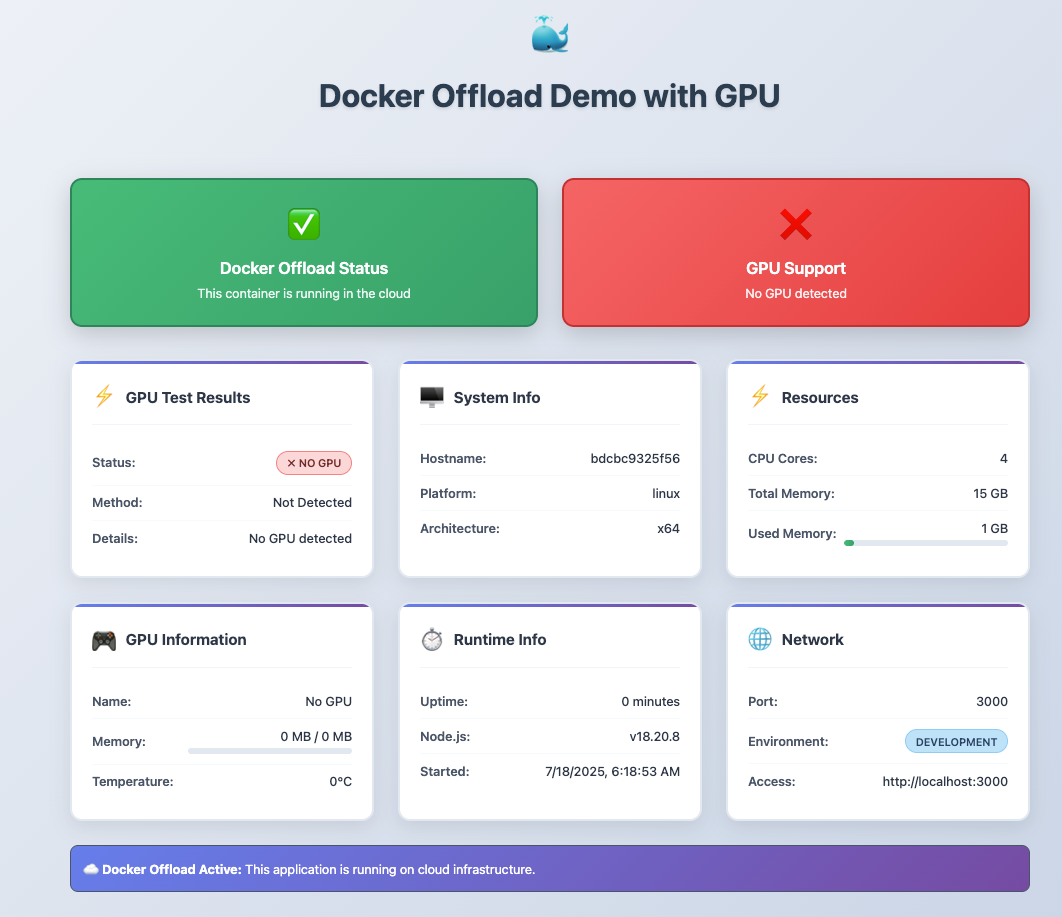

Before diving into various use cases, let's explore a practical demonstration that showcases Docker Offload's capabilities. The Docker Offload Demo is a Node.js web application specifically designed to demonstrate Docker Offload functionality with real-time monitoring and GPU detection.

Running the Docker Offload Demo

First, ensure Docker Offload is running:

# Start Docker Offload session

docker offload start

# Clone the demo repository

git clone https://github.com/ajeetraina/docker-offload-demo.git

cd docker-offload-demo

Basic Demo (Without GPU)

# Build the image in the cloud

docker build -t docker-offload-demo .

# Run the container

docker run --rm -p 3000:3000 docker-offload-demo

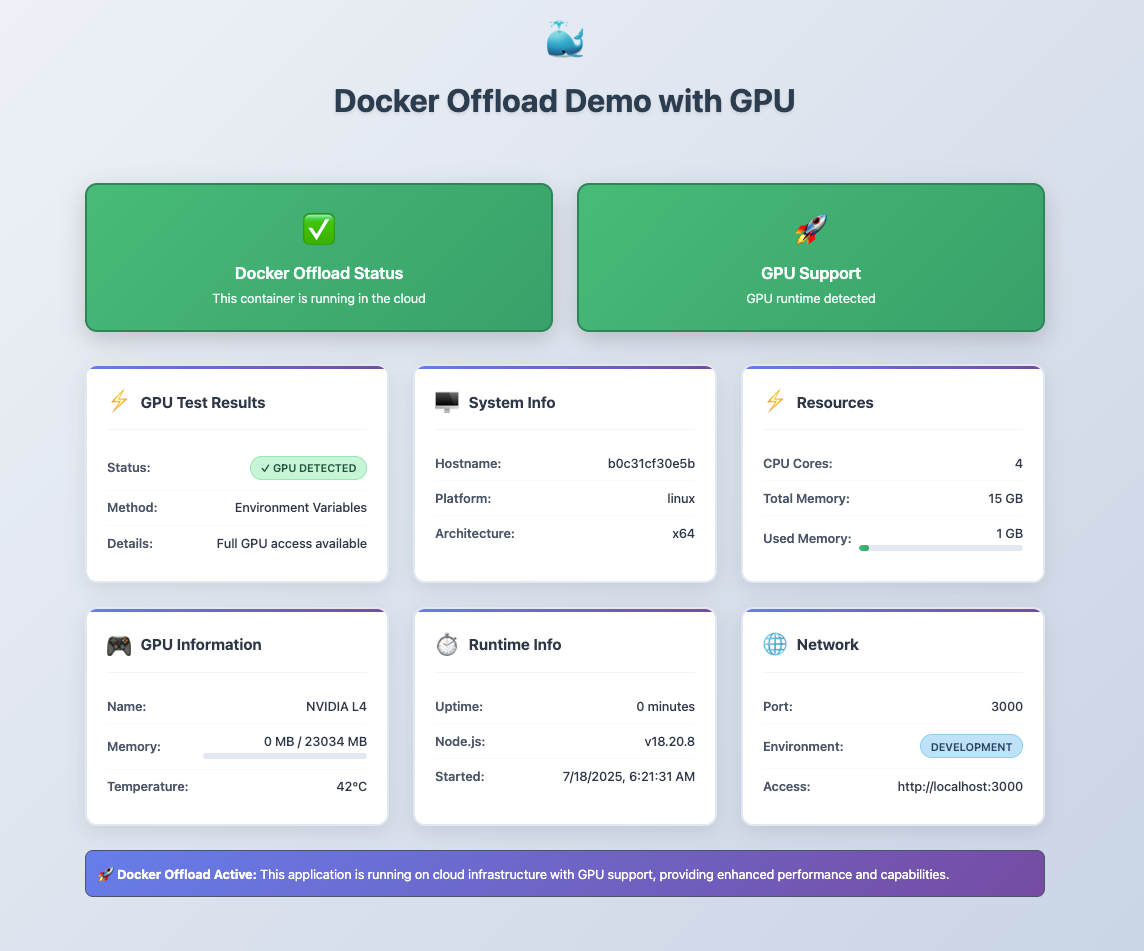

GPU-Enabled Demo

Stop the earlier container before running the following commands:

# Build with GPU support

docker build -t docker-offload-demo .

# Run with GPU access

docker run --rm --gpus all -p 3000:3000 docker-offload-demo

Result:

🐳 Docker Offload Demo running on port 3000

🔗 Access at: http://localhost:3000

📊 System Status:

🚀 Docker Offload: ENABLED

⚡ GPU Support: DETECTED

🖥️ GPU: NVIDIA L4

💾 Memory: 1GB / 15GB (5%)

🔧 CPU Cores: 4

⏱️ Uptime: 0 seconds

✅ Ready to serve requests!

What the Demo Shows

When you visit http://localhost:3000, the web interface displays:

- ✅ Docker Offload Confirmation: Validates you're running in the cloud environment

- 🚀 GPU Status and Information: Real-time GPU metrics when GPU support is enabled

- 🖥️ Cloud Instance System Details: Hardware specifications of your cloud instance

- ⚡ Resource Utilization: Live CPU, memory, and GPU usage monitoring

- 🕒 Runtime Information: Session duration and environment details

- 🌐 Network Status: Connection information and endpoint details

GPU Detection and Monitoring

The demo intelligently detects GPU availability through multiple methods:

- Environment Variables: Checks `NVIDIA_VISIBLE_DEVICES` and `CUDA_VISIBLE_DEVICES`

- Command Availability: Verifies `nvidia-smi` command presence

- Live Monitoring: Auto-refreshes GPU statistics every 30 seconds

- Resource Testing: Runs simple GPU computation tests to validate functionality
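The first two detection methods can be sketched in a few lines. The demo itself is a Node.js application, so the Python below is an illustration of the idea rather than the demo's code. The function name `detect_gpu` and the returned keys are hypothetical, and treating `none` and `void` as "no devices exposed" is an assumption based on common NVIDIA container runtime conventions.

```python
import os
import shutil
from typing import Mapping, Optional


def detect_gpu(env: Optional[Mapping[str, str]] = None) -> dict:
    """Report GPU hints from environment variables and the nvidia-smi binary."""
    env = os.environ if env is None else env
    visible = env.get("NVIDIA_VISIBLE_DEVICES") or env.get("CUDA_VISIBLE_DEVICES")
    # Assumption: unset, empty, "none", and "void" all mean no devices exposed.
    env_hint = bool(visible) and visible.lower() not in ("none", "void")
    # shutil.which returns None when nvidia-smi is not on PATH.
    smi_found = shutil.which("nvidia-smi") is not None
    return {
        "env_hint": env_hint,
        "nvidia_smi": smi_found,
        "likely_gpu": env_hint or smi_found,
    }


print(detect_gpu({"NVIDIA_VISIBLE_DEVICES": "all"})["env_hint"])  # True
```

Both signals are only hints: an environment variable can be set without a usable device, which is why the demo also runs a small computation test.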

Real-World Use Cases and Demonstrations

1. GPU-Accelerated Jupyter Lab for Machine Learning

Launch a complete ML development environment in seconds:

docker run --rm -p 8888:8888 --gpus all tensorflow/tensorflow:latest-gpu-jupyter

This single command provides:

- TensorFlow with GPU support

- CUDA libraries and cuDNN

- Complete scientific Python stack (NumPy, Pandas, Matplotlib)

- Jupyter Lab interface

- Access via Docker Dashboard port forwarding

2. Streamlit App with GPU Acceleration

Create an instant data science environment:

docker run --rm -p 8501:8501 --gpus all python:3.9 sh -c \
"pip install streamlit torch torchvision && streamlit hello --server.address=0.0.0.0"

Perfect for rapid prototyping of GPU-accelerated data applications.

3. Hugging Face Transformers Development

Set up an environment for state-of-the-art NLP and computer vision models:

docker run --rm -p 8080:8080 --gpus all python:3.9 sh -c \
"pip install transformers torch accelerate && python -c 'import transformers; print(\"Transformers GPU ready\")'"

This establishes the foundation for:

- Pre-trained model inference (BERT, GPT, CLIP)

- Distributed training with multiple GPUs

- Mixed-precision training optimization
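Note that the `python -c` one-liner in the command above only confirms that `transformers` imports; it does not touch the GPU. The following is a minimal Python sketch of an explicit check, assuming PyTorch is the backend (as in the `pip install` line above). The function name `cuda_report` is hypothetical; `torch.cuda.is_available()` and `torch.cuda.get_device_name()` are standard PyTorch calls.

```python
import importlib.util


def cuda_report() -> str:
    """Describe whether PyTorch is installed and can see a CUDA device."""
    if importlib.util.find_spec("torch") is None:
        return "torch not installed"
    import torch  # imported lazily so the function works without PyTorch

    if torch.cuda.is_available():
        return f"CUDA available: {torch.cuda.get_device_name(0)}"
    return "torch installed, CUDA not available"


print(cuda_report())
```

Run inside a container started with `--gpus all`, this should name the device; the exact string depends on the hardware the session was given.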

Conclusion

Docker Offload bridges the gap between local development convenience and cloud-scale capabilities, making powerful computing resources accessible to every developer. Whether you're training machine learning models, building complex applications, or simply need more computational power for your Docker builds, Docker Offload provides a seamless solution that integrates perfectly with your existing workflow.

The combination of familiar Docker commands, powerful cloud infrastructure, and GPU acceleration creates unprecedented opportunities for developers to innovate without constraints. As AI and machine learning continue to reshape software development, tools like Docker Offload ensure that every developer can participate in this transformation, regardless of their local hardware limitations.

Ready to experience cloud-scale development? Visit the official Docker documentation or check out the demo repository (https://github.com/ajeetraina/docker-offload-demo) to see Docker Offload in action.