Agentic AI

Collabnix AI Weekly - Edition 1

Your weekly digest of Cloud-Native AI and Model Context Protocol innovations.


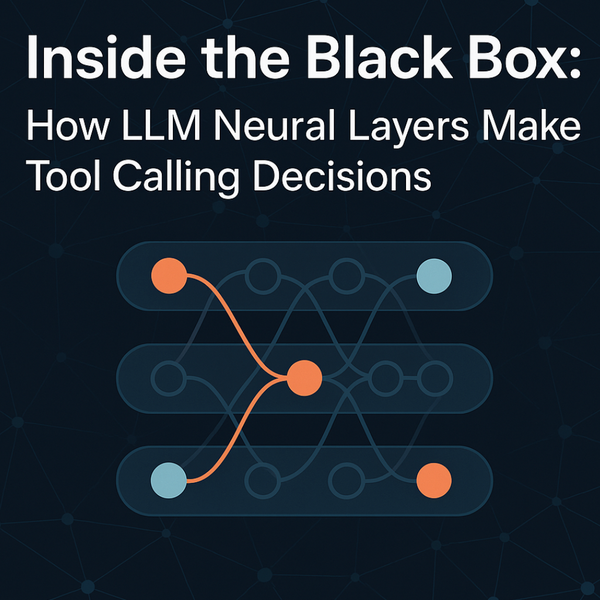

LLM

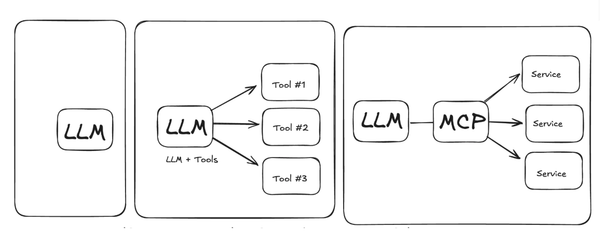

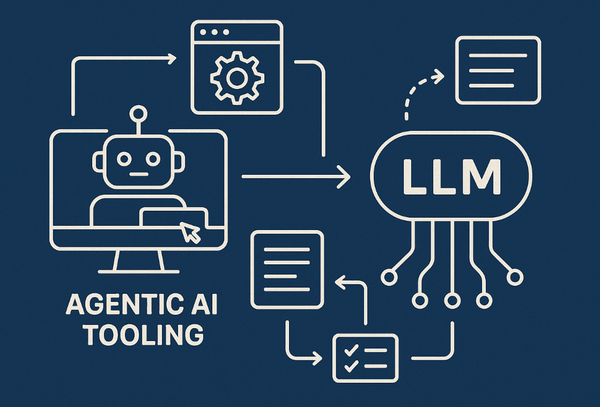

Ever wondered how AI 'decides' which tools to use? There's no magic, just 24+ neural layers working together. Discover what really happens inside LLMs when they make tool-calling decisions.

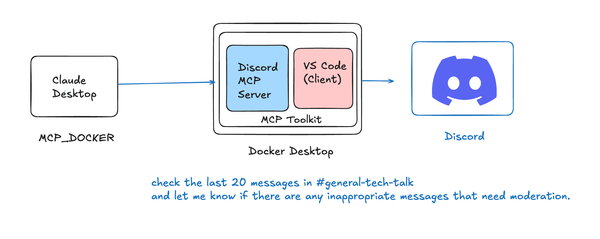

Docker MCP Toolkit

No coding required: connect Claude AI to Discord using the Docker MCP Toolkit for smarter community management.

Docker Compose

Specifying the top-level version attribute in a Compose file is redundant and can even trigger warnings.

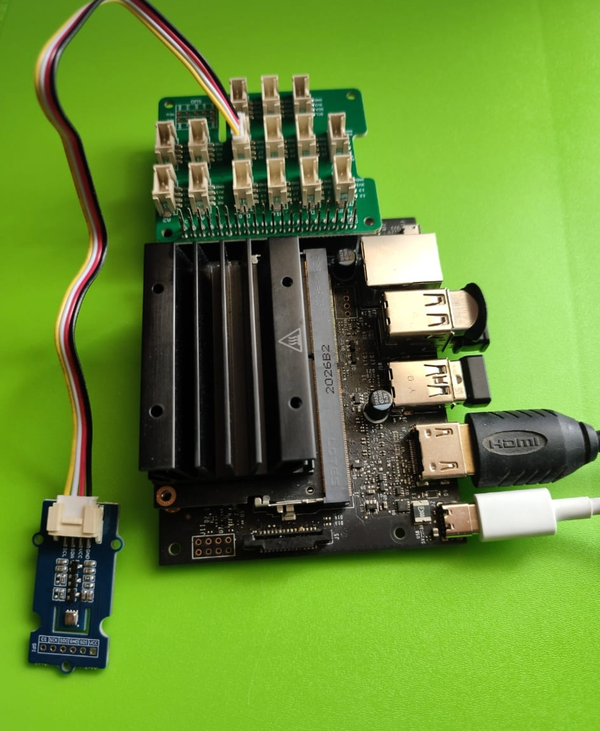

NVIDIA

First Look at NVIDIA Jetson Orin Nano Super: The Most Affordable Generative AI Supercomputer

Redis

Back in 2021, while working for Redis, I explored an exciting project related to Sensor Analytics and Visualisation.

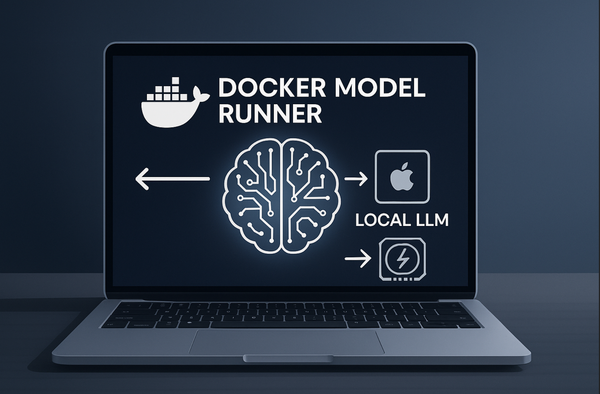

A GPU-accelerated LLM runner that enables developers to run AI models locally.

What if understanding AI's revolutionary progress was as simple as watching a chemistry student evolve from freshman to research scientist?

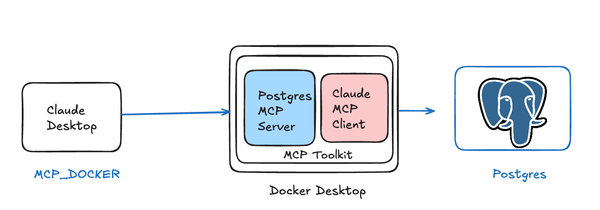

What if an AI assistant like Claude Desktop could query your database directly? I built a custom MCP server for Postgres that lets me ask Claude natural-language questions about my data, and it responds with real-time insights by running SQL queries behind the scenes.

Think AI is magic? Nope – it's actually like a really, REALLY good librarian!

Imagine telling your AI 'Plan my vacation to Japan' and having it automatically find the right tools for flights, hotels, and attractions without you specifying which tools to use. That's what MCP enables: AI agents that discover, connect to, and orchestrate external tools seamlessly.

Claude Desktop

So next time someone asks you, "How do I rank on Google?", challenge them with this instead: "How ready are you for AI agents?"