Getting Started with NVIDIA Jetson AGX Thor Developer Kit: A Complete Reference Guide

If you're building robots, you're going to want to hear about this.

NVIDIA just released Jetson Thor, and it's a beast. We're talking 2,070 teraflops of FP4 performance powered by their Blackwell architecture. What does that mean for you? You can finally run those demanding agentic AI systems, handle real-time sensor processing, and tackle complex robotics tasks—all on a single device.

And here's the thing—you're not alone in this space. There are over 2 million developers already working with NVIDIA's robotics tools. For companies ready to take their vision AI and robotics apps to the next level, Jetson Thor makes it possible to run multi-agent AI workflows right at the edge. Want to get your hands on one? Developer kits start at $3,499, or you can grab Jetson T5000 modules at $2,999 each if you're ordering 1,000 units.

So why should you care about the specs? Because robots are hungry—hungry for data and processing power. When you're juggling multiple sensors feeding data simultaneously, you need serious compute and memory to keep everything running smoothly with zero lag. That's where Jetson Thor shines. Compared to the previous Jetson Orin, we're looking at 7.5x more AI compute, 3.1x faster CPU performance, and twice the memory. All packed into a 130-watt package.

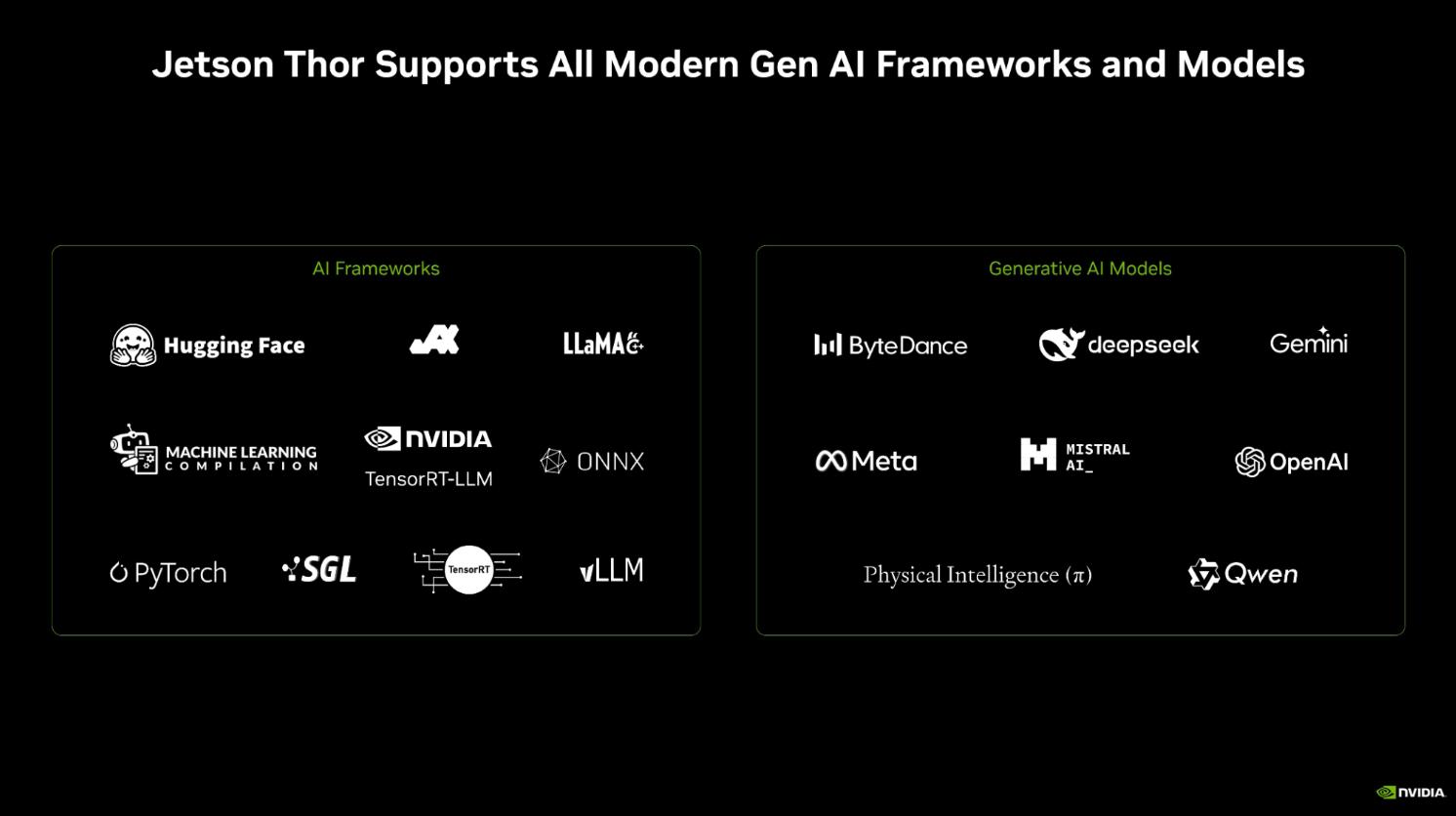

Edge AI is getting real. Think about it—2,070 TFLOPS of compute and 128 GB of memory means you can run sophisticated GenAI models directly on your robot. No cloud needed. No latency issues. The Blackwell GPU architecture isn't just powerful; it's smart about power too, delivering 3.5x better energy efficiency than the last generation. Plus, the software side is solid—full support for all the popular AI frameworks and GenAI models you're already using.

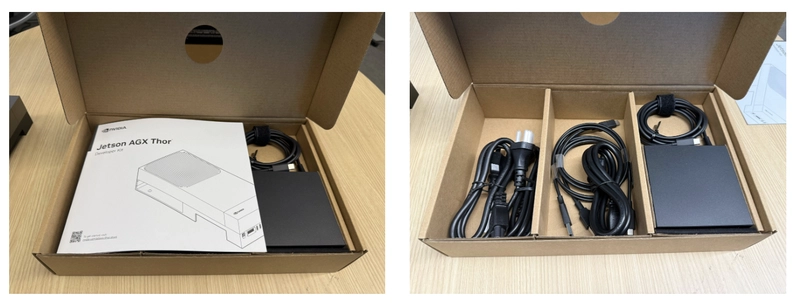

The Jetson Thor platform is available as the Jetson AGX Thor Developer Kit and the Jetson T5000 module. The developer kit comes pre-installed with an NVIDIA T5000 module, carrier board, heat sink, power supply, and a number of IO ports, providing a seamless journey from the development with developer kit to production with the T5000 modules. See detailed technical specifications for the NVIDIAJetson AGX Thor developer kit and the Jetson T5000 module

In this tutorial, I'll show you how to:

- Setup NVIDIA Jetson AGX Thor from scratch

- Run AI models using Docker Model Runner

This comprehensive guide walks you through setting up Docker Engine, Docker Desktop (optional), and Docker Model Runner on the NVIDIA Jetson Thor Developer Kit with full GPU support.

Key Configuration Details

| Component | Version/Details |

|---|---|

| Platform | NVIDIA Jetson Thor (ARM64) |

| GPU | NVIDIA Thor (Blackwell architecture) |

| GPU Driver | 580.00 |

| CUDA Version | 13.0 |

| Docker Model Runner | v0.1.44 |

| API Port | 12434 |

| Memory | 128 GB |

| AI Compute | 2070 FP4 TFLOPS |

Hardware Specifications

NVIDIA® Jetson Thor™ series modules deliver ultimate performance for physical AI and robotics:

Compute Performance

- 2070 FP4 TFLOPS of AI compute

- 128 GB of memory

- Power configurable between 40W and 130W

- 7.5x higher AI compute than NVIDIA AGX Orin™

- 3.5x better energy efficiency

GPU Architecture

- 2560-core NVIDIA Blackwell architecture GPU

- 96 fifth-gen Tensor Cores

- Multi-Instance GPU (MIG) with 10 TPCs

- Sensor Processing and Robotic AI Software Stack

CPU Configuration

- 14-core Arm® Neoverse®-V3AE 64-bit CPU

- 1 MB L2 cache per core

- 16 MB shared system L3 cache

Prerequisites

- NVIDIA Jetson Thor Developer Kit

- Bootable USB stick (for initial OS installation)

- Ubuntu host PC (for creating bootable USB)

- Display (HDMI or DisplayPort)

- USB keyboard and mouse

- Internet connection

- NVIDIA Developer account

Initial Setup and Installation

First Boot Experience

When you first power on your Jetson Thor, you may see the UEFI Interactive

UEFI Interactive Shell v2.2

EDK II

UEFI v2.70 (EDK II, 0x00010000)

Mapping table

FS1: Alias(s):F1:

MemoryMapped(0xB,0x1F04000000,0x1F042FFFFF)

FS0: Alias(s):F0:

FV(01F5F7B7-0C00-446E-8E3B-0E2D0FCB0DED)

BLK0: Alias(s):

VenHw(1E5A432C-0466-4D31-B009-D4D923271B3)/MemoryMapped(0xB,0x0BB08400000,0x0BB08463FFF)/Pc1Root(0x5)/Pc1(0x0,0x0)/Pc1(0x0,0x0)/NVMe(0x1,6C-45-E2-42-0B-44-1B-00)

Press ESC in 1 seconds to skip startup.nsh or any other key to continue.

Shell>_This means the system booted successfully but needs the operating system installed.

Creating a Bootable USB Stick

JetPack 7.0 introduces a simplified installation process without requiring a host Ubuntu PC for flashing.

Step 1: Download the Jetson ISO

Download the Jetson BSP installation media ("Jetson ISO") image file:

Direct Download Link: Jetson Installer ISO

Important: Do not simply copy the ISO file to the USB stick. You need to create a bootable USB using special software.

Step 2: Create Bootable USB with Balena Etcher

- Download and install Balena Etcher

- Launch Etcher

- Select the downloaded ISO image file

- Select your USB stick

- Click "Flash!"

Step 3: Boot from USB

- Connect a display via HDMI or DisplayPort

- Connect USB keyboard and mouse

- Insert the bootable USB stick into USB Type-A or USB-C port

- Connect power supply to one of the USB-C ports

- Press the power button (left button on the board)

Note: Jetson Thor is pre-configured to boot from USB if attached (priority over NVMe SSD).

When Jetson powers on, it will show pre-boot options. Press Enter or wait for timeout to boot from USB stick.

Installing Docker Engine

Step 1: Install Docker Engine

Use the Docker convenience script for ARM64 installation:

# Download and run the Docker installation script

curl -fsSL https://get.docker.com -o get-docker.sh

sudo sh get-docker.sh

# Add your user to docker group

sudo usermod -aG docker $USER

newgrp docker

# Verify Docker Engine is installed

docker --version

# Test Docker installation

docker run hello-world

```

**Expected Output:**

```

Docker version 27.x.x, build xxxxxInstalling Docker Desktop (Optional)

If you prefer Docker Desktop's GUI features:

Step 1: Download Docker Desktop

# Download Docker Desktop ARM64 package

wget https://desktop.docker.com/linux/main/arm64/docker-desktop-arm64.debStep 2: Install Docker Desktop

# Install Docker Desktop (docker-ce-cli must be installed first)

sudo apt install ./docker-desktop-arm64.debStep 3: Verify Installation

# Check Docker Desktop status

docker desktop status

# Start Docker Desktop if needed

systemctl --user start docker-desktopInstalling NVIDIA Container Toolkit (optional)

This is an optional step. This is only if you installed Jetson BSP with SDK Manager. You can skip this step if you installed Jetson BSP with “Jetson USB” installation USB stick method.

The NVIDIA Container Toolkit enables GPU access from Docker containers.

Step 1: Add NVIDIA Repository

# Install prerequisites

sudo apt-get update

sudo apt-get install -y ca-certificates curl gnupg

# Add NVIDIA Container Toolkit keyring

curl -fsSL https://nvidia.github.io/libnvidia-container/gpgkey | sudo gpg --dearmor -o /usr/share/keyrings/nvidia-container-toolkit-keyring.gpg --yes

# Add repository

echo "deb [signed-by=/usr/share/keyrings/nvidia-container-toolkit-keyring.gpg] https://nvidia.github.io/libnvidia-container/stable/deb/\$(ARCH) /" | sudo tee /etc/apt/sources.list.d/nvidia-container-toolkit.listStep 2: Install NVIDIA Container Toolkit

# Update package list

sudo apt-get update

# Install NVIDIA Container Toolkit

sudo apt-get install -y nvidia-container-toolkit

# Configure Docker daemon to use NVIDIA runtime

sudo nvidia-ctk runtime configure --runtime=docker

# Restart Docker Engine

sudo systemctl restart dockerAdd the default runtime to the Docker daemon configuration file, so that we don’t need to specify --runtime nvidia every time we run a container.

sudo apt install -y jq

sudo jq '. + {"default-runtime": "nvidia"}' /etc/docker/daemon.json | \

sudo tee /etc/docker/daemon.json.tmp && \

sudo mv /etc/docker/daemon.json.tmp /etc/docker/daemon.jsonLet’s make sure you have the following in your /etc/docker/daemon.json file.

$ cat /etc/docker/daemon.json

{

"runtimes": {

"nvidia": {

"args": [],

"path": "nvidia-container-runtime"

}

},

"default-runtime": "nvidia"

}It’s also recommended to add your username ($USER) to the docker group to avoid using sudo to run Docker commands.

sudo usermod -aG docker $USER

newgrp dockerAfter that, you may need to restart your terminal/session to apply the changes.

Docker Setup Test

Example 1: Run PyTorch container

docker run --rm -it \

-v "$PWD":/workspace \

-w /workspace \

nvcr.io/nvidia/pytorch:25.08-py3Once in the container, you can test PyTorch with GPU.

python3 <<'EOF'

import torch

print("PyTorch version:", torch.__version__)

print("CUDA available:", torch.cuda.is_available())

if torch.cuda.is_available():

print("GPU name:", torch.cuda.get_device_name(0))

x = torch.rand(10000, 10000, device="cuda")

print("Tensor sum:", x.sum().item())

EOFYou should see something like this:

PyTorch version: 2.8.0a0+34c6371d24.nv25.08

CUDA available: True

GPU name: NVIDIA Thor

Tensor sum: 50001728.0Verifying GPU Access

Test GPU Access from Container

# Run nvidia-smi inside a container

sudo docker run --rm --runtime=nvidia --gpus all ubuntu:22.04 nvidia-smi

```

### Expected Output

```

Thu Oct 30 07:46:51 2025

+-----------------------------------------------------------------------------------------+

| NVIDIA-SMI 580.00 Driver Version: 580.00 CUDA Version: 13.0 |

+-----------------------------------------+------------------------+----------------------+

| GPU Name Persistence-M | Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap | Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|=========================================+========================+======================|

| 0 NVIDIA Thor Off | 00000000:01:00.0 Off | N/A |

| N/A N/A N/A N/A / N/A | Not Supported | 0% Default |

| | | Disabled |

+-----------------------------------------+------------------------+----------------------+

+-----------------------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=========================================================================================|

| No running processes found |

+-----------------------------------------------------------------------------------------+This confirms:

- ✅ GPU: NVIDIA Thor detected

- ✅ Driver: 580.00

- ✅ CUDA: 13.0

- ✅ GPU access from containers: Working!

Setting Up Docker Model Runner

Docker Model Runner provides a local API to run AI models with GPU acceleration.

Method 1: Using Docker Model Command (Recommended)

Docker Model Runner is built into Docker Engine and can be managed via CLI.

Check Docker Model Runner Version

sudo docker model version

**Expected Output:**

Docker Model Runner version v0.1.44

Docker Engine Kind: Docker EngineCheck Model Runner Status

# Check if Model Runner is running

sudo docker model status

# List available commands

sudo docker model --helpStart Model Runner

# Start Model Runner on port 12434 (default)

sudo docker model start --port 12434List and Pull Models

# List available models

sudo docker model list

# Pull a model (example: SmolLM2)

sudo docker model pull ai/smollm2

# Monitor download progress

watch -n 2 'sudo docker model status'Method 2: Using Docker Container (Alternative)

If you prefer running Model Runner as a container:

# Pull the Docker Model Runner image

docker pull docker/model-runner:latest

# Verify the image

sudo docker images | grep model-runner

# Start Model Runner with GPU access

sudo docker run -d \

--name model-runner \

--runtime=nvidia \

--gpus all \

-p 12434:12434 \

-e NVIDIA_VISIBLE_DEVICES=all \

-e NVIDIA_DRIVER_CAPABILITIES=compute,utility \

-v model-runner-cache:/root/.cache \

docker/model-runner:latest

# Check logs

sudo docker logs -f model-runnerNote: The internal listening port is 12434, so map it accordingly: -p 12434:12434ajeetraina@ajeetraina:~$ sudo docker model version

Docker Model Runner version v0.1.44

Docker Engine Kind: Docker Engine

ajeetraina@ajeetraina:~$ sudo docker model status

265715e1384d: Pull complete Digest: sha256:f5ea90155a20b02d4bab896966299deaf0f73166e24be1245feb7aa93216541e

Status: Downloaded newer image for docker/model-runner:latest-cuda

Successfully pulled docker/model-runner:latest-cuda

Creating model storage volume docker-model-runner-models...

Starting model runner container docker-model-runner...

Docker Model Runner is running

Status:

llama.cpp: installingAPI Reference

Base URL

- From host processes:

http://localhost:12434/ - From containers:

http://model-runner.docker.internal:12434/(Docker Desktop) orhttp://172.17.0.1:12434/(Docker Engine)

curl http://localhost:12434/models

[]

ajeetraina@ajeetraina:~$Perfect! The API is responding correctly. The empty array [] means no models are loaded yet. Let's pull and load a model!

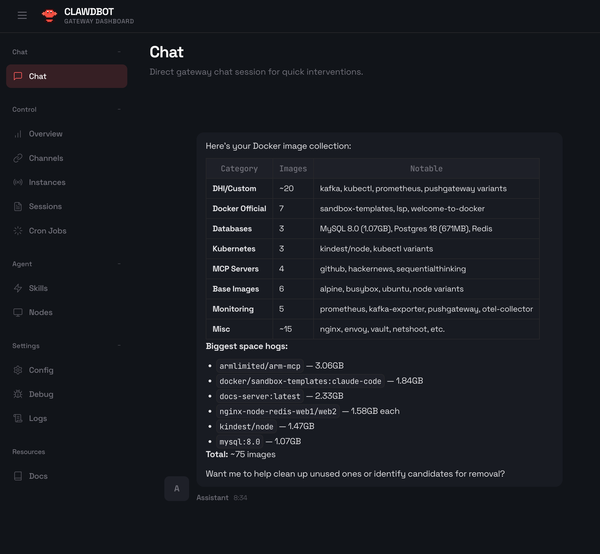

Let's pull a bunch of models:

docker model pull ai/qwen3:latest

docker model pull ai/smollm2:latestDocker Model Runner Endpoints

List Models

curl http://localhost:12434/modelsResult:

[{"id":"sha256:354bf30d0aa3af413d2aa5ae4f23c66d78980072d1e07a5b0d776e9606a2f0b9","tags":["ai/smollm2"],"created":1742816981,"config":{"format":"gguf","quantization":"IQ2_XXS/Q4_K_M","parameters":"361.82 M","architecture":"llama","size":"256.35 MiB"}}]

ajeetraina@ajeetraina:~$Get Specific Model

curl http://localhost:12434/models/{namespace}/{name}Create/Load Model

curl -X POST http://localhost:12434/models/create \

-H "Content-Type: application/json" \

-d '{

"model": "ai/smollm2"

}'Delete Model

curl -X DELETE http://localhost:12434/models/{namespace}/{name}OpenAI-Compatible Endpoints

Docker Model Runner supports OpenAI-compatible API endpoints:

List Models (OpenAI format)

curl http://localhost:12434/engines/llama.cpp/v1/modelsChat Completions

curl http://localhost:12434/engines/llama.cpp/v1/chat/completions \

> -H "Content-Type: application/json" \

> -d '{

> "model": "ai/smollm2:latest",

> "messages": [

> {

> "role": "user",

> "content": "What is Docker in one sentence?"

> }

> ]

> }'

{"choices":[{"finish_reason":"stop","index":0,"message":{"role":"assistant","content":"Docker is an open-source project that allows developers to create and run application containers using a lightweight operating system, making it easy to share and deploy applications."}}],"created":1761813028,"model":"ai/smollm2:latest","system_fingerprint":"b1-ca71fb9","object":"chat.completion","usage":{"completion_tokens":33,"prompt_tokens":37,"total_tokens":70},"id":"chatcmpl-EI4ClduOrSJO0GezSkNYzIHkdL4VndLU","timings":{"cache_n":0,"prompt_n":37,"prompt_ms":193.842,"prompt_per_token_ms":5.238972972972974,"prompt_per_second":190.87710609671794,"predicted_n":33,"predicted_ms":355.969,"predicted_per_token_ms":10.786939393939393,"predicted_per_second":92.704701813922}}ajeetraina@ajeetraina:~$Completions

curl http://localhost:12434/engines/llama.cpp/v1/completions \

-H "Content-Type: application/json" \

-d '{

"model": "ai/smollm2",

"prompt": "Docker is",

"max_tokens": 50

}'{"choices":[{"text":" a containerization platform that enables developers to build, run, and manage containers efficiently. It provides a runtime environment for containerized applications, making it easier to deploy and manage them.\n\nIn a Docker environment, images are created and managed by Docker","index":0,"logprobs":null,"finish_reason":"length"}],"created":1761813173,"model":"ai/smollm2","system_fingerprint":"b1-ca71fb9","object":"text_completion","usage":{"completion_tokens":50,"prompt_tokens":3,"total_tokens":53},"id":"chatcmpl-pBDeCqqpcUwY1ZsvN7nf9Pi0NvgPaHfl","timings":{"cache_n":0,"prompt_n":3,"prompt_ms":32.043,"prompt_per_token_ms":10.681,"prompt_per_second":93.62419249133977,"predicted_n":50,"predicted_ms":652.581,"predicted_per_token_ms":13.05162,"predicted_per_second":76.61884118599836}}ajeetraina@ajeetraina:~$Embeddings

curl http://localhost:12434/engines/llama.cpp/v1/embeddings \

-H "Content-Type: application/json" \

-d '{

"model": "ai/smollm2",

"input": "Docker containerization technology"

}'Monitoring and Troubleshooting

Monitor GPU Usage

Using nvidia-smi

# Real-time GPU monitoring (updates every 2 seconds)

watch -n 2 nvidia-smi

# Single snapshot

nvidia-smiUsing jtop (Jetson-specific)

# Install jtop if not already installed

sudo pip3 install jetson-stats

sudo reboot

# Run jtop for comprehensive monitoring

sudo jtopCheck GPU Temperature and Power

# GPU temperature

nvidia-smi --query-gpu=temperature.gpu --format=csv

# Power draw

nvidia-smi --query-gpu=power.draw --format=csv

# All metrics

nvidia-smi --query-gpu=index,name,driver_version,memory.total,memory.used,memory.free,temperature.gpu,utilization.gpu --format=csvReal-World Applications

| Project Name | Description and Links |

| Dentescope AI - a production-ready dental X-ray analysis system capable of detecting and measuring teeth with surgical precision | GitHub URL: https://github.com/ajeetraina/dentescope-ai-complete/tree/main Live Demo: https://huggingface.co/spaces/ajeetsraina/dentescope-ai Blog URL: https://www.ajeetraina.com/building-production-grade-dental-ai-from-auto-annotation-to-99-5-accuracy-with-yolov8-and-nvidia-infrastructure/ |

| Clinical Dental Pathology Detector | GitHub URL: https://github.com/ajeetraina/dentescope-ai-complete/tree/main Live Demo: https://huggingface.co/spaces/ajeetsraina/dental-width-detector-app |