Inside the Black Box: How LLM Neural Layers Make Tool Calling Decisions

Ever wondered how AI 'decides' which tools to use? There's no magic, just 24+ neural layers working together. Discover what really happens inside LLMs when they make tool calling decisions.

When developers hear that large language models can "decide" which tools to use, it often feels like magic. Understanding what's actually happening inside these neural networks is crucial for developers working with AI tool calling.

The truth is, there's no magic—just sophisticated multi-layer processing that transforms user prompts and tool descriptions into intelligent decisions.

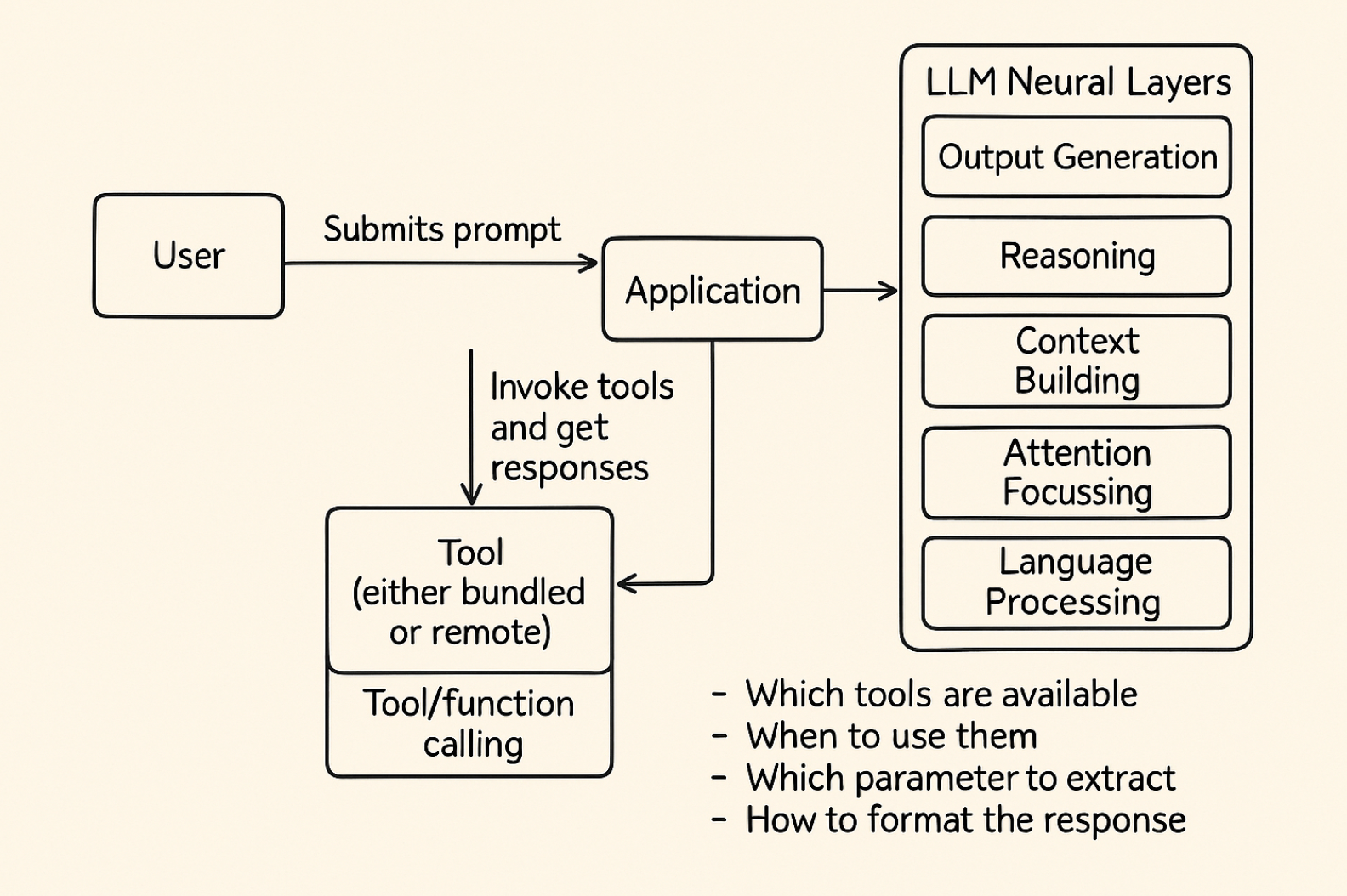

Modern LLMs such as GPT-4 or Claude are built from dozens of stacked neural network layers, and each layer contributes to the decision-making process.

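At the foundation, an input embedding layer converts the tokens of your prompt and tool descriptions into numerical vectors that the network can process. These vectors then flow upward through the stack. As a simplification, it helps to think of the early layers as recognizing language patterns, the attention mechanisms as focusing on relevant information, and the later layers as building semantic relationships between user intent and available tools. In an actual transformer, every layer contains both attention and feed-forward components, so these roles overlap rather than living in cleanly separated layers.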
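To make the embedding step concrete, here is a minimal sketch of a token-to-vector lookup. The vocabulary, the whitespace tokenizer, and the random vector values are all assumptions for illustration; real models use learned subword tokenizers and learned embedding tables with far more entries and dimensions.

```python
import random

# Hypothetical toy vocabulary; real tokenizers have tens of thousands of entries.
VOCAB = {"what": 0, "is": 1, "the": 2, "weather": 3, "in": 4, "paris": 5, "<unk>": 6}
EMBED_DIM = 4

rng = random.Random(0)  # fixed seed so the sketch is reproducible
# One vector per vocabulary entry. In a trained model these values are learned.
EMBEDDING_TABLE = [
    [rng.uniform(-1.0, 1.0) for _ in range(EMBED_DIM)] for _ in VOCAB
]


def tokenize(text):
    """Lowercase whitespace split; a stand-in for a real subword tokenizer."""
    return [VOCAB.get(word, VOCAB["<unk>"]) for word in text.lower().split()]


def embed(text):
    """Map each token id to its row in the embedding table."""
    return [EMBEDDING_TABLE[token_id] for token_id in tokenize(text)]


vectors = embed("What is the weather in Paris")
print(len(vectors), "tokens, each a vector of length", len(vectors[0]))
```

The point of the sketch is only that text becomes a sequence of vectors before any layer sees it; tool descriptions go through the same lookup as the user's prompt.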

It's this hierarchical processing that enables models to understand not just what tools are available, but when and how to use them.

The real breakthrough happens in the upper reasoning layers, where the model synthesizes everything it has learned about the conversation context, available tool capabilities, and user intent.

These layers don't just match keywords—they perform complex reasoning to determine which tools are needed, extract the specific parameters required, and format proper tool execution requests.

The attention mechanisms are particularly crucial here, as they let the model weight the most relevant parts of tool descriptions more heavily than the rest. This is one reason why well-written tool descriptions with clear use cases tend to perform better than generic ones.
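The weighting idea can be sketched with scaled dot-product attention for a single query. The three-dimensional vectors below are hand-picked assumptions, not values from any model; real models learn separate query and key projections and run many attention heads in parallel.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_weights(query, keys):
    """Scaled dot-product scores of one query against each key, then softmax."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    return softmax(scores)


# Hypothetical vectors: axis 0 ~ "weather", axis 1 ~ "math", axis 2 ~ "filler".
query = [1.0, 0.0, 0.2]  # stands in for a user asking about the forecast
keys = {
    "get_weather description": [0.9, 0.1, 0.1],
    "calculator description": [0.1, 0.9, 0.1],
    "boilerplate text": [0.1, 0.1, 0.9],
}

weights = attention_weights(query, list(keys.values()))
for name, weight in zip(keys, weights):
    print(f"{name}: {weight:.2f}")
```

In this toy setup the weather tool's description receives the largest weight because its vector points in nearly the same direction as the query. A vague description would sit closer to the "filler" direction and attract less attention.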

Understanding this internal architecture isn't just academic—it has practical implications for developers building AI applications.

When you know that tool calling decisions emerge from layer-by-layer processing rather than simple rule matching, you can write better tool descriptions, craft more effective system prompts, and debug issues more efficiently. As the AI ecosystem moves toward standardized protocols for tool integration, such as the Model Context Protocol (MCP), developers who understand the underlying neural processes will be better equipped to build robust applications that make deliberate use of the model's decision-making instead of treating it as a mysterious black box.
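As an illustration of what a better tool description might look like, the sketch below contrasts a vague definition with a specific one. The tool names, fields, and wording are hypothetical; the layout loosely follows the name, description, and JSON Schema style parameters that many tool calling interfaces use, so check your provider's documentation for the exact format.

```python
import json

# A vague definition gives the model little to match against user intent.
vague_tool = {
    "name": "search",
    "description": "Searches things.",
}

# A specific definition states what the tool does, when to use it,
# when not to use it, and what each parameter means.
specific_tool = {
    "name": "search_orders",  # hypothetical tool name
    "description": (
        "Look up a customer's past orders by email address. "
        "Use when the user asks about order status, delivery dates, or refunds. "
        "Do not use for product catalog questions."
    ),
    "parameters": {
        "type": "object",
        "properties": {
            "email": {"type": "string", "description": "Customer email address"},
            "limit": {
                "type": "integer",
                "description": "Maximum number of orders to return",
            },
        },
        "required": ["email"],
    },
}

print(json.dumps(specific_tool, indent=2))
```

The second definition gives the model explicit use cases and parameter meanings to relate to the user's request, which is the kind of signal the layers described above can work with.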

LLM Internal Stack Architecture

Breaking down the neural network layers that power tool calling decisions

2. Intent Matching: Middle layers match user intent to tool capabilities

3. Parameter Extraction: Upper layers extract required parameters from context

4. Decision Output: Output layer generates tool call with proper formatting

Residual Connections: Information flows between layers for better understanding

Attention Mechanism: Critical for focusing on relevant tool descriptions

Emergent Behavior: Tool calling emerges from layer interactions, not explicit programming
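The residual connection idea can be sketched in a few lines: each layer adds its output to its input rather than replacing it, so information from earlier layers stays available higher up. The `layer_transform` function is a hypothetical stand-in for a layer's attention and feed-forward computation, and normalization steps found in real models are omitted.

```python
def layer_transform(vector, weight):
    """Stand-in for a layer's attention + feed-forward computation."""
    return [weight * v for v in vector]


def residual_block(vector, weight):
    """Add the layer's output to its input instead of overwriting the input."""
    update = layer_transform(vector, weight)
    return [v + u for v, u in zip(vector, update)]


hidden = [1.0, 2.0, 3.0]
for weight in (0.1, 0.1, 0.1):  # three toy layers
    hidden = residual_block(hidden, weight)

print(hidden)
```

Because every block only adds an update, the original input is never discarded; each layer refines the running representation a little.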

Let's understand this with a simple analogy:

Student Question: "How do I solve this math word problem about sharing pizza?"

- 📚 Kindergarten (Input Embedding): "I see words: pizza, sharing, friends, slices"

- ✏️ Elementary (Processing L1-6): "These are math words, not story words. Numbers are important."

- 🔢 Middle School (Attention L6-12): "Focus on the numbers: 8 slices, 3 friends - this is division!"

- 📊 High School (Context L12-18): "This is a fraction/division word problem. Student needs step-by-step help."

- 🎓 College (Reasoning L18-24): "Use the math solver for division, then the visualizer to show pizza slices"

- 👨‍🏫 Professor (Output): "Execute: MathSolver.divide(8,3) and Visualizer.draw_pizza_slices()"

Result: Each grade level adds sophistication until we get the perfect learning solution!
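On the application side, the "Professor" step usually arrives as structured text that your code parses and executes. The sketch below assumes the model emits a JSON list of calls; the JSON shape, the registry, and both tool implementations are hypothetical and simply mirror the names used in the analogy.

```python
import json


def divide(a, b):
    """Hypothetical MathSolver.divide tool."""
    return a / b


def draw_pizza_slices(slices=8):
    """Hypothetical Visualizer.draw_pizza_slices tool; returns a text drawing."""
    return " ".join("[slice]" for _ in range(slices))


# Map the tool names the model may emit to the functions that implement them.
TOOL_REGISTRY = {
    "MathSolver.divide": divide,
    "Visualizer.draw_pizza_slices": draw_pizza_slices,
}


def execute_tool_calls(model_output):
    """Parse the model's JSON text and run each requested tool in order."""
    results = []
    for call in json.loads(model_output):
        name = call["name"]
        if name not in TOOL_REGISTRY:
            raise ValueError(f"Model requested unknown tool: {name}")
        results.append(TOOL_REGISTRY[name](**call.get("arguments", {})))
    return results


# Stand-in for what the model's output layer might produce for the pizza question.
model_output = json.dumps([
    {"name": "MathSolver.divide", "arguments": {"a": 8, "b": 3}},
    {"name": "Visualizer.draw_pizza_slices", "arguments": {}},
])

results = execute_tool_calls(model_output)
print(results)
```

Note that the model only produces the request; the division and the drawing happen in your code. Rejecting unknown tool names guards against the model emitting a call that was never registered.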