Running Hugging Face Transformers with GPU Acceleration Using Docker Offload

Stop letting local machine constraints limit your AI ambitions. Docker Offload brings NVIDIA L4 GPUs with 23GB of memory to your fingertips—no hardware investment, no cloud complexity, just familiar Docker commands with unlimited compute power

The Local Development Dilemma

As an AI developer, you've probably faced this frustrating scenario: you want to experiment with the latest Hugging Face model—perhaps GPT-4, BERT, or a cutting-edge vision transformer—but your local machine simply doesn't have the horsepower. Your laptop fans are screaming, your 8GB of RAM is maxed out, and that GPU you bought two years ago is already showing its age.

Meanwhile, the model you want to run requires 16GB+ of VRAM, and inference times are measured in minutes rather than seconds. The choice becomes: either invest thousands in new hardware or deal with the complexity of setting up cloud infrastructure from scratch.

Enter Docker Offload: Cloud Power, Local Simplicity

Docker Offload solves this exact problem by seamlessly extending your local Docker workflow to powerful cloud infrastructure equipped with NVIDIA L4 GPUs. You get 23GB of GPU memory and enterprise-grade compute power, all while maintaining the familiar Docker commands you already know.

Setting Up Your GPU-Accelerated Hugging Face Environment

Prerequisites

Before we dive in, ensure you have:

- Docker Desktop 4.43.0 or later

- A Docker Hub account

- Basic familiarity with Docker commands

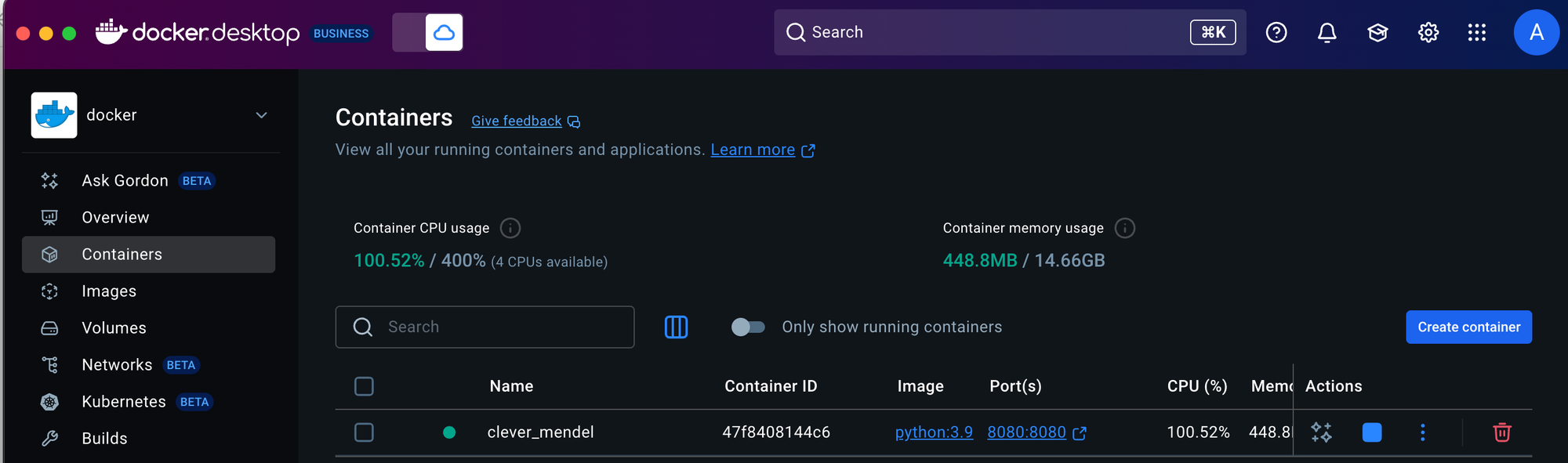

Starting Your Docker Offload Session

First, let's initialize Docker Offload with GPU support:

docker offload start

When prompted, enable GPU support. This provisions an NVIDIA L4 GPU instance in the cloud, giving you access to 23GB of GPU memory—more than most local setups.

Verify your GPU setup:

docker run --rm --gpus all nvidia/cuda:12.4.0-runtime-ubuntu22.04 nvidia-smiYou should see output showing your NVIDIA L4 GPU with CUDA 12.4 support.

Running Hugging Face Transformers: From Zero to AI in One Command

Here's where Docker Offload truly shines. With a single command, you can create a complete, GPU-accelerated environment for Hugging Face Transformers:

docker run --rm -p 8080:8080 --gpus all python:3.9 sh -c "pip install transformers torch accelerate && python -c 'import transformers; print(\"Transformers GPU ready\")'"This command accomplishes several critical tasks:

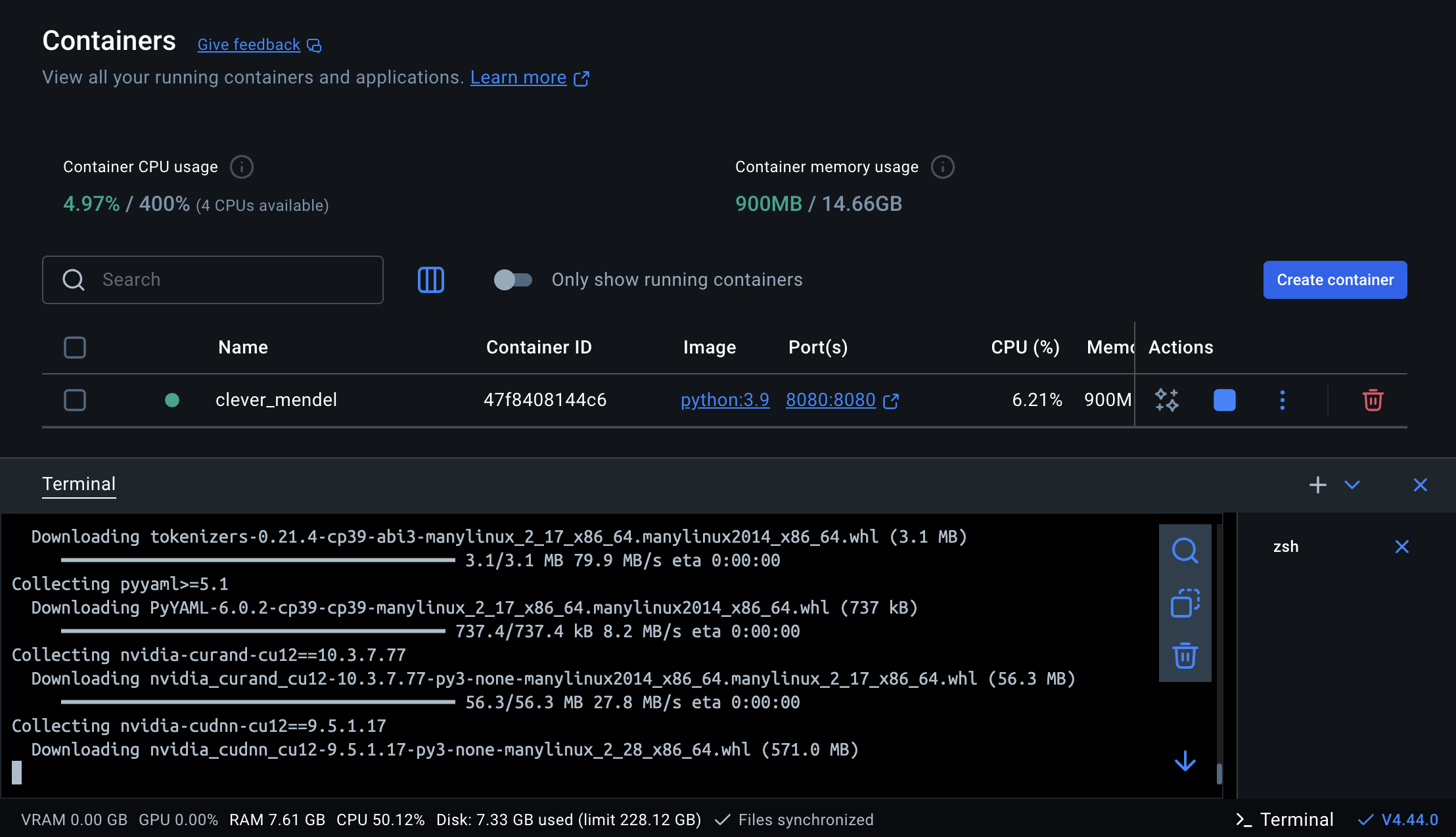

What's Happening Under the Hood

- Container Creation: Spins up a Python 3.9 environment with full GPU access

- Library Installation: Installs the essential AI stack:

transformers: Hugging Face's library with thousands of pre-trained modelstorch: PyTorch for deep learning computations with CUDA supportaccelerate: Simplifies distributed and mixed-precision training

- GPU Verification: Confirms the environment can access GPU resources

- Port Forwarding: Opens port 8080 for interactive applications

Practical Examples: Real AI Workloads

Text Generation with GPT-2

Let's run a practical example using GPT-2 for text generation:

docker run --rm -p 8080:8080 --gpus all python:3.9 sh -c "

pip install transformers torch accelerate flask &&

python -c \"

from transformers import GPT2LMHeadModel, GPT2Tokenizer

import torch

print('Loading GPT-2 model and tokenizer...')

# Load model and tokenizer

tokenizer = GPT2Tokenizer.from_pretrained('gpt2')

model = GPT2LMHeadModel.from_pretrained('gpt2')

# Set pad token to eos token to avoid warnings

tokenizer.pad_token = tokenizer.eos_token

# Move to GPU

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

model.to(device)

print(f'Model loaded on device: {device}')

# Generate text

input_text = 'The future of AI development is'

print(f'Input: {input_text}')

input_ids = tokenizer.encode(input_text, return_tensors='pt').to(device)

with torch.no_grad():

output = model.generate(

input_ids,

max_length=100,

num_return_sequences=1,

temperature=0.7,

do_sample=True,

pad_token_id=tokenizer.eos_token_id,

no_repeat_ngram_size=2

)

generated_text = tokenizer.decode(output[0], skip_special_tokens=True)

print(f'Generated: {generated_text}')

print('\\nGPU Memory Usage:')

if torch.cuda.is_available():

print(f'Allocated: {torch.cuda.memory_allocated()/1024**3:.2f} GB')

print(f'Cached: {torch.cuda.memory_reserved()/1024**3:.2f} GB')

\"

"

The current state of the field is as follows:

"We have a new approach to AI that has never been done before. This approach is very unique and very different from what we have been doing before in that we are now using high-level APIs from the ground up to build highly optimized applications. We see this as an important step in the evolution

GPU Memory Usage:

Allocated: 0.48 GB

Cached: 0.54 GBImage Classification with Vision Transformers

For computer vision tasks, you can run state-of-the-art vision transformers:

docker run --rm -p 8080:8080 --gpus all python:3.9 sh -c "

pip install transformers torch accelerate pillow requests &&

python -c \"

from transformers import ViTImageProcessor, ViTForImageClassification

from PIL import Image

import requests

import torch

# Load model and processor

processor = ViTImageProcessor.from_pretrained('google/vit-base-patch16-224')

model = ViTForImageClassification.from_pretrained('google/vit-base-patch16-224')

# Move to GPU

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

model.to(device)

print(f'Model loaded on: {device}')

print('Vision Transformer ready for image classification!')

\"

"[notice] To update, run: pip install --upgrade pip

Model loaded on: cuda

Vision Transformer ready for image classification!Performance Benefits: Local vs. Cloud

Local Machine Limitations

- Memory Constraints: Most laptops have 8-16GB RAM, insufficient for large models

- GPU Limitations: Consumer GPUs often lack the VRAM for modern transformers

- Thermal Throttling: Extended AI workloads cause performance degradation

- Inconsistent Performance: Results vary dramatically across team members' hardware

Docker Offload Advantages

- 23GB GPU Memory: Run models that would never fit locally

- Consistent Performance: Same powerful hardware for your entire team

- No Thermal Issues: Cloud infrastructure designed for sustained compute loads

- Cost Efficiency: Pay only for actual usage, no hardware investment

Interactive Development with Jupyter

For a more interactive experience, you can run Jupyter Lab with Hugging Face pre-installed:

docker run --rm -p 8888:8888 --gpus all python:3.9 sh -c "

pip install transformers torch accelerate jupyter &&

jupyter lab --ip=0.0.0.0 --port=8888 --no-browser --allow-root --token=your-secure-token

"This gives you a complete notebook environment where you can experiment with different models interactively. Access it at http://localhost:8888 with the token you specified.

Creating a Persistent Development Environment

For ongoing development, create a dedicated workspace:

# Start a long-running container

docker run -d --name hf-workspace -p 8888:8888 --gpus all python:3.9 sh -c "

pip install transformers torch accelerate jupyter ipywidgets &&

jupyter lab --ip=0.0.0.0 --port=8888 --no-browser --allow-root --token=dev-token &&

tail -f /dev/null

"

# Access the container for interactive work

docker exec -it hf-workspace bashAdvanced Use Cases

Fine-tuning with Multiple GPUs

For more intensive workloads, you can leverage the accelerate library for distributed training:

docker run --rm -p 8080:8080 --gpus all python:3.9 sh -c "

pip install transformers torch accelerate datasets &&

accelerate config --config_file accelerate_config.yaml

"Model Serving with FastAPI

Create a production-ready API endpoint:

docker run --rm -p 8080:8080 --gpus all python:3.9 sh -c "

pip install transformers torch accelerate fastapi uvicorn &&

python -c \"

from fastapi import FastAPI

from transformers import pipeline

import torch

app = FastAPI()

# Initialize the pipeline with GPU

device = 0 if torch.cuda.is_available() else -1

generator = pipeline('text-generation', model='gpt2', device=device)

@app.post('/generate')

async def generate_text(prompt: str):

result = generator(prompt, max_length=50, num_return_sequences=1)

return {'generated_text': result[0]['generated_text']}

if __name__ == '__main__':

import uvicorn

uvicorn.run(app, host='0.0.0.0', port=8080)

\"

"Troubleshooting Common Issues

GPU Memory Errors

If you encounter CUDA out-of-memory errors:

- Reduce batch size in your model configuration

- Use gradient checkpointing with

model.gradient_checkpointing_enable() - Consider model sharding with the accelerate library

Package Installation Failures

For persistent environments, create a custom Dockerfile:

FROM python:3.9-slim

RUN pip install transformers torch accelerate

WORKDIR /app

COPY your_script.py .

CMD ["python", "your_script.py"]Network Connectivity

Ensure your Docker Offload session is active:

docker offload statusBest Practices for Production Use

1. Model Caching

Cache downloaded models to reduce startup time:

from transformers import AutoModel

model = AutoModel.from_pretrained('model-name', cache_dir='/app/models')2. Memory Management

Monitor GPU memory usage:

import torch

print(f"GPU memory allocated: {torch.cuda.memory_allocated() / 1024**3:.2f} GB")

print(f"GPU memory cached: {torch.cuda.memory_reserved() / 1024**3:.2f} GB")3. Batch Processing

Process multiple inputs simultaneously for better GPU utilization:

inputs = tokenizer(texts, padding=True, truncation=True, return_tensors="pt")

outputs = model(**inputs.to(device))Cost Optimization Tips

- Session Management: Docker Offload automatically shuts down after 30 minutes of inactivity

- Efficient Development: Test locally first, then scale to Offload for resource-intensive tasks

- Batch Operations: Process multiple tasks in a single session rather than frequent start/stop cycles

What's Next?

This foundation enables you to:

- Experiment with Latest Models: Try models like Llama 2, Code Llama, or Stable Diffusion

- Build Production APIs: Create scalable inference endpoints

- Fine-tune Models: Adapt pre-trained models to your specific use cases

- Prototype Quickly: Test ideas without hardware constraints

Docker Offload transforms GPU-accelerated AI development from a hardware challenge into a simple Docker command. Whether you're prototyping new ideas, building production systems, or just exploring the latest in AI, you now have enterprise-grade compute power at your fingertips.

Ready to Get Started?

Start your Docker Offload session and begin experimenting with Hugging Face Transformers today. The only limit is your imagination, not your hardware.

docker offload start

# Enable GPU support when prompted

docker run --rm -p 8080:8080 --gpus all python:3.9 sh -c "pip install transformers torch accelerate && python -c 'import transformers; print(\"Ready for AI magic!\")'"The future of AI development is here, and it runs on Docker Offload.