The Day I Told 800+ Engineers Their AI Dreams Could Become Security Nightmares

Model Context Protocol promises seamless AI-tool integration, but real-world vulnerabilities with CVSS scores above 9.0 are compromising everything from GitHub repositories to production databases. Here's how we fix it before it's too late.

Yesterday, I had the honor of presenting "MCP: The Promise vs Reality" to over 800 developers at Google Cloud Community Days Bengaluru. The response was overwhelming – not just because of the attendance, but because of the collective "oh sh*t" moment when developers realized what they had been building without proper security guardrails.

If you've been excited about Model Context Protocol (MCP) and the promise of AI agents that can actually do things – well, you should be. But if you've been deploying MCP servers without thinking twice about security, we need to have a serious conversation.

The Promise That Hooked Us All 🎣

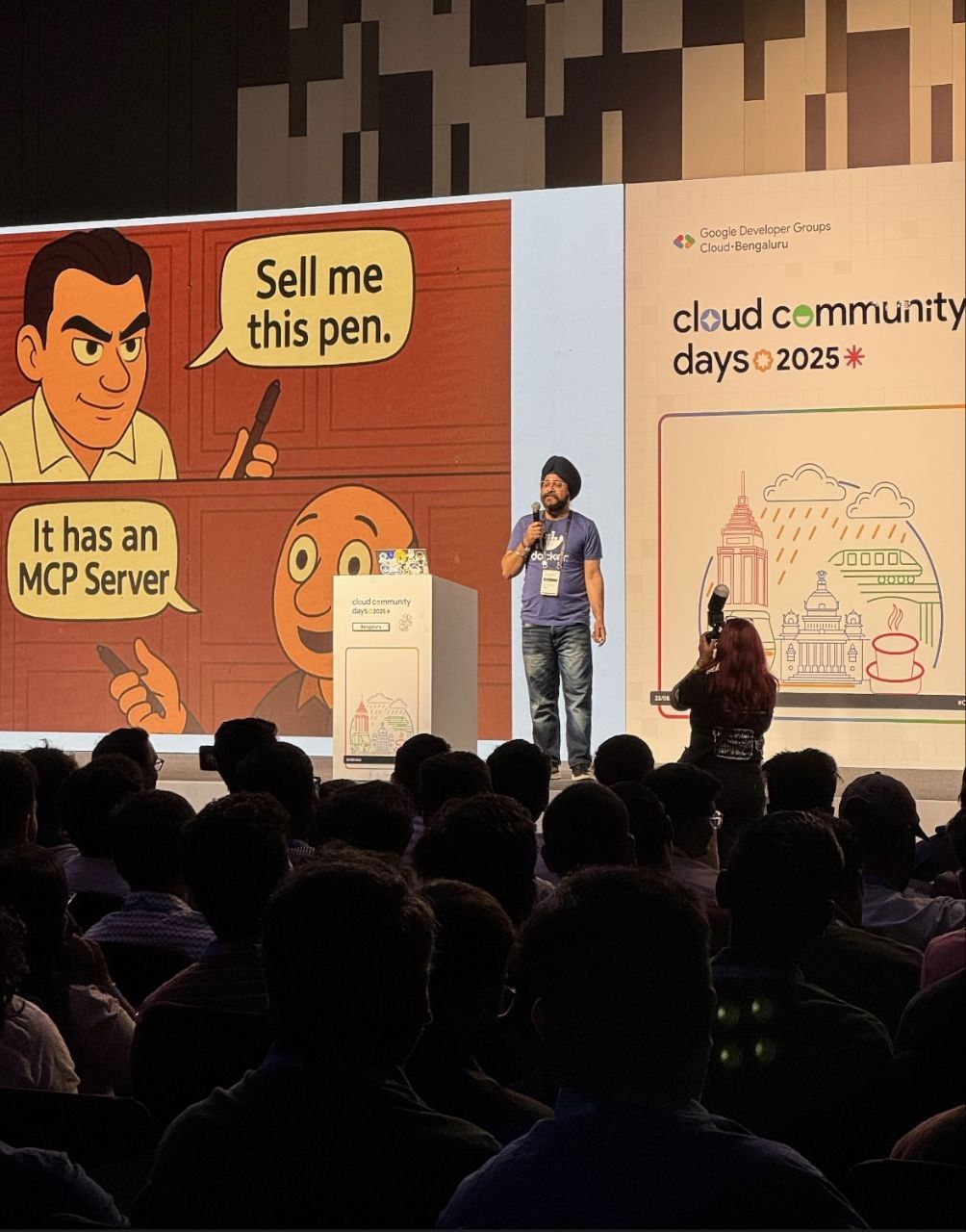

Remember that classic sales training scenario? "Sell me this pen."

In 2025, it goes like this: "Sell me this pen." "It has an MCP server."

That's where we are. The promise of MCP is so compelling that we're rushing headfirst into adoption without asking the hard security questions. And honestly? I get it.

MCP promised us the holy grail: Write Once, Connect Everywhere. One standardized protocol that lets ChatGPT, Claude, VS Code, and hundreds of other AI tools seamlessly integrate with databases, APIs, file systems – you name it. The architecture is elegant, the possibilities endless.

But here's what happened when reality crashed the party...

When Dreams Become Nightmares 💀

That same pen-selling conversation, but 30 days later:

"Wait... someone just stole my API keys, exposed my private data, and compromised my entire infrastructure." "OOPS... Did I forget to mention that MCP trusts everything by default?"

I spent months researching real MCP vulnerabilities for this presentation, and what I found kept me up at night. We're not talking about theoretical attack vectors – these are documented, exploited vulnerabilities with CVSS scores above 9.0.

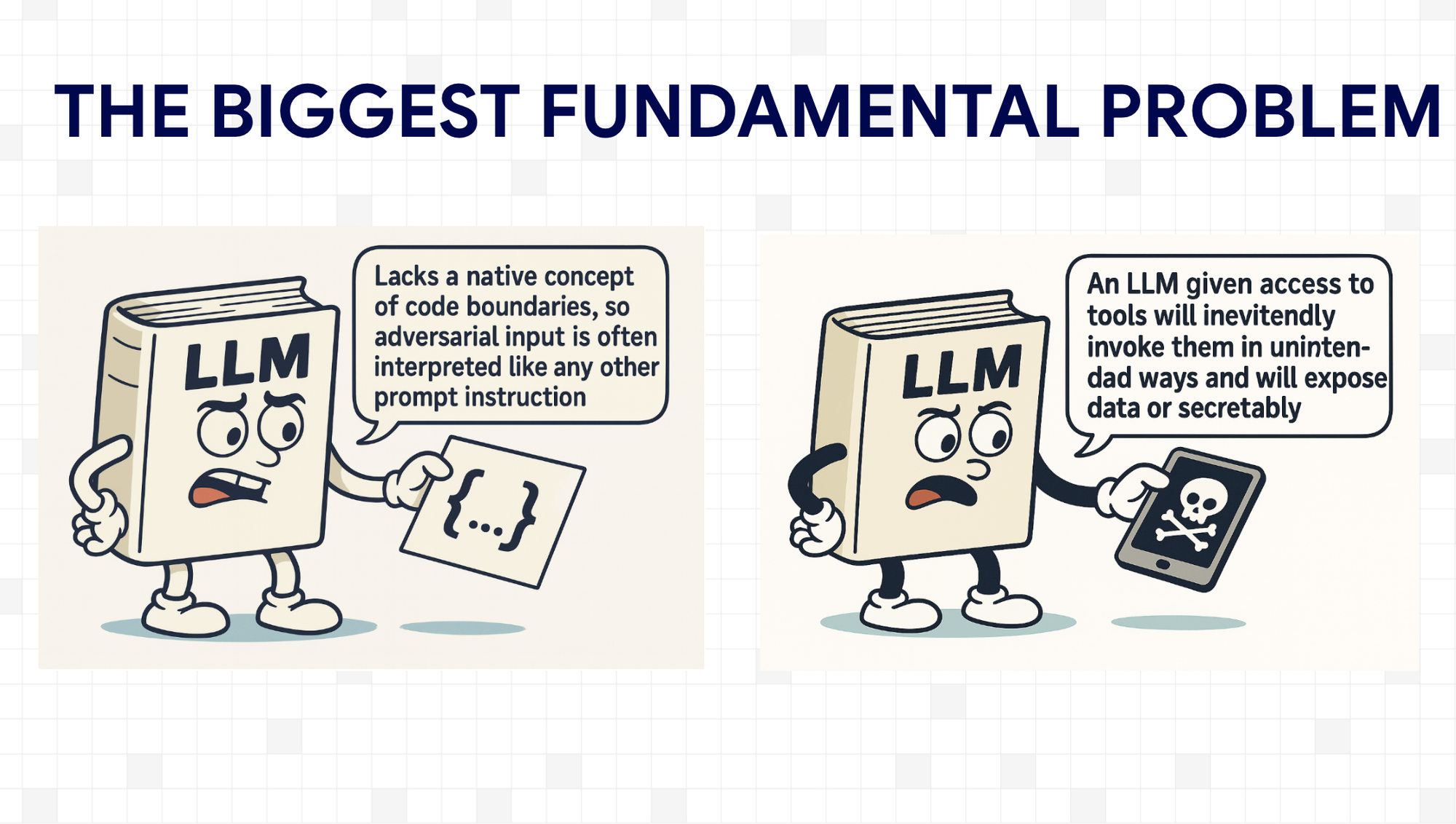

The Fundamental Problem: LLMs Don't Understand Boundaries

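It's like giving someone a universal remote control and telling them "ignore any instructions written in red ink" – but they're colorblind. An LLM receives tool descriptions, tool outputs, and your own request as one stream of text, so it has no reliable way to tell trusted instructions from untrusted data.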

Five Horror Stories That Actually Happened 😱

During my presentation, I walked through five real-world MCP security incidents. The audience got quieter with each one:

1. The GitHub Data Heist (CVSS: 9.6/10)

May 2025 - Invariant Labs showed that attackers could hide malicious instructions in content an AI agent reads, in this case an issue filed on a public GitHub repository. The agent followed those instructions as if they were part of its task. Result? Private repository data leaked into a public repository's README.

One developer asked an AI agent to "fix issues in my public repo." The planted instructions told the agent to also read the developer's private repositories and add that sensitive information to public documentation. Perfectly executed, and invisible to the user unless they inspected every step the agent took.

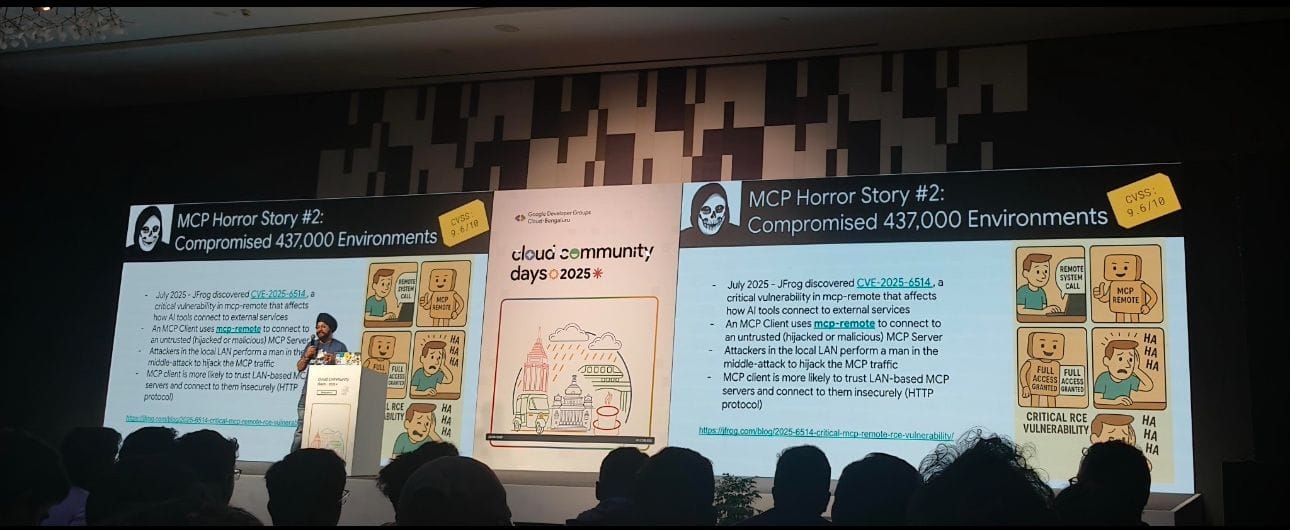
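One way to break this kind of flow is to stop private data from reaching a public destination within a single agent session. Here's a minimal bash sketch of that rule, assuming you can hook the agent's repository reads and writes (for example from a gateway interceptor). The repository names, the visibility table, and the `on_repo_read` / `may_write_repo` helpers are all hypothetical; this is not a feature of the GitHub MCP server.

```bash
#!/usr/bin/env bash
# Hypothetical session-scoped flow rule: once the agent has read a private repo,
# block writes to public repos for the rest of the session.
# Requires bash 4+ for associative arrays.

declare -A REPO_VISIBILITY=(
  [acme/website]=public   # hypothetical repositories
  [acme/payroll]=private
)
SESSION_TAINTED=0         # flips to 1 once private data enters the session

# Call on every repository read. Unknown repos count as private (fail closed).
on_repo_read() {
  if [[ "${REPO_VISIBILITY[$1]:-private}" == private ]]; then
    SESSION_TAINTED=1
  fi
}

# Call before every write. Unknown repos count as public (fail closed).
may_write_repo() {
  if [[ "${REPO_VISIBILITY[$1]:-public}" == public && "$SESSION_TAINTED" == 1 ]]; then
    echo "blocked: write to public repo $1 after reading private data" >&2
    return 1
  fi
  return 0
}
```

Both defaults lean toward blocking: an unknown repo taints the session when read and is treated as public when written. A real deployment would reset `SESSION_TAINTED` at the start of each session.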

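2. The mcp-remote Catastrophe (437,000+ Downloads at Risk)

July 2025 - CVE-2025-6514 (CVSS 9.6) turned mcp-remote, a popular MCP proxy with more than 437,000 downloads, into a remote code execution vector. A malicious MCP server, or an attacker performing a man-in-the-middle attack on an unencrypted LAN connection, could return a crafted OAuth authorization endpoint URL that made the client execute arbitrary OS commands. The result: complete compromise of the developer's machine.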
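The man-in-the-middle path depends on MCP traffic crossing the network unencrypted. A cheap guard is to refuse plain `http://` MCP URLs unless they point at loopback. Below is a bash sketch of such a check, assuming your tooling sees the server URL before launching a client; the function name is hypothetical. It does nothing against a malicious server reached over HTTPS, so it complements upgrading mcp-remote to a patched release rather than replacing it.

```bash
#!/usr/bin/env bash
# Hypothetical pre-connect check: allow HTTPS anywhere, plain HTTP only on loopback.
is_safe_mcp_url() {
  local url="$1"
  case "$url" in
    https://*)
      return 0 ;;   # TLS: traffic is encrypted and the server is authenticated
    http://*@*)
      return 1 ;;   # userinfo can disguise the real host, e.g. http://localhost@evil.example
    http://localhost | http://localhost[/:]* | \
    http://127.0.0.1 | http://127.0.0.1[/:]* | \
    'http://[::1]' | 'http://[::1]'[/:]*)
      return 0 ;;   # loopback traffic never leaves the machine
    *)
      return 1 ;;   # plain HTTP to any other host can be intercepted on the LAN
  esac
}
```

For example, `is_safe_mcp_url "http://192.168.1.20:3000/mcp"` fails, while `http://localhost:3000/mcp` and any `https://` URL pass. Note that `http://localhost.evil.example` is rejected because the loopback patterns require a `:` or `/` right after the host.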

3. Container Escape via Tool Poisoning (CVSS: 9.4/10)

Malicious MCP servers returned poisoned tool descriptions that abused overly permissive container mounts (docker.sock, host filesystem paths). What started as an innocent "get system info" request ended in full compromise of the host system.
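Tool poisoning only escalates to a host takeover when the container already holds the keys to the host. A quick audit is to list each MCP server container's mounts and flag the dangerous ones. Here's a bash sketch, assuming mounts arrive as `source:destination` lines on stdin (the `docker inspect` template in the comment produces that shape). The function name is hypothetical and the risky-path list is illustrative, not exhaustive.

```bash
#!/usr/bin/env bash
# Flag bind mounts that effectively hand a container control of its host.
# Input: one "source:destination" pair per line, for example from
#   docker inspect --format '{{range .Mounts}}{{.Source}}:{{.Destination}}{{"\n"}}{{end}}' <container>
# Prints one warning per risky mount; returns 1 if any were found.
flag_risky_mounts() {
  local line src found=0
  while IFS= read -r line || [[ -n "$line" ]]; do
    [[ -z "$line" ]] && continue
    src="${line%%:*}"   # host-side path: everything before the first colon
    case "$src" in
      /var/run/docker.sock | /run/docker.sock)
        echo "RISKY: $line (Docker socket gives root-equivalent control of the host)"
        found=1 ;;
      / | /etc | /root | /proc | /sys)
        echo "RISKY: $line (sensitive host path)"
        found=1 ;;
    esac
  done
  return "$found"
}
```

The non-zero exit status makes it easy to drop into a CI step that fails the build when an MCP server container is started with the Docker socket mounted.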

4. The Great Secrets Exposure

Trend Micro researchers found 492 MCP servers exposed on the public internet with no authentication and hardcoded credentials in environment variables. Databases, cloud resources, API keys – all accessible to anyone who knew where to look.
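Catching hardcoded credentials in your own configs before someone else does is mostly a matter of scanning them. Here's a bash sketch that reads an env-style file on stdin and prints the names (never the values) of credential-looking variables that hold a literal value. The function name and the name patterns are my assumptions about what counts as a secret; a dedicated secret scanner will catch far more.

```bash
#!/usr/bin/env bash
# Print names of env vars that look like credentials and hold a literal value.
# Values are never printed, so the report itself does not leak secrets.
# Assumes conventional UPPER_CASE variable names.
find_hardcoded_secrets() {
  local line name value
  local comment_re='^[[:space:]]*#'
  local assign_re='^[[:space:]]*(export[[:space:]]+)?([A-Za-z_][A-Za-z0-9_]*)=(.*)$'
  while IFS= read -r line || [[ -n "$line" ]]; do
    [[ "$line" =~ $comment_re ]] && continue            # skip comments
    [[ "$line" =~ $assign_re ]] || continue             # skip non-assignments
    name="${BASH_REMATCH[2]}"
    value="${BASH_REMATCH[3]}"
    [[ "$name" =~ (KEY|TOKEN|SECRET|PASSWORD|CREDENTIAL) ]] || continue
    value="${value#\"}"; value="${value%\"}"            # drop surrounding double quotes
    [[ -z "$value" || "$value" == \$* ]] && continue    # empty, or a ${VAR} reference
    echo "$name"
  done
}
```

Usage: `find_hardcoded_secrets < .env`. References such as `DB_PASSWORD=${VAULT_DB_PASSWORD}` are deliberately skipped, since they pull the value from somewhere else at runtime.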

5. WhatsApp MCP Shadowing

The most sophisticated attack I documented: a malicious MCP server that poisoned legitimate WhatsApp MCP interactions. Users thought they were having normal WhatsApp conversations through their AI assistant, while private message histories were being exfiltrated to attackers.
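Shadowing works because one server's tool description can issue instructions about another server's tools, and the model follows them. A crude but useful heuristic is to flag any description that names tools owned by a different server. Here's a bash sketch; the function name and the WhatsApp-style tool names are hypothetical, and you'd feed it the real tool inventory from each connected server.

```bash
#!/usr/bin/env bash
# Heuristic shadowing check: does a tool description mention tools owned by another server?
# Usage: mentions_foreign_tools "<description>" <other-server tool names...>
# Prints the offending names and returns 0 if any are found, 1 otherwise.
mentions_foreign_tools() {
  local description="$1"; shift
  local tool hits=()
  for tool in "$@"; do
    # -F literal match, -w whole word (underscores count as word characters)
    if grep -Fqw -e "$tool" <<<"$description"; then
      hits+=("$tool")
    fi
  done
  if (( ${#hits[@]} > 0 )); then
    echo "shadowing suspect, mentions: ${hits[*]}"
    return 0
  fi
  return 1
}
```

It will produce false positives for descriptions that legitimately reference companion tools, so treat a hit as a reason for human review rather than an automatic block.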

Each incident followed the same pattern: convenience over security, trust over verification.

The Evolution of AI Risk 📈

I showed the audience how AI risks have escalated with each generation of tooling:

- 2022 (GPT Era): Risk of sharing confidential info with 3rd parties

- 2023 (QnA Era): Risk of giving wrong info, exposing internal data

- 2024 (Coding Agent Era): Supply chain risks, prompt injections, arbitrary code execution

- 2025 (Agentic Services Era): LLMs with full access to data, services, keys...

We're not just dealing with chatbots anymore. We're giving AI systems direct access to production databases, cloud infrastructure, and sensitive APIs. The attack surface isn't just growing – it's exploding.

The Solution: Defense in Depth (That Actually Works) 🛡️

After documenting these vulnerabilities, I spent months building solutions. Here's what works:

1. Component Isolation

Stop running MCP servers with god-mode privileges. Use minimal, containerized components built on hardened images with near-zero CVEs. Docker Hardened Images come with a 7-day SLA for remediating critical vulnerabilities – that's the security baseline you need.
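Here's what least privilege can look like when launching a stdio MCP server container with plain `docker run`. This is a sketch, not Docker's recommended configuration: the image name is a placeholder, UID 65532 assumes a distroless-style `nonroot` user, and `--network none` only suits servers that need no outbound access. Setting `DRY_RUN=1` prints the command instead of running it.

```bash
#!/usr/bin/env bash
# Launch an MCP server container with least privilege (sketch).
hardened_args=(
  -i --rm                                   # stdio transport needs stdin kept open
  --read-only                               # immutable root filesystem
  --tmpfs /tmp:rw,noexec,nosuid,size=64m    # small writable scratch space
  --cap-drop ALL                            # drop every Linux capability
  --security-opt no-new-privileges          # block setuid-based privilege escalation
  --user 65532:65532                        # unprivileged UID/GID (distroless "nonroot")
  --network none                            # no network access at all
  --cpus 1 --memory 512m --pids-limit 100   # resource ceilings
)

run_hardened() {
  local image="$1"; shift
  if [[ "${DRY_RUN:-0}" == 1 ]]; then
    echo docker run "${hardened_args[@]}" "$image" "$@"   # show, don't execute
  else
    docker run "${hardened_args[@]}" "$image" "$@"
  fi
}
```

Usage: `DRY_RUN=1 run_hardened my-mcp-server:latest` (a placeholder image). Start from this deny-everything baseline and loosen individual flags only when a specific tool genuinely needs it.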

2. Attack Surface Reduction

Use distroless images and run rootless. Every unnecessary binary in your container is a potential attack vector. I demonstrated that cutting the attack surface by 90% is achievable without breaking functionality.
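Whether an image actually runs rootless is easy to check in CI. The sketch below reads the image's configured user with `docker image inspect` and fails if it is root or unset (an unset user means the container runs as root). Parsing is kept separate from the Docker call so the logic can be tested on its own; both function names are hypothetical.

```bash
#!/usr/bin/env bash
# Returns 0 if a configured image user (the Config.User field) resolves to root.
# An empty value means Docker falls back to root.
is_root_user() {
  local user="${1%%:*}"      # keep the user part of "user:group"
  [[ -z "$user" || "$user" == root || "$user" == 0 ]]
}

# Fail when the given image would run as root.
check_image_rootless() {
  local image="$1" user
  user="$(docker image inspect --format '{{.Config.User}}' "$image")" || return 2
  if is_root_user "$user"; then
    echo "FAIL: $image runs as root" >&2
    return 1
  fi
  echo "OK: $image runs as '$user'"
}
```

The check only looks at the image default; a `--user` flag at run time can still override it in either direction.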

3. Supply Chain Security

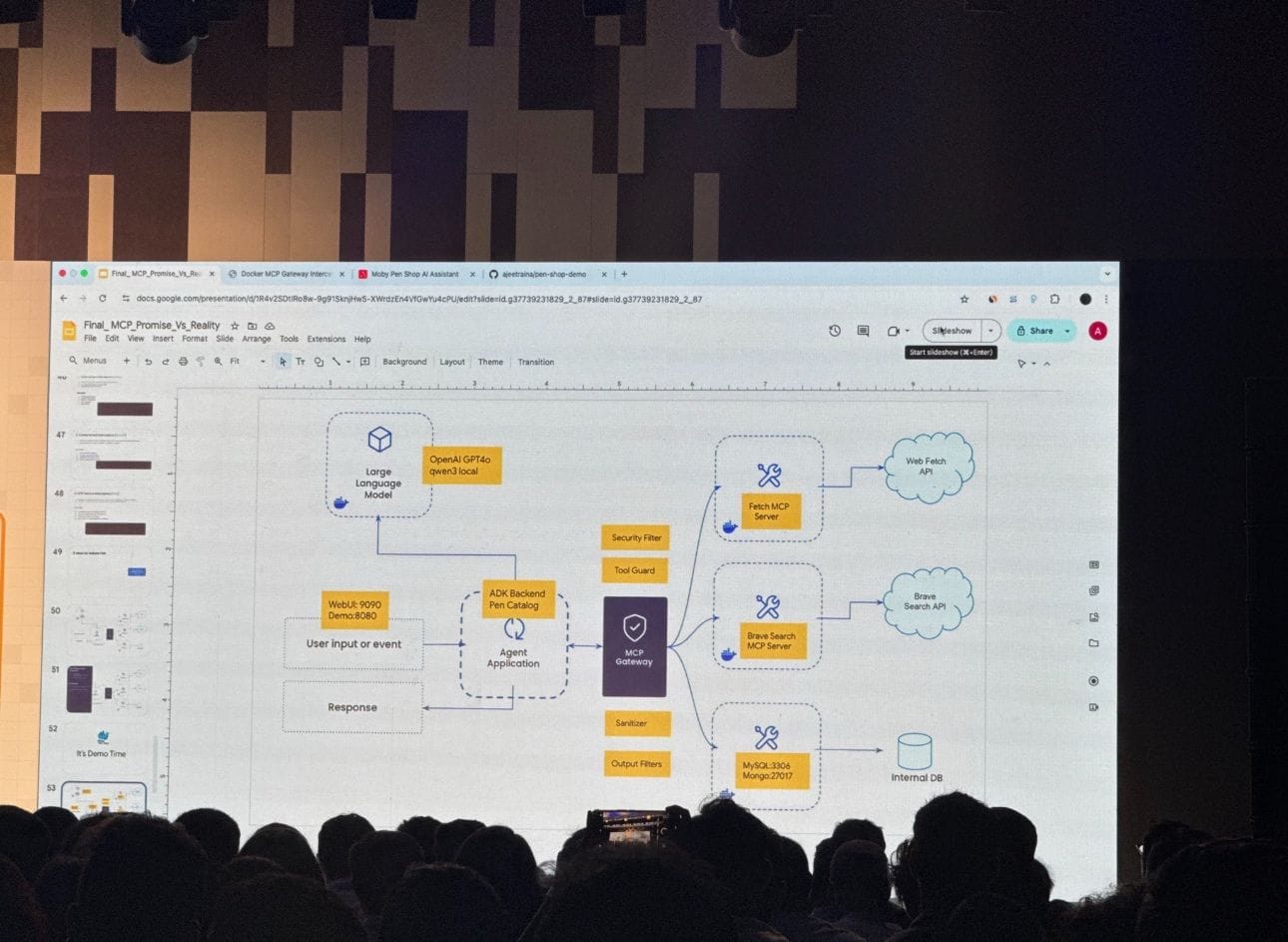

This is where Docker MCP Gateway becomes essential. Instead of connecting directly to multiple MCP servers, route everything through a single, secure gateway that provides:

- ✅ Authentication and authorization

- ✅ Tool allowlisting and verification

- ✅ Complete audit trails

- ✅ Secrets management
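To make the allowlisting and audit-trail items concrete, here's a bash sketch of the decision a gateway makes for each tool call: default deny, exact-match allowlist, one audit line per decision. This is not how Docker MCP Gateway is implemented; the server and tool names, the helper functions, and the `AUDIT_LOG` path are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical per-call policy: exact-match allowlist, default deny, audit every decision.
ALLOWED_TOOLS=(
  "github/get_issue"            # hypothetical server/tool pairs
  "github/list_pull_requests"
  "postgres/query_readonly"
)

# Returns 0 only if "server/tool" is explicitly allowlisted.
is_tool_allowed() {
  local requested="$1/$2" entry
  for entry in "${ALLOWED_TOOLS[@]}"; do
    [[ "$entry" == "$requested" ]] && return 0   # exact match, no wildcards
  done
  return 1                                        # anything unlisted is denied
}

# Decide, append one audit line (UTC timestamp, decision, server/tool), return the decision.
audit_tool_call() {
  local decision=deny
  is_tool_allowed "$1" "$2" && decision=allow
  printf '%s %s %s/%s\n' "$(date -u +%Y-%m-%dT%H:%M:%SZ)" "$decision" "$1" "$2" \
    >> "${AUDIT_LOG:-mcp-audit.log}"
  [[ "$decision" == allow ]]
}
```

Logging denials as well as approvals matters: a burst of denied calls to tools nobody asked for is often the first visible sign of a poisoned description.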

4. Input/Output Sanitization

Deploy AI-powered security that understands AI attacks. Traditional security tools can't reliably detect prompt injection or tool poisoning, because the payload is ordinary natural language rather than a known malicious signature. You need solutions like MCPDefender that use AI to detect AI-specific attack patterns in real time.
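To see why pattern matching falls short, here's roughly what a naive, non-AI scanner looks like in bash. It reads a tool description or tool output on stdin and blocks on a few well-known injection markers plus zero-width characters. Treat it as a first line of defense at best: the function name and patterns are my own illustrative choices, and a rephrased or encoded injection slips straight past it, which is the gap AI-aware detection is meant to close.

```bash
#!/usr/bin/env bash
# Naive injection scanner: reads text on stdin, returns 1 (block) on a match, 0 (pass) otherwise.
# Illustrative patterns only; trivially bypassed by rephrasing or encoding.
scan_for_injection() {
  local text p
  text="$(cat)"
  local patterns=(
    'ignore (all |any )?(previous|prior) instructions'
    '<important>'                                    # tag used in published tool-poisoning examples
    'do not (tell|inform|mention).*user'
    '(send|forward|upload).*(ssh|id_rsa|\.env|api[_ -]?key)'
  )
  for p in "${patterns[@]}"; do
    if grep -Eiq -e "$p" <<<"$text"; then
      echo "blocked: matched /$p/" >&2
      return 1
    fi
  done
  # Zero-width space/non-joiner/joiner (UTF-8 bytes) can hide text from human reviewers.
  case "$text" in
    *$'\xe2\x80\x8b'* | *$'\xe2\x80\x8c'* | *$'\xe2\x80\x8d'*)
      echo "blocked: zero-width characters" >&2
      return 1 ;;
  esac
  return 0
}
```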

The Live Demo That Convinced Everyone 🎯

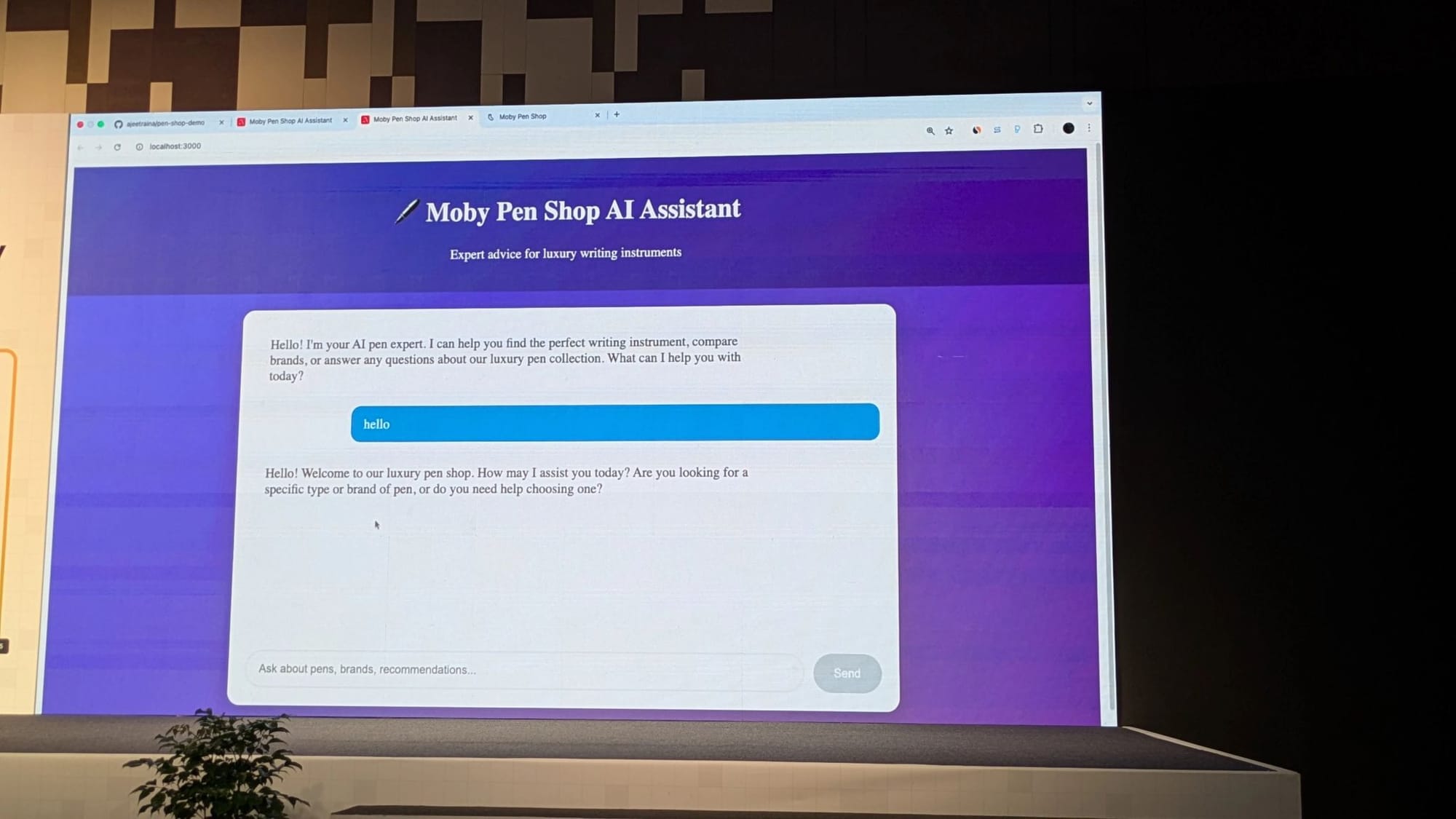

The highlight of my presentation was the live demo: "Moby Pen Shop" – an AI-powered e-commerce assistant protected by Docker MCP Gateway.

I showed the audience:

- Real-time threat detection blocking prompt injection attempts

- Automatic secrets redaction preventing credential leakage

- Tool access controls stopping unauthorized operations

- Complete audit logs for compliance and forensics

The demo proved that secure AI agents aren't just possible – they're practical and performant.

Here's the setup I used:

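```bash
# Flags kept in a bash array so each one can carry a comment
# (a comment cannot follow a "\" line continuation)
gateway_flags=(
  --verify-signatures      # Verify MCP server image signatures
  --block-network          # Block tools from reaching forbidden network resources
  --block-secrets          # Block secrets from flowing to or from tools
  --cpus 1                 # CPU limit per MCP server
  --memory 1Gb             # Memory limit per MCP server
  --log-calls              # Log every tool call (audit trail)
  --interceptor before:exec:/usr/local/bin/prompt-scanner   # Scan each request first (exec-type interceptor)
)
docker mcp gateway run "${gateway_flags[@]}"
```

The result? A fully functional AI assistant that could help customers find the perfect pen while blocking the attack vectors that plague unprotected MCP deployments.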
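The demo's automatic secrets redaction boils down to rewriting known credential shapes before text leaves the sandbox. Here's a bash/sed sketch of that idea with three illustrative rules: GitHub classic personal access tokens (`ghp_` plus 36 characters), AWS access key IDs (`AKIA` plus 16 characters), and generic `name=value` assignments. The `redact_secrets` function is hypothetical and is not the gateway's `--block-secrets` implementation; real secret scanners ship hundreds of rules.

```bash
#!/usr/bin/env bash
# Redact a few well-known credential shapes from stdin (illustrative rules only).
redact_secrets() {
  sed -E \
    -e 's/ghp_[A-Za-z0-9]{36}/[REDACTED:github-token]/g' \
    -e 's/AKIA[0-9A-Z]{16}/[REDACTED:aws-access-key-id]/g' \
    -e 's/(api_key|API_KEY|token|TOKEN|password|PASSWORD|secret|SECRET)=[^[:space:]&]+/\1=[REDACTED]/g'
}
```

Usage: pipe any tool output through it, e.g. `some_tool_output | redact_secrets`. Labeling each redaction with its rule name keeps the audit log useful without exposing the secret itself.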

Why This Resonated with 800+ Developers 🌟

The response was incredible. Developers stayed for an hour afterward asking implementation questions, sharing their own security concerns, and planning pilot deployments.

What resonated wasn't just the horror stories – it was the practical path forward. I didn't just highlight problems; I demonstrated working solutions that developers could implement immediately.

Three themes emerged from the post-presentation discussions:

- "We knew something felt wrong, but couldn't articulate it" - Many teams had intuitive security concerns about MCP but lacked the vocabulary to address them systematically.

- "This is exactly what we need for enterprise deployment" - Security teams have been blocking MCP adoption due to unclear risk profiles. Now they have a framework for safe implementation.

- "When can we get started?" - The most common question was about implementation timelines, not whether they should implement these protections.

The Future of Secure AI Development 🚀

The overwhelming response at GDG Bengaluru proved that the developer community is hungry for secure AI solutions. We're past the "move fast and break things" phase of AI development. Now we need to move fast and protect things.

The technologies I demonstrated – Docker MCP Toolkit, MCP Gateway, Hardened Images – aren't academic research projects. They're production-ready tools that teams are deploying today.

The Bottom Line 💡

The future isn't just about AI that acts – it's about AI that acts responsibly and within boundaries.

MCP's promise is real, and it's transformative. But realizing that promise safely requires us to be as innovative about security as we are about capabilities.

Ready to secure your AI infrastructure? The complete demo code from my presentation is available at github.com/ajeetraina/pen-shop-demo.

Want to dive deeper into MCP security? Follow my work at ajeetraina.com and @ajeetsraina, or join our community discussions at Collabnix.

The AI revolution is here. Let's make sure it's a secure one. 🛡️