Docker Model Runner Now Supports Anthropic Messages API Format

Develop with Anthropic SDK locally, deploy to Claude in production

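To make "Anthropic Messages API format" concrete, here is a minimal sketch that builds (but does not send) a request in that shape using only the standard library. The local base URL `http://localhost:12434`, the `/v1/messages` path on Docker Model Runner, and the model name `ai/smollm2` are all assumptions for illustration; check the Docker Model Runner docs for the actual endpoint and the models you have pulled.

```python
import json
import urllib.request

# Hypothetical values: adjust host, port, path, and model for your local setup.
BASE_URL = "http://localhost:12434"  # assumed Docker Model Runner address
MODEL = "ai/smollm2"                 # assumed locally pulled model name


def build_messages_request(prompt: str, base_url: str = BASE_URL,
                           model: str = MODEL,
                           max_tokens: int = 256) -> urllib.request.Request:
    """Build a POST request whose JSON body follows the Anthropic Messages API shape."""
    body = {
        "model": model,
        "max_tokens": max_tokens,  # required field in the Messages API
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/v1/messages",  # assumed path on the local server
        data=json.dumps(body).encode("utf-8"),
        headers={
            "content-type": "application/json",
            "anthropic-version": "2023-06-01",
        },
        method="POST",
    )


req = build_messages_request("Summarize what a Dockerfile does.")
print(req.full_url, req.get_method())
# To send it: json.load(urllib.request.urlopen(req))
```

The point of the shared format is that only the base URL, credentials, and model name change between environments: against Anthropic's hosted API the same body goes to `https://api.anthropic.com/v1/messages` with an `x-api-key` header and a Claude model name.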

Wellness

We live in one of the sunniest countries on earth, yet most of us are running on empty. If you're a developer who's always tired, brain-fogged, and blaming it on deadlines — your blood work might tell a different story.

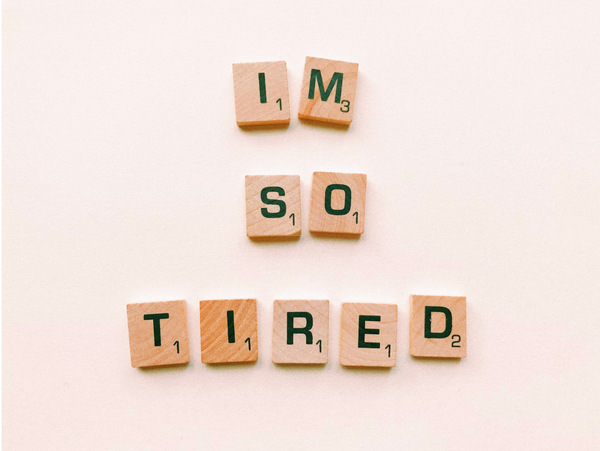

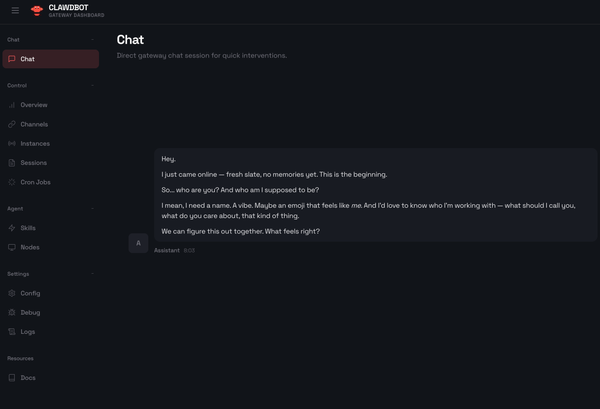

A quick guide to creating a Docker skill for Clawdbot — bringing container management, image analysis, and Compose operations to your favorite messaging platform.

Deploy your personal AI assistant across WhatsApp, Telegram, Discord, and 10+ messaging platforms

Triple your screen real estate in under 5 minutes

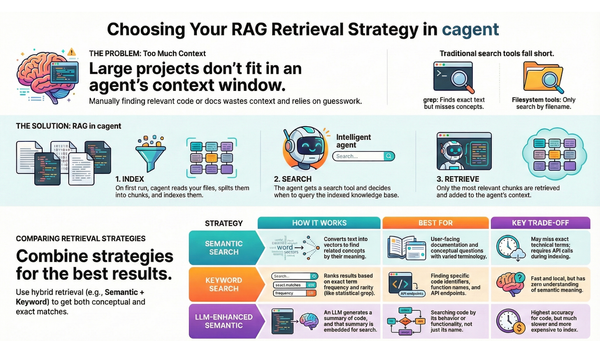

Want to run AI-powered code search without sending your proprietary code to the cloud? This tutorial shows you how to set up Retrieval-Augmented Generation (RAG) using Docker Model Runner and cagent.

Your agent can access your codebase but can't load all of it into context; even a 200K-token window isn't enough. Without smart search, it wastes tokens loading irrelevant files.
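The core idea of "smart search" is to rank files by relevance to the question and load only the best ones that fit a token budget. The sketch below shows that selection step. It swaps in simple keyword overlap for the embedding similarity a real RAG setup (such as one built on Docker Model Runner and cagent) would use, and it estimates tokens with a rough 4-characters-per-token heuristic; both are simplifying assumptions, and `select_context` is a hypothetical helper, not part of either tool.

```python
import re
from typing import Dict, List, Set


def tokenize(text: str) -> Set[str]:
    """Lowercased identifier-like words found in the text."""
    return set(re.findall(r"[a-z_][a-z0-9_]*", text.lower()))


def estimate_tokens(text: str) -> int:
    # Rough heuristic (assumption): about 4 characters per token.
    return max(1, len(text) // 4)


def select_context(query: str, files: Dict[str, str], budget: int) -> List[str]:
    """Rank files by word overlap with the query, then keep the best ones that fit the budget."""
    q = tokenize(query)
    scored = sorted(
        ((len(q & tokenize(body)), path) for path, body in files.items()),
        key=lambda pair: (-pair[0], pair[1]),  # highest score first, then by path
    )
    chosen: List[str] = []
    used = 0
    for score, path in scored:
        if score == 0:
            break  # remaining files share no words with the query
        cost = estimate_tokens(files[path])
        if used + cost <= budget:
            chosen.append(path)
            used += cost
    return chosen


files = {
    "auth/login.py": "def login(user, password): return check_password(user, password)",
    "billing/invoice.py": "def create_invoice(order): return Invoice(order)",
    "auth/password.py": "def check_password(user, password): return hash(password) == user.hash",
}
print(select_context("where is the password checked during login", files, budget=100))
```

The billing file never reaches the context because it shares no terms with the question, which is exactly the token waste the paragraph above describes. Swapping `tokenize`-based overlap for vector similarity changes the ranking quality, not the budgeted selection loop.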

The 2026 State of AI Agents Report details a major transition as organizations shift from experimental pilots to autonomous production systems.

Wellness

Everyone's sharing how they 10x'd their startup with AI. Nobody's sharing how they skipped another meal, ignored another headache, postponed another checkup. The highlight reel is lying to you.

2026 promises to be transformative. Organizations now run 20+ clusters across 5+ cloud environments. In 2025, 55% adopted platform engineering, and 58% are running AI workloads on K8s. Docker made 1,000+ hardened images free. Here are five trends that will dominate K8s in 2026, and what they mean for your infrastructure.

Build a beautiful web dashboard for your Tapo CCTV cameras using Docker Compose, the tapo-rest API, and vanilla JavaScript: control your cameras from any browser in 15 minutes.

CrashLoopBackOff is Kubernetes telling you: "I've tried restarting your container multiple times, but it keeps failing, so I'm giving up temporarily."
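The "giving up temporarily" part is a growing wait between restarts. Per the Kubernetes documentation, the kubelet's default restart back-off starts at 10 seconds, doubles after each failure, and is capped at five minutes (it resets once the container runs cleanly for 10 minutes). The sketch below reproduces that default schedule; `restart_delays` is a hypothetical helper for illustration and does not model the reset or any non-default kubelet settings.

```python
from typing import List

INITIAL_DELAY_S = 10  # documented default first back-off, in seconds
MAX_DELAY_S = 300     # documented cap: five minutes


def restart_delays(failures: int) -> List[int]:
    """Seconds the kubelet waits before each restart, assuming the default exponential back-off."""
    delays: List[int] = []
    delay = INITIAL_DELAY_S
    for _ in range(failures):
        delays.append(delay)
        delay = min(delay * 2, MAX_DELAY_S)  # double, but never exceed the cap
    return delays


print(restart_delays(7))  # [10, 20, 40, 80, 160, 300, 300]
```

This is why a pod stuck in CrashLoopBackOff seems to restart less and less often: after a handful of failures, each retry is five minutes apart.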