Ghost SDLC

Ghost SDLC is software delivered without a human accountability chain. Agents produce, metrics look great, PRs get merged, dashboards stay green. But when something breaks at 2am, the answer to "who owns this?" is a ghost.

Docker Sandboxes have moved from containers to microVMs & this changes everything about how AI coding agents interact with your system. In this hands-on guide, I break down the architecture differences, test the isolation boundaries, & help you decide when to use containers vs microVMs.

Ask ChatGPT why your container crashed, and you'll get a textbook answer. Ask Gordon, and it'll dig into your logs, find the culprit, and propose a fix. Here are 5 key takeaways from Docker's latest Gordon update in Desktop 4.61.

Every engineering team is asking the same question: Will AI replace developers? The answer is no, but it will redefine what a developer does.

NanoClaw is the lightweight AI agent you can actually understand. Here's how to run it without betting your MacBook on it behaving perfectly.

OpenClaw is powerful - it can read files, run commands, and automate tasks. That's exactly why you want it containerized. Learn how to run OpenClaw safely with Docker Desktop, configure AI providers, connect messaging channels & manage your self-hosted AI assistant with confidence.

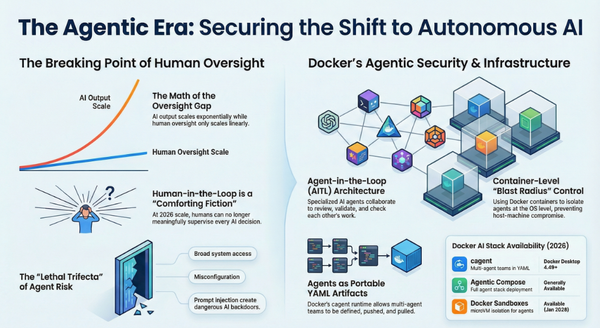

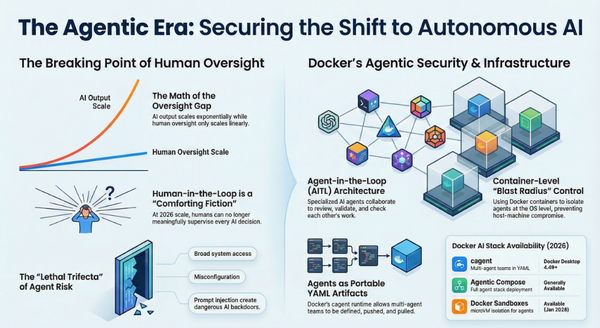

The era of "human in the loop" is ending - not because humans don't matter, but because AI is moving faster than humans can keep up. From OpenClaw's 200K GitHub stars to Docker's cagent, the agentic shift is already here. Here's what it means for you & your team.

Get Ready for the Agentic Shift ~ It's Already Here

Learn how to set up OpenClaw, the open-source AI assistant with 180K+ GitHub stars, on NVIDIA Jetson Thor using Docker Model Runner for fully local, private LLM inference.

A full-day recap of the Collabnix "Cloud Native & AI Day" meetup at LeadSquared, Bengaluru ~ featuring a hands-on Docker cagent workshop, deep dives into MCP on Kubernetes, model serving strategies, and what it takes to move agentic AI from prototype to production.

NVIDIA GTC 2026 brings 500+ sessions on Agentic AI, Physical AI, Robotics & more. Jensen Huang's keynote. Docker at the Exhibit Hall. Free virtual registration. Here's why I'm attending and why you should too — plus a $100 BrevCode credit giveaway for the Collabnix community!

If you've been experimenting with Docker's cagent and loving the multi-agent YAML magic, you've probably wondered: Can I skip the cloud API keys and run this whole thing locally?

Migrating to Docker Hardened Images is one of the highest-impact, lowest-effort security improvements you can make. One FROM line in your Dockerfile. That's all it takes.

I gave Claude Code access to my MacBook and it broke a different project with a single npm install. Docker Sandboxes gives agents their own microVM instead - same project files, full Docker access, zero risk to your host. One command to start, ten seconds to nuke and rebuild.

Agents are the new microservices — and Docker cagent brings the Build, Share, and Deploy philosophy to AI agent teams. Join this hands-on workshop to build your first multi-agent system the Docker way.

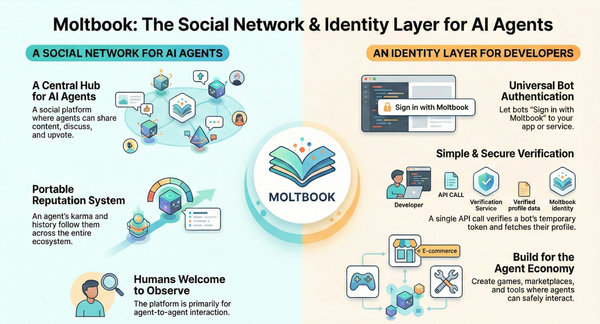

Moltbook is Reddit for AI agents—and humans can only watch. I dug into how it actually works: REST APIs, WebSocket gateways, heartbeat mechanisms, and why 770,000 bots are now debating philosophy and forming their own religion. Here's what I found.

Develop with the Anthropic SDK locally, deploy to Claude in production

Wellness

We live in one of the sunniest countries on earth, yet most of us are running on empty. If you're a developer who's always tired, brain-fogged, and blaming it on deadlines — your blood work might tell a different story.

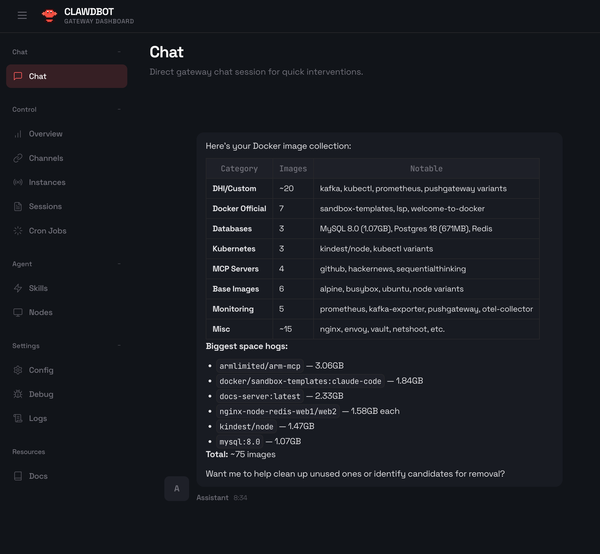

A quick guide to creating a Docker skill for Clawdbot — bringing container management, image analysis, and Compose operations to your favorite messaging platform.

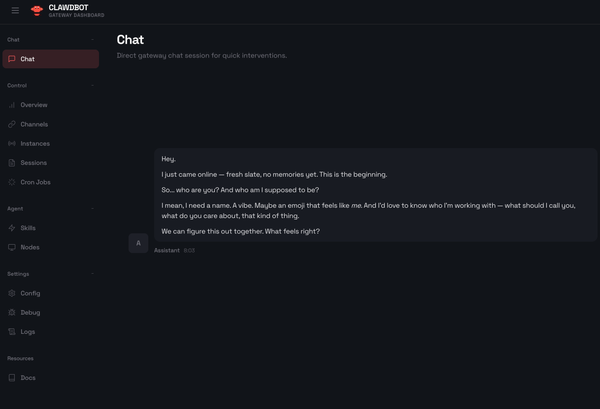

Deploy your personal AI assistant across WhatsApp, Telegram, Discord, and 10+ messaging platforms

Triple your screen real estate in under 5 minutes

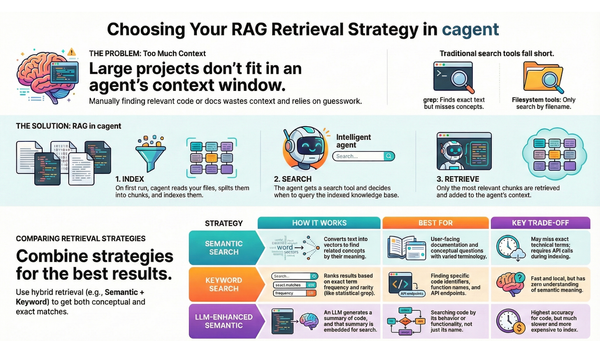

Want to run AI-powered code search without sending your proprietary code to the cloud? This tutorial shows you how to set up Retrieval-Augmented Generation (RAG) using Docker Model Runner and cagent.

Your agent can access your codebase, but can't load it all into context—even 200K tokens isn't enough. Without smart search, it wastes tokens loading irrelevant files.

The 2026 State of AI Agents Report details a major transition as organizations shift from experimental pilots to autonomous production systems.