Top 5 Docker Desktop Features That You Must Try in 2025

Docker Desktop has evolved far beyond containerization. These 5 features are reshaping development workflows: Model Runner (local AI), MCP Toolkit (secure agents), Docker Offload (cloud GPUs), Debug (enhanced troubleshooting), and Agentic Compose (AI infrastructure).

As someone who has been working with Docker for years and sharing knowledge through the Docker community, I'm genuinely excited about the direction Docker Desktop is heading. The platform has evolved far beyond simple containerization—it's now becoming the central hub for modern development workflows, especially in the AI era.

After testing and experimenting with the latest features, I've identified five game-changing capabilities that every developer should explore. These aren't just incremental improvements; they represent fundamental shifts in how we approach development, debugging, and AI integration.

1. Docker Offload: Supercharge Your AI Workloads with Cloud GPUs

The Problem: You're building AI applications locally, but your laptop's resources are limited. Running large language models or compute-intensive workloads locally either takes forever or simply isn't possible.

The Solution: Docker Offload seamlessly moves your AI workloads to high-performance cloud environments with just one click, while keeping your familiar local development workflow intact.

Why Docker Offload is Revolutionary

Docker Offload provides a truly seamless way to run your models and containers on cloud GPUs when local resources aren't sufficient. It frees you from infrastructure constraints by offloading compute-intensive workloads like large language models and multi-agent orchestration to high-performance cloud environments.

Key Benefits:

- Seamless Integration: Works with existing Docker workflows

- GPU Access: High-performance cloud GPUs designed for AI workloads

- No Setup: No complex infrastructure setup required

- Cost Effective: 300 free GPU minutes to get started, then $0.015 per GPU minute

- Local-like Experience: Port forwarding and bind mounts work just like local development

The service is currently in beta, offering developers the ability to scale their AI workloads without worrying about local hardware limitations.
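To get a feel for what those numbers mean for a real project, here is a rough back-of-the-envelope estimator. It is only a sketch: it assumes the 300 free minutes are a one-time allowance that is consumed first and that usage is billed per whole GPU minute, neither of which is confirmed here, so check the current pricing before relying on it. The function name is my own.

```python
FREE_GPU_MINUTES = 300          # free allowance quoted above
RATE_MILLI_USD_PER_MINUTE = 15  # $0.015 per GPU minute, kept as an integer


def estimate_offload_cost(gpu_minutes: int) -> float:
    """Return the estimated charge in USD for a total number of GPU minutes.

    Assumption: the free allowance is subtracted once, before any billing.
    """
    if gpu_minutes < 0:
        raise ValueError("gpu_minutes must be non-negative")
    billable = max(0, gpu_minutes - FREE_GPU_MINUTES)
    return billable * RATE_MILLI_USD_PER_MINUTE / 1000


for minutes in (120, 300, 1000):
    print(f"{minutes} GPU minutes -> ${estimate_offload_cost(minutes):.2f}")
```

Under these assumptions, anything up to 300 minutes costs nothing, and 1000 minutes works out to 700 billable minutes.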

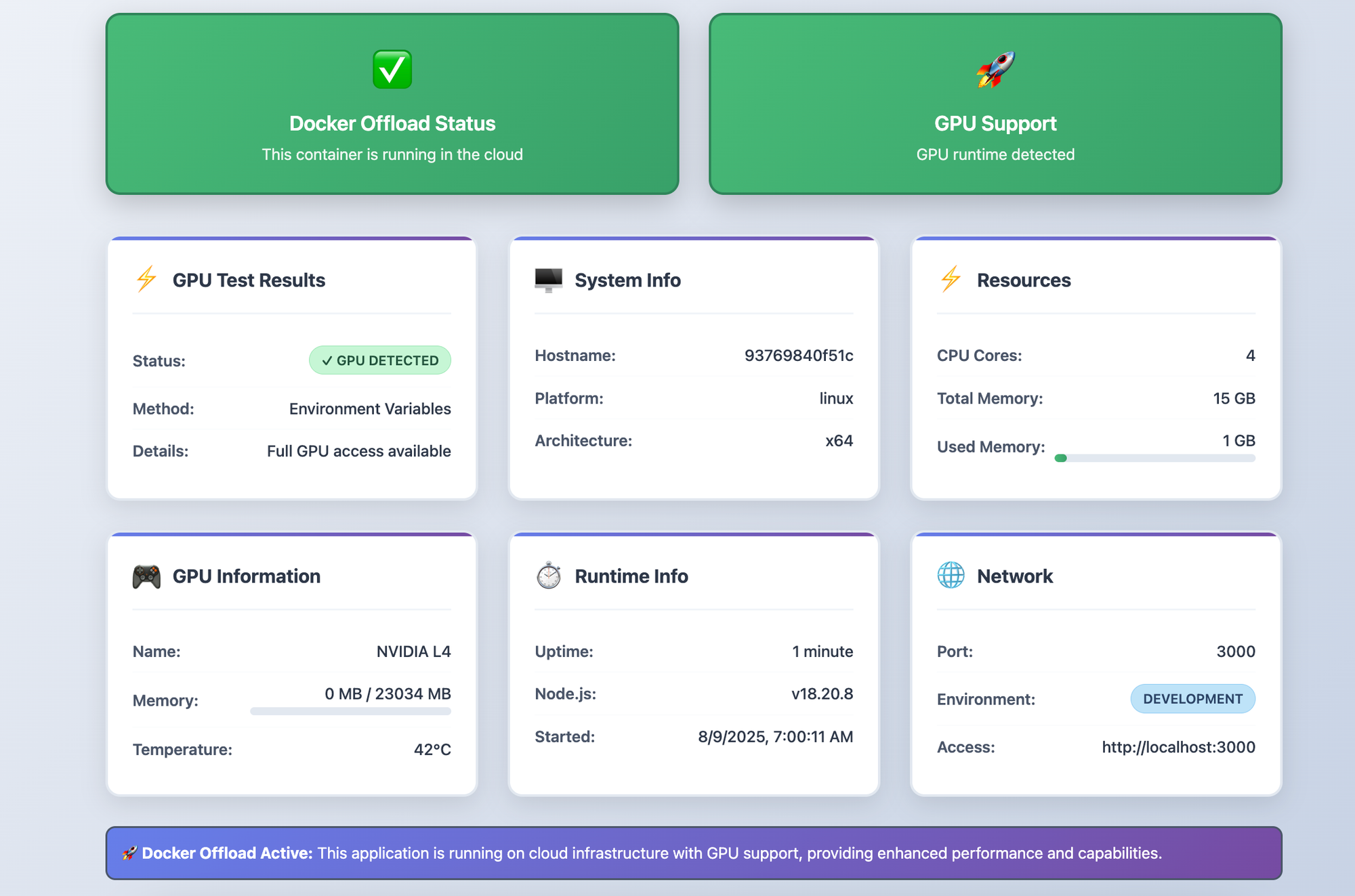

Let's deploy a simple Node.js web application demonstrating Docker Offload functionality with GPU support.

git clone https://github.com/ajeetraina/docker-offload-demo

cd docker-offload-demo

docker build -t docker-offload-demo-enhanced .

docker run --rm --gpus all -p 3000:3000 docker-offload-demo-enhanced

🐳 Docker Offload Demo running on port 3000

🔗 Access at: http://localhost:3000

📊 System Status:

🚀 Docker Offload: ENABLED

⚡ GPU Support: DETECTED

🖥️ GPU: NVIDIA L4

💾 Memory: 1GB / 15GB (6%)

🔧 CPU Cores: 4

⏱️ Uptime: 0 seconds

✅ Ready to serve requests!

2. Model Runner: Local LLM Execution Without the Complexity

The Problem: Running AI models locally typically involves managing Python environments, CUDA installations, model formats, and complex dependencies. Each model seems to need a different setup.

The Solution: Docker Model Runner treats AI models as first-class citizens in Docker, providing GPU-accelerated inference with simple CLI commands.

How Model Runner Changes Everything

What makes Model Runner unique is that models don't run in containers. Instead, Docker Desktop runs llama.cpp directly on your host machine for optimal GPU acceleration, while treating models as OCI artifacts in Docker Hub.

Getting Started

First, enable Model Runner:

# Enable Model Runner

docker desktop enable model-runner

# Enable with TCP support (optional)

docker desktop enable model-runner --tcp 12434

Basic Commands

# Check available commands

docker model --help

# Check if Model Runner is running

docker model status

# List available models (initially empty)

docker model ls

# Download a model

docker model pull ai/llama3.2:1B-Q8_0

# List downloaded models

docker model ls

# Run a single prompt

docker model run ai/llama3.2:1B-Q8_0 "Hi"

# Run in interactive mode

docker model run ai/llama3.2:1B-Q8_0

# Interactive chat mode started. Type '/bye' to exit.

# Remove a model

docker model rm ai/llama3.2:1B-Q8_0

Available Models

The growing model catalog hosted at https://hub.docker.com/u/ai includes:

- ai/gemma3 - Google's efficient model family

- ai/llama3.2 - Meta's Llama 3.2 models

- ai/qwq - Reasoning-focused model

- ai/mistral-nemo and ai/mistral - Mistral AI models

- ai/phi4 - Microsoft's efficient model

- ai/qwen2.5 - Alibaba's multilingual model

- ai/deepseek-r1-distill-llama - Reasoning-optimized (distilled version)
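The names above follow the familiar namespace/name:tag shape used throughout this post (for example ai/llama3.2:1B-Q8_0). If you script around the catalog, a small parser like the one below can help. It is a sketch, not Docker's own parsing logic; the helper names are hypothetical, and falling back to "latest" when no tag is given is my assumption based on how image references usually behave.

```python
from typing import NamedTuple


class ModelRef(NamedTuple):
    namespace: str
    name: str
    tag: str


def parse_model_ref(ref: str) -> ModelRef:
    """Split 'namespace/name:tag' into its parts.

    Assumption: a missing tag is treated as 'latest'.
    """
    repo, colon, tag = ref.partition(":")
    namespace, slash, name = repo.partition("/")
    if not slash or not namespace or not name:
        raise ValueError(f"expected 'namespace/name[:tag]', got {ref!r}")
    return ModelRef(namespace, name, tag if colon and tag else "latest")


print(parse_model_ref("ai/llama3.2:1B-Q8_0"))
print(parse_model_ref("ai/gemma3"))
```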

Connection Methods

Model Runner provides three ways to connect:

1. From within containers:

http://model-runner.docker.internal/

2. From the host via Docker Socket:

curl --unix-socket /var/run/docker.sock \

localhost/exp/vDD4.40/engines/llama.cpp/v1/chat/completions \

-H "Content-Type: application/json" \

-d '{"model":"ai/llama3.2:1B-Q8_0",...}'

3. From the host via TCP: When TCP support is enabled, you can access via port 12434 or use a helper container:

docker run -d --name model-runner-proxy -p 8080:80 \

alpine/socat tcp-listen:80,fork,reuseaddr tcp:model-runner.docker.internal:80
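If you would rather call the endpoint from code than from curl, the request is an ordinary OpenAI-style chat completion. The sketch below only builds the request so you can inspect it without Model Runner running. The container base URL is the one used in this post; the host URL assumes TCP support was enabled on port 12434 and that the same engines/llama.cpp/v1 path is served there. The function name is my own.

```python
import json
import urllib.request

# The first base URL appears in this article; the second is an assumption
# (TCP support enabled on port 12434, same path as inside containers).
CONTAINER_BASE_URL = "http://model-runner.docker.internal/engines/llama.cpp/v1/"
HOST_TCP_BASE_URL = "http://localhost:12434/engines/llama.cpp/v1/"


def build_chat_request(base_url: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) a chat-completions POST request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


request = build_chat_request(HOST_TCP_BASE_URL, "ai/llama3.2:1B-Q8_0", "Hi")
print(request.get_method(), request.full_url)

# To actually send it (requires Model Runner to be reachable):
#     with urllib.request.urlopen(request) as response:
#         reply = json.load(response)
#         print(reply["choices"][0]["message"]["content"])
```

Keeping the build step separate from the send step makes it easy to switch between the container and host URLs depending on where your code runs.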

Building GenAI Applications

Here's how to build a complete GenAI application using Model Runner with the provided demo:

Step 1. Download the model:

docker model pull ai/llama3.2:1B-Q8_0

Step 2. Clone the repository:

git clone https://github.com/dockersamples/genai-app-demo

cd genai-app-demo

Step 3. Set environment variables:

BASE_URL: http://model-runner.docker.internal/engines/llama.cpp/v1/

MODEL: ai/llama3.2:1B-Q8_0

API_KEY: ${API_KEY:-ollama}

Step 4. Start the application:

docker compose up -d

Step 5. Access the application: Open http://localhost:3000 to interact with your locally running AI application.
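The three variables from Step 3 are all an application needs to find the model. The demo's backend is written in Go; the Python sketch below is only my illustration of the same idea, not the demo's code. It mirrors the ${API_KEY:-ollama} behavior, where an unset or empty value falls back to a default. The defaults for BASE_URL and MODEL are my assumption, taken from the values shown above.

```python
import os
from typing import Mapping, NamedTuple


class LLMConfig(NamedTuple):
    base_url: str
    model: str
    api_key: str


def load_llm_config(env: Mapping[str, str] = os.environ) -> LLMConfig:
    """Read BASE_URL, MODEL and API_KEY; missing or empty values use defaults.

    Only the API_KEY default ('ollama') comes from the article; the other
    two defaults are assumptions for illustration.
    """
    return LLMConfig(
        base_url=env.get("BASE_URL")
        or "http://model-runner.docker.internal/engines/llama.cpp/v1/",
        model=env.get("MODEL") or "ai/llama3.2:1B-Q8_0",
        api_key=env.get("API_KEY") or "ollama",
    )


print(load_llm_config({"MODEL": "ai/gemma3"}))
```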

3. MCP Toolkit: The Missing Link for AI Agent Development

The Problem: AI agents need to interact with external tools and services, but setting up these connections is fragmented, insecure, and complex. Discovery is difficult, setup is clunky, and security is often an afterthought.

The Solution: Docker MCP Toolkit provides a secure, standardized way to connect AI agents to 100+ verified tools through the Model Context Protocol.

Why MCP Toolkit is Game-Changing

The Model Context Protocol (MCP) is becoming the standard for AI-tool integration, and Docker's implementation solves the three major challenges:

- Discovery: Centralized catalog of verified tools on Docker Hub

- Security: Containerized execution with access controls and isolation

- Simplicity: One-click installation and connection to popular MCP clients
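Under the hood, MCP clients and servers exchange JSON-RPC 2.0 messages. To make that concrete, here is a minimal sketch of the two messages an agent sends most often: one to list a server's tools and one to call a tool. The tool name "search" and its "query" argument are hypothetical, and a real session also starts with an initialization handshake that I have left out.

```python
import itertools
import json
from typing import Optional

_request_ids = itertools.count(1)  # JSON-RPC requests carry a unique id


def jsonrpc_request(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request message."""
    message = {"jsonrpc": "2.0", "id": next(_request_ids), "method": method}
    if params is not None:
        message["params"] = params
    return message


def list_tools_request() -> dict:
    """Ask an MCP server which tools it offers."""
    return jsonrpc_request("tools/list")


def call_tool_request(name: str, arguments: dict) -> dict:
    """Invoke one tool by name with its arguments."""
    return jsonrpc_request("tools/call", {"name": name, "arguments": arguments})


print(json.dumps(list_tools_request()))
# "search" and "query" are placeholder names, not a real catalog entry.
print(json.dumps(call_tool_request("search", {"query": "Docker Model Runner"})))
```

With the toolkit, your MCP client builds and sends these messages for you; the point here is only to show how small the protocol surface is.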

The Docker MCP Catalog and Toolkit

Docker MCP Catalog serves as your starting point for discovery, surfacing a curated set of popular, containerized MCP servers. It's integrated directly into Docker Hub with 100+ verified tools from trusted partners like:

- Stripe - Payment processing

- Elastic - Search and analytics

- Heroku - Cloud platform operations

- Pulumi - Infrastructure as code

- Grafana Labs - Monitoring and observability

- Kong Inc. - API management

- Neo4j - Graph database operations

- New Relic - Application monitoring

MCP Toolkit complements the catalog by:

- Simplifying installation and credential management

- Enforcing access control and security policies

- Securing the runtime environment with container isolation

- Providing one-click connections to leading MCP clients

Supported MCP Clients

The toolkit integrates seamlessly with popular clients:

- Gordon (Docker AI Agent)

- Claude Desktop

- Cursor

- VS Code

- Windsurf

- continue.dev

- Goose

Security-First Approach

Every MCP server runs in an isolated container with:

- Image signing and attestation for verified integrity

- No host filesystem access by default

- Credential management through secure Docker Hub integration

- Access control with user-defined permissions

- Resource limits to prevent abuse

Building powerful, intelligent AI agents has never been easier with this secure, standardized approach.

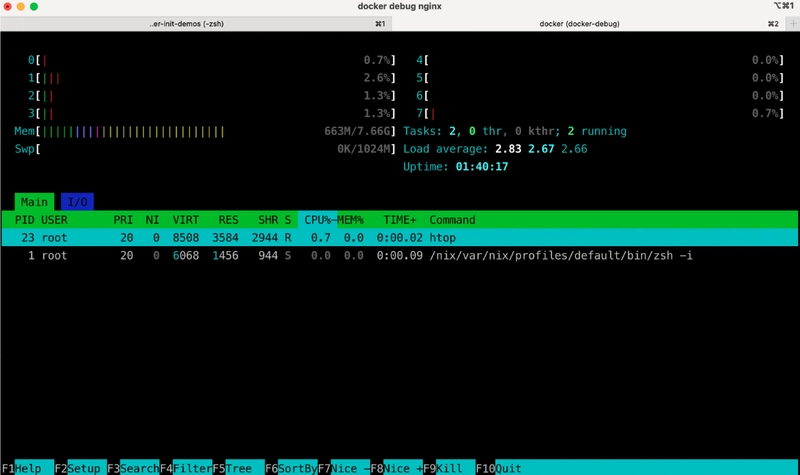

4. Docker Debug: Finally, Proper Container Debugging

The Problem: Debugging containers has always been painful. docker exec needs a running container and a shell inside the image, so it fails on minimal images and on stopped containers. When a container exits unexpectedly, there is nothing left to exec into.

The Solution: Docker Debug gives you an enhanced shell with debugging tools into any container or image, whether it's running, stopped, or minimal.

Getting Started with Docker Debug

Docker Debug is available in Docker Desktop 4.27.0+ for Pro subscribers. First, verify it's working:

docker debug --help

Usage: docker debug [OPTIONS] {CONTAINER|IMAGE}

Get an enhanced shell with additional tools into any container or image

Options:

-c, --command string Evaluate the specified commands instead

--host string Daemon docker socket to connect to

--shell shell Select a shell. Supported: "bash", "fish", "zsh", "auto"

--version Display version of the docker-debug plugin

Debugging Docker Images

You can debug any image directly, even without a running container:

docker debug nginx

This pulls the image and gives you an enhanced shell:

▄

▄ ▄ ▄ ▀▄▀

▄ ▄ ▄ ▄ ▄▇▀ █▀▄ █▀█ █▀▀ █▄▀ █▀▀ █▀█

▀████████▀ █▄▀ █▄█ █▄▄ █ █ ██▄ █▀▄

▀█████▀ DEBUG

Builtin commands:

- install [tool1] [tool2] ... Add Nix packages

- uninstall [tool1] [tool2] ... Uninstall NixOS package(s)

- entrypoint Print/lint/run the entrypoint

- builtins Show builtin commands

Checks:

✓ distro: Debian GNU/Linux 12 (bookworm)

✓ entrypoint linter: no errors (run 'entrypoint' for details)

Note: This is a sandbox shell. All changes will not affect the actual image.

Key Features

Entrypoint Analysis: Understand how containers start:

docker > entrypoint

Understand how ENTRYPOINT/CMD work and if they are set correctly.

From CMD in Dockerfile:

['nginx', '-g', 'daemon off;']

From ENTRYPOINT in Dockerfile:

['/docker-entrypoint.sh']

By default, any container from this image will be started with following command:

/docker-entrypoint.sh nginx -g daemon off;
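The rule behind that output is simple: when both ENTRYPOINT and CMD are given in exec (list) form, the CMD items are appended to the ENTRYPOINT as arguments. Here is a tiny sketch of that rule; the function name is my own, and it deliberately ignores shell-form instructions.

```python
import shlex
from typing import List, Optional


def effective_command(
    entrypoint: Optional[List[str]], cmd: Optional[List[str]]
) -> List[str]:
    """Combine exec-form ENTRYPOINT and CMD: CMD becomes the arguments."""
    return list(entrypoint or []) + list(cmd or [])


argv = effective_command(["/docker-entrypoint.sh"], ["nginx", "-g", "daemon off;"])
print(argv)
print(shlex.join(argv))  # shell-quoted form of the same argument list
```

Note that shlex.join quotes the last argument because it contains a space and a semicolon, while the Docker Debug output above prints it unquoted. It is still a single argument in both cases.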

Nix Package Manager: Install debugging tools on demand:

install crossplane

# Tip: You can install any package available at: https://search.nixos.org/packages.

Built-in Tools: Access to vim, htop, and other essential debugging utilities without installation.

Debugging Running Containers

# Start a container

docker run -d -p 6379:6379 redis

# Debug the running container

docker debug <container-name>

For a running container, the session banner notes that this is an attach shell: changes you make to the container filesystem are visible to the container directly, while the /nix directory that holds the debug tools stays invisible to it.

Enhanced Shell Experience

Docker Debug provides:

- Descriptive prompt showing container context

- Auto-detection of your preferred shell

- Persistent history across debug sessions

- Rich toolset via Nix package manager

5. Agentic Compose: AI Agents as Infrastructure

The Problem: Building AI agent systems requires orchestrating multiple models, tools, and services. Each framework has its own way of defining agents, and scaling them is complex.

The Solution: Docker Compose now supports AI agents as first-class citizens, allowing you to define, run, and scale agentic applications using familiar YAML syntax.

The Agentic Compose Revolution

Docker now makes it easy to build, ship, and run agents and agentic applications. With a single compose.yaml, you can define your open models, agents, and MCP-compatible tools, then spin up your full agentic stack with a simple docker compose up.
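To picture what such a file contains, here is the rough shape as a Python dictionary, plus a small check that every model a service references is actually defined. Treat it as a sketch: the layout reflects my understanding of the Compose models element (a top-level models section, referenced by name from each service), and the service names are hypothetical. Consult the Compose file reference for the authoritative syntax.

```python
def missing_model_refs(compose: dict) -> dict:
    """Map each service to the models it references that are not defined."""
    defined = set(compose.get("models", {}))
    missing = {}
    for service_name, service in compose.get("services", {}).items():
        # Iterating works for both a list of names and a name-keyed mapping.
        refs = service.get("models", [])
        unknown = sorted(ref for ref in refs if ref not in defined)
        if unknown:
            missing[service_name] = unknown
    return missing


# Hypothetical stack: two services sharing one locally run model.
compose = {
    "services": {
        "agent": {"build": ".", "models": ["llm"]},
        "reviewer": {"build": "./reviewer", "models": ["llm", "embedder"]},
    },
    "models": {
        "llm": {"model": "ai/llama3.2:1B-Q8_0"},
    },
}

print(missing_model_refs(compose))
```

Here the reviewer service refers to an embedder model that was never declared, which is exactly the kind of mistake worth catching before docker compose up.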

Framework Integration

Agentic Compose integrates with today's most popular frameworks:

LangGraph: Define your LangGraph workflow, wrap it as a service, plug it into compose.yaml, and run the full graph with docker compose up.

Vercel AI SDK: Compose makes it easy to stand up supporting agents and services locally.

Spring AI: Use Compose to spin up vector stores, model endpoints, and agents alongside your Spring AI backend.

CrewAI: Compose lets you containerize CrewAI agents for better isolation and scaling.

Google's ADK: Easily deploy your ADK-based agent stack with Docker Compose - agents, tools, and routing layers all defined in a single file.

Agno: Use Compose to run your Agno-based agents and tools effortlessly.

Integration with Docker's AI Features

Agentic Compose works seamlessly with Docker's broader AI suite:

Docker MCP Catalog: Instant access to a growing library of trusted, plug-and-play tools for your agents. Just drop what you need into your Compose file.

Docker Model Runner: Pull open-weight LLMs directly from Docker Hub, run them locally with full GPU acceleration using built-in OpenAI-compatible endpoints.

Docker Offload: When local resources aren't sufficient, seamlessly offload compute-intensive workloads like large language models and multi-agent orchestration to high-performance cloud environments.

Real-World Example: Multi-Agent Fact Checker

Here's an example from the Docker samples showing a collaborative multi-agent fact checker using Google ADK:

The Critic agent gathers evidence via live internet searches using DuckDuckGo through the Model Context Protocol (MCP), while the Reviser agent analyzes and refines conclusions using internal reasoning alone. The system showcases how agents with distinct roles and tools can collaborate under orchestration.

Production Deployment

Deploy your agentic applications to production with familiar commands:

# Deploy to Google Cloud Run

gcloud run compose up

Support for Azure Container Apps is coming soon, providing multiple deployment options for your AI agent systems.

Getting Started

Explore the complete collection of agentic examples in Docker's sample projects.

Bringing It All Together: The Complete AI Development Stack

The real magic happens when you combine these features. Here's how they work together:

- Model Runner provides local LLM execution with GPU acceleration

- MCP Toolkit connects your agents to external tools and services securely

- Agentic Compose orchestrates multi-agent systems using familiar Docker workflows

- Docker Offload scales compute-intensive workloads to the cloud seamlessly

- Docker Debug helps troubleshoot issues across your entire AI stack

A Real Development Workflow

Using the provided genai-app-demo as an example:

- Pull your model:

docker model pull ai/llama3.2:1B-Q8_0

- Clone the demo:

git clone https://github.com/dockersamples/genai-app-demo

- Configure environment: Set BASE_URL, MODEL, and API_KEY

- Start the stack:

docker compose up -d

- Access your app: Open http://localhost:3000

The Go backend connects to Model Runner's API, the React frontend talks to the backend, and everything runs locally. On macOS, you can watch the GPU usage in Activity Monitor.

Getting Started Today

Ready to explore these features? Here's your action plan:

Step 1: Update Docker Desktop

Ensure you're running Docker Desktop 4.40+ for Model Runner and 4.27+ for Docker Debug.

Step 2: Enable Features

# Enable Model Runner

docker desktop enable model-runner

# Enable MCP Toolkit (via Docker Desktop Settings > Beta features)

Step 3: Try Each Feature

- Model Runner:

docker model pull ai/llama3.2:1B-Q8_0

- Debug:

docker debug nginx

- MCP Toolkit: Enable in Docker Desktop's Beta features

- Offload: Sign up for beta access

- Agentic Compose: Explore the sample projects

The Future of Development is Here

These five features represent more than incremental improvements: they signal a fundamental shift toward AI-native development. We're moving toward a world where AI models are as easy to manage as container images, debugging comes with a rich toolset on demand, AI agents are infrastructure components, and local and cloud resources blend seamlessly.

The tools are here. The ecosystem is growing. The question isn't whether to adopt these features—it's how quickly you can integrate them into your workflow to stay competitive in the AI era.

Start with Model Runner to experience local LLM execution, then gradually explore the others. Before you know it, you'll be building sophisticated AI-powered applications with the same ease and confidence that Docker brought to traditional software development.